There was quite a lot of coverage last year about a paper by Millar et al. which suggested that the carbon budget that would keep warming below 1.5 °C was greater than had been suggested earlier. I wrote about it here, here, and here.

One of the reasons for the difference between the Millar et al. carbon budget and other estimates was the way in which we would determine when we had reached some climate target. In a new paper, Richardson, Cowtan and Millar look into this in some detail. Essentially, there can be differences between how we estimate global surface temperatures if we’re using models, compared to how we do so from observations. From models, we would typically present an estimate based on global coverage and using surface air temperatures. When using observations, we typically mix surface air temperatures over land with sea surface temperatures over the oceans (air-sea blended). In addition, an observational dataset may have to switch from using air temperatures to sea surface temperatures in regions where sea ice has retreated (fully blended). Finally, some of the observational datasets do not cover the entire globe (blended-masked).
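To make the four definitions concrete, here is a minimal sketch, assuming gridded anomaly fields `tas` (surface air temperature) and `tos` (sea surface temperature) on a regular latitude-longitude grid, with land and sea-ice fractions for each cell. The function names, the cos(latitude) area weighting and the simple linear blending by area fraction are illustrative assumptions, not the exact procedure used by Richardson, Cowtan and Millar. Holding `ice_frac` at a fixed climatology gives something like the air-sea blended case, letting it change over time gives the fully blended case, and passing a coverage mask gives blended-masked.

```python
import numpy as np

def global_mean(field, lat, mask=None):
    """Area-weighted mean of a (lat, lon) field using cos(latitude) weights.

    Cells where `mask` is False (no observations) get zero weight,
    which mimics the coverage bias of a masked dataset.
    """
    weights = np.cos(np.deg2rad(lat))[:, None] * np.ones_like(field, dtype=float)
    if mask is not None:
        weights = np.where(mask, weights, 0.0)
    return float(np.sum(weights * field) / np.sum(weights))

def blended_field(tas, tos, land_frac, ice_frac):
    """Air temperature over land and sea ice, sea surface temperature over open ocean."""
    # Fraction of each cell treated as "air": all land plus the ice-covered part of the ocean.
    air_weight = land_frac + (1.0 - land_frac) * ice_frac
    return air_weight * tas + (1.0 - air_weight) * tos

# Hypothetical usage, given model fields and an observational coverage mask `observed`:
# global_air     = global_mean(tas, lat)
# blended        = global_mean(blended_field(tas, tos, land_frac, ice_frac), lat)
# blended_masked = global_mean(blended_field(tas, tos, land_frac, ice_frac), lat, observed)
```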

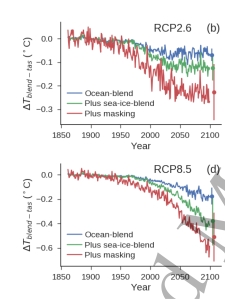

As shown in an earlier paper, estimates of surface warming depend on how the surface temperature is determined; if we were able to estimate surface temperatures using air temperatures over the whole globe, we would estimate more warming than if we did so using an air-sea blended dataset, which would in turn show more than one that is blended and suffers from coverage bias. The figure on the right (from Richardson et al.) shows how the difference depends on how the temperature dataset is constructed, and on the emission pathway that we actually follow.

As a consequence, our carbon budget estimates (for example, the carbon budget that gives a 66% chance of staying below 2 °C) also depend on how we determine global surface temperatures. If we’re using a dataset like HadCRUT4, which is blended and suffers from coverage bias, then the budget will be about 60 GtC greater than if we were to determine it using surface air temperatures with global coverage. Equivalently, we will cross the threshold about 7-8 years later.

I think it’s very useful to have this all clarified, as it would seem to suggest that the Millar et al. result wasn’t really some indication of a problem with climate models (as suggested by some) but mostly a consequence of the different ways in which global temperature datasets are determined. It might also have been nice if we’d been a bit more careful as to how we defined these various climate targets initially, but I suspect that this is mostly because many of the issues that seem obvious now weren’t obvious when these targets were first suggested.

I also don’t think this really makes much difference in terms of what this implies. I had a brief chat with Glen Peters on Twitter, and one way to consider this is that if we stick with the original carbon budgets, but assume that the correct dataset is one that is both blended and masked, then we go from having a 66% chance of achieving the target, to an 80% chance; not exactly a massive change in the probability of success. Also, whatever carbon budget we use, there is very little left. At best, we’ve gone from “almost certainly won’t achieve this” to “maybe we can, if we try very hard”.

Links:

Global temperature definition affects achievement of long-term climate goals, by Richardson, Cowtan and Millar.

Emission budgets and pathways consistent with limiting warming to 1.5 °C by Millar et al.

A bit more about carbon budgets: a post that discusses some of the possible carbon cycle implications of Millar et al. (which I don’t really discuss in the post above).

I think we can easily stay under the 1.5 degree target if scientists and technologists will get off their backsides and solve the problems that are driving the temperature rise. I think we will not easily stay under 2 degrees if it requires that we incorporate significant changes in the way we live or aspire to live.

How are we doing with CO2 levels?

Daily CO2

March 1, 2018: 408.68 ppm

March 1, 2017: 407.61 ppm

CO2e? Oh, I don’t know. A bit higher, maybe. What’s the worst that could happen? And how soon would that occur? I think it’s ok if it’s out beyond the next election cycle.

Cheers,

Mike

Great! I was going to suggest an emissions post, but it needed a new paper. 🙂

My current problem is how we go from GHG emissions to airborne fractions or CO2eq (making sure to include aerosols and land use, similar to the AR5 net RF calculations).

From NOAA we have AGGI (absent aerosols and land use) …

https://www.esrl.noaa.gov/gmd/aggi/aggi.html

And from the European Environment Agency we have …

https://www.eea.europa.eu/data-and-maps/indicators/atmospheric-greenhouse-gas-concentrations-10/assessment

(Fig. 1 and the “Table” tab)

The EEA includes aerosols (but maybe twice, according to their “All greenhouse gases including aerosols” column and their “All Greenhouse gases*” column; the * (asterisk) remark reads “(*) assuming radiative forcing values for aerosols from previous IPCC reports.”)

Anyways, I’m trying to understand the significant differences between the CO2eq numbers from NOAA and EEA. Some people also throw in tropospheric ozone (O3) as a GHG, like this Wikipedia article …

https://en.wikipedia.org/wiki/Carbon_dioxide_equivalent#Equivalent_carbon_dioxide

CO2e = 280 e^(3.3793/5.35) ppmv = 526.6 ppmv (which is higher than the NOAA AGGI number for 2016)
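The Wikipedia number is the simplified CO2 forcing expression, ΔF = 5.35 ln(C/C0), run backwards: find the CO2 concentration that on its own would produce the total forcing. A minimal sketch, assuming C0 = 280 ppm and that 3.3793 W/m2 is the total forcing being converted (the function name is illustrative):

```python
import math

ALPHA = 5.35  # W/m^2, coefficient in the simplified CO2 forcing expression
C0 = 280.0    # ppm, assumed pre-industrial CO2 concentration

def co2_equivalent(total_forcing):
    """CO2 concentration (ppm) that alone would give `total_forcing` (W/m^2)."""
    # Inverts dF = ALPHA * ln(C / C0)
    return C0 * math.exp(total_forcing / ALPHA)

print(round(co2_equivalent(3.3793), 1))  # 526.6
```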

http://cdiac.ess-dive.lbl.gov/pns/current_ghg.html

So CO2eq = 409.344 ppmv (EEA, last column, 2015; do we really need six significant digits?), 444.536 ppmv (ditto, 2nd column, 2015), 489 ppmv (NOAA AGGI, 2016) or 526.6 ppmv (someone at Wikipedia doing the T.J. Blasing calculation, 2015 or 2016).

Rounded: 409, 445, 489, 527

409 < CO2eq < 527 (circa 2015/2016) ???

I'm thinking that keeping it in net RF (W/m2) is the best way to go, as per AR5; it too would have an error bar about the best (central) estimate. Maybe the above CO2eq range is somewhat consistent with the corresponding RF range.
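Going the other way, the same simplified expression turns each of the CO2eq numbers above back into a net forcing, which is one way to check whether the CO2eq range is consistent with an RF range. This sketch assumes every source defines CO2eq through ΔF = 5.35 ln(C/280); if NOAA or the EEA build their numbers differently, the comparison is only indicative.

```python
import math

def forcing_from_co2eq(c_eq, c0=280.0, alpha=5.35):
    """Net radiative forcing (W/m^2) implied by a CO2-equivalent concentration (ppm)."""
    return alpha * math.log(c_eq / c0)

# The rounded CO2eq values quoted above
for c in (409, 445, 489, 527):
    print(f"{c} ppm -> {forcing_from_co2eq(c):.2f} W/m^2")
```

On these assumptions the 409-527 ppm spread corresponds to roughly 2.0 to 3.4 W/m2.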

Anyways, doing some head scratching (getting into the weeds again) in an attempt to extrapolate out to ~2XCO2eq given current atmospheric GHG trendlines (Curve Fitters International, Inc.).
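For the extrapolation itself, a minimal curve-fitting sketch: fit a trend to an annual CO2eq series and solve for the year it reaches a target. The function name, the choice between a linear and an exponential trend, and reading "2XCO2eq" as 560 ppm (twice 280 ppm) are all assumptions; no data are included, so supply your own series.

```python
import numpy as np

DOUBLED_CO2 = 2 * 280.0  # ppm, assuming "2XCO2eq" means twice pre-industrial CO2

def year_reaching(years, values, target, exponential=False):
    """Fit a trend to (years, values) and return the year it reaches `target`.

    exponential=False: straight line in values (constant absolute growth).
    exponential=True:  straight line in log(values) (constant fractional growth).
    """
    years = np.asarray(years, dtype=float)
    values = np.asarray(values, dtype=float)
    y = np.log(values) if exponential else values
    goal = np.log(target) if exponential else target
    t0 = years[0]  # shift the time origin so the fit is well conditioned
    slope, intercept = np.polyfit(years - t0, y, 1)
    if slope <= 0:
        raise ValueError("trend is not increasing, so the target is never reached")
    return float(t0 + (goal - intercept) / slope)

# Hypothetical usage with your own annual series:
# year_reaching(years, co2eq_ppm, DOUBLED_CO2, exponential=True)
```

For a series that is accelerating, the exponential fit will tend to reach the target sooner than a linear one; neither says anything about how emissions will actually evolve.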

Everett,

There is this paper: Framing climate goals in terms of cumulative CO2-forcing-equivalent emissions. I haven’t quite worked out what they’re suggesting, though.

Good post.

We have to have a baseline to work with.

Whatever we choose will have problems to someone.

There is no right solution to the choice of baseline but some have better features than others.

Satellites do have a better view of the energy budget and give better real-time estimates of these quantities; however, as we are finding out, they lack the permanence and stability to provide like-for-like measurement over time.

Land measurement suffers from instrument stability issues and also, in many instances, from very slow drift in the surroundings, such as elevation problems and urbanisation.

The measuring devices themselves, like the satellites but more slowly, suffer comparison problems as they change.

Sea measurement has the combination of both location and instrumentation problems.

So we have to choose an imperfect system, stick with it or adapt a better one as we go along and argue for its use.

Posts like this that point out a problem risk people looking at the problems and using them to ignore the bigger picture.

Posts that gloss over the problems trying to protect the bigger picture at all costs are what lost me years ago.

Comments on post, being helpful.

6-8 years might seem far too long for many here who feel we have already passed the point of action.

For those still hopeful it gives more working space.

Nick Stokes and Steven and others have made the point at times that we do not need to have all the observation points to make up a useable reference system.

The land-sea mix has potential fatal flaws if warming occurs at different rates over land and sea.

This is a very important point as a lot of the arguments use this as a get out of jail card and it makes our land based temperature arguments irrelevant and redundant.

We live on the land and depend on land temperatures now, not what may come out of the sea some time in the future depending on the unknown equalisation rates and ocean overturning.

So.

Choose a data set.

Preferably land only.

Accessible but remote from local change effects.

Preferably ones already in use for a long time (history)

Select an elevation range, ignore mountains and Grand Canyons.

Use that as the standard world temp for better or worse, like Greenwich Mean Time.

Tack the other different comprehensive data sets onto this for useful comparisons.

Things like those Everett suggests, CO2 equivalents and aerosol considerations, get their own place in the sun but not as the basis.

Too many assumptions, particularly about aerosols (which ones, what effects and what amounts), and looking at GHGs without taking into account the variation of the main GHG effector, water vapour, which dwarfs the minor players. These considerations are important and useful and affect future planning, but we need a reference standard in place.

“I think we can easily stay under the 1.5 degree target if scientists and technologists will get off their backsides and solve the problems that are driving the temperature rise.”

We could also visit nearby stars if those lazy physicists would just repeal the law limiting travel to the speed of light.

Reference points and definitions:

couple of observations: when I started at BE (2012) I made a stink about doing an “all air” global product. Hmm but Marine air temps kinda suck. The next idea was to do a global product, BUT

in the arctic use the water temperature under ice, as opposed to using the air temp above the ice.

Reason: the water temp under ice should be more predictable (less variation) than the air temp above ice (correlation length in the arctic for air temps has strong seasonal differences).

Anyway, that idea never caught on. We still publish it. As a reference (since we have to pick a reference) I think it’s better.

Or you can use angech’s suggestion: maybe regional reference points (CET is an obvious one).

New Zealand is another.

Interesting problem (ps, I love the clarity of Cowtan and Richardson – good work)

Steven Mosher,

If some Arctic region’s air warms from -30 C to just above freezing then that’s lots of warming. Water under the ice would be stuck near the melt point and give ~0 C change. Then somewhere that was -30 C is now melting despite “no” warming, and from one way of looking at it that is nonsense!

My current preference is air over sea ice then SSTs once it’s gone with an algorithm that doesn’t “jump” when sea ice melts or expands. I suspect that’s how Berkeley works but we need to confirm that.
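A toy illustration of the “jump” problem, assuming a single grid cell with a hypothetical air temperature of -20 °C over the ice and an SST held near the freezing point of seawater (about -1.8 °C). A rule that switches from air temperature to SST once the ice fraction drops below one half jumps by the full air-sea difference, whereas weighting the two by ice fraction changes only slightly. Both functions are hypothetical; neither is meant to describe how Berkeley or any other dataset actually does it.

```python
def switched(tas, tos, ice_frac, threshold=0.5):
    """Use air temperature while the cell is mostly ice-covered, SST otherwise."""
    return tas if ice_frac >= threshold else tos

def weighted(tas, tos, ice_frac):
    """Weight air temperature and SST by sea-ice fraction, so the result varies smoothly."""
    return ice_frac * tas + (1.0 - ice_frac) * tos

tas, tos = -20.0, -1.8  # hypothetical air temperature over ice and near-freezing SST (deg C)
for f in (0.51, 0.49):
    print(f, switched(tas, tos, f), round(weighted(tas, tos, f), 2))
```

A 2% loss of ice moves the switched value by about 18 °C but the weighted value by well under half a degree.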

Different choices can make sense but change carbon budgets in potentially expensive ways. Luckily this is up to the negotiators & policymakers to work out! For researchers, I think that checking this when comparing studies is important. We showed already that it affects your climate response estimates, but it’s possible to imagine one study on coral reefs that says 2 C is a danger limit from HadCRUT4 and another that says 2.3 C is the limit based on CMIP5, and then find out that they’re actually saying the same thing.

ya. mark. again let me praise your clarity and jargon-free writing. it’s true the water under ice won’t show that much variability. i suppose one could make the same argument for air temps over melting snow or ice. the really cool thing about your work is that you make temperature series policy relevant. I always assumed that the field was largely academic.. technical. but you and kevin have made it relevant (in your other work as well).

so kudos.

Interesting, per the paper HADCRUT underestimates the temperature increase since 1861-1880 by 20% relative to BEST, which has better treatment of missing data and sea ice. Note that the same bias impacts energy-balance ECS and TCR estimates. As Richardson et al. showed previously, most of the disagreement between models and EBM is due to the use of HADCRUT in EBM. Substituting BEST for HADCRUT in EBM gives a TCR of around 1.6, which is in much better agreement with CMIP5 and with temperature data since 1970.
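The sensitivity to the temperature dataset follows directly from the energy-balance estimate, TCR = F_2x ΔT/ΔF: TCR is proportional to the observed warming ΔT, so any percentage underestimate of ΔT carries straight through into TCR. A minimal sketch, assuming F_2x = 3.7 W/m2 and purely hypothetical values for ΔT, ΔF and the dataset ratio (not numbers from the paper):

```python
F_2X = 3.7  # W/m^2, assumed forcing from a doubling of CO2 (approximately the AR5 value)

def tcr_energy_balance(delta_t, delta_f, f_2x=F_2X):
    """Energy-balance transient climate response: TCR = F_2x * dT / dF."""
    return f_2x * delta_t / delta_f

# Hypothetical inputs, for illustration only
delta_f = 2.0  # W/m^2 change in forcing between base and final periods
delta_t = 0.75 # K warming in a coverage-biased dataset
scale = 1.2    # hypothetical ratio of a more complete dataset's warming to that one

tcr_biased = tcr_energy_balance(delta_t, delta_f)
tcr_complete = tcr_energy_balance(scale * delta_t, delta_f)
print(round(tcr_biased, 2), round(tcr_complete, 2), round(tcr_complete / tcr_biased, 2))
```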

Note that the recent Cowtan et al. paper on SST implies that the ocean warming since the 19th century could be underestimated in existing SST series. Substituting their very provisional SST series into BEST increases EBM TCR to roughly 1.8. So, use of existing observations to project both EBM ECS and 2C carbon budgets has significant limitations.

I guess I’m going to be known as the “cause and effect guy” around here, but this is a question I’ve thought of before and never asked:

Aren’t the negative consequences that we are concerned with subject to the actual system dynamics that are also predictive of mean temperatures in the models?

IOW, it isn’t as simple as saying (e.g.) ocean temps may be higher or lower, so global mean temps may be off one way or the other, but that factor would also be affecting melting rates or storm patterns, so those might be more or less problematic.

Is this factored in when thinking about the “budget” goals?

zebra,

I think the basic answer to this is essentially no. In a sense, what we’re interested in is how things will change relative to some base period (say pre-industrial). This depends mostly on how much the temperature changes, and not particularly on the actual temperature (for relatively small differences in absolute temperature).
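A small sketch of why the absolute level mostly drops out: anomalies are taken relative to the mean over a base period, so adding a constant offset to every value leaves them unchanged. The function and its base-period arguments are illustrative assumptions, and real datasets compute anomalies per station or grid cell and per month rather than on a single series.

```python
import numpy as np

def anomalies(years, temps, base_start, base_end):
    """Temperatures minus their mean over the base period [base_start, base_end]."""
    years = np.asarray(years)
    temps = np.asarray(temps, dtype=float)
    in_base = (years >= base_start) & (years <= base_end)
    if not in_base.any():
        raise ValueError("no data in the base period")
    return temps - temps[in_base].mean()

# Hypothetical series: a constant 0.5 K offset in absolute temperature cancels out
yrs = np.arange(1850, 1901)
t = 13.7 + 0.01 * (yrs - 1850)
print(np.allclose(anomalies(yrs, t, 1850, 1860), anomalies(yrs, t + 0.5, 1850, 1860)))
```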

MarkR

It would be cool if there were a simple way to make the budget more personal and individual.

More emphasis might be placed on metrics that deal with changes in time rather than temperature or space.

On a generational scale, the Anthropocene has more to do with human dependence on stable planting seasons, rain and harvest dates than the statistics of overnight temperatures or ocean heat content .

This distinction already motivates some notable contestants in the Climate Wars.

Oh dear, we can’t have that! That would make it all too personal and individual!

Once you apply the denominators of global population and current “spend” rates of the budget, small changes to the numerator/budget for 2C, 1.5C, etc. are rendered pretty “meh”.

Say you start with some pretty bog standard data points – 7.6 billion global population, 42 GtCO2 emissions in 2017 (and apparently still rising, but let’s be neutral), and a “medium” remaining budget of about 713 GtCO2, as of a few minutes ago, anyway… – and then arbitrarily assign the budget per “individual”, ignoring age or historical emissions and, well, pretty much everything else except personhood.

That budget leaves about 17 years of run-rate left. Which should scare the living sh*t out of anyone younger than 60ish, infuriate anyone under 40 and incense anyone under 25. And, of course, if an “average” person in the US, Australia, Canada, etc. were somehow given this per capita budget of 713 GtCO2 / 7.6 billion ≈ 94 tCO2, they would blow through it in just 5-6 years.
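The arithmetic above is simple enough to check in a few lines. This sketch uses the figures quoted in the comment (713 GtCO2, 42 GtCO2 per year, 7.6 billion people) and an assumed, illustrative personal emission rate of 16 tCO2 per year for a high-emitting country; since 1 Gt is 10^9 t, the equal share comes out in tonnes, not gigatonnes.

```python
REMAINING_BUDGET_GTCO2 = 713.0  # "medium" remaining budget quoted above
ANNUAL_EMISSIONS_GTCO2 = 42.0   # global emissions in 2017, held constant
POPULATION = 7.6e9

years_left = REMAINING_BUDGET_GTCO2 / ANNUAL_EMISSIONS_GTCO2  # about 17 years
per_capita_tco2 = REMAINING_BUDGET_GTCO2 * 1e9 / POPULATION   # 1 Gt = 1e9 t, so about 94 t each

def years_of_personal_budget(annual_tco2_per_person):
    """Years until an equal per-person share runs out at a given personal emission rate."""
    return per_capita_tco2 / annual_tco2_per_person

# 16 tCO2 per person per year is an assumed, illustrative high-emitter rate
print(round(years_left, 1), round(per_capita_tco2, 1), round(years_of_personal_budget(16.0), 1))
```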

Now, whether you reassuringly expand the budget – either by using 1880 instead of pre-industrial or this or that temperature dataset, or even by the “Do you feel lucky? Well, do ya, punk?” methods (NETs, BECCs, 50% instead of 66% odds, climate sensitivity) it still doesn’t make enough difference to make those “personalized” budgets anything other than shocking to any detached observer.

What is most interesting to me is how facilely we get detoured into “but Nic Lewis”, “but BEST”, “but grid parity and energy storage and fusion!”, etc., in the face of this, instead of apprehending what even optimistic budgets imply for us individually.

By the way, I suppose there might be some merit in coding some sort of simple calculator like that – more so to force hi-emitters (especially “old” ones) to face the personal assumptions underlying their personal cognitive dissonance. At least so that they can personally cognitively dissonant better!

Although, as John Sterman pointed out about 15 years ago or more, once you try to communicate beyond “stock versus flow”, you’ve effectively lost the audience. As the reverse of what Bill Murray said “so we’ve got that not going for us, which is nice.”

I raise the personal approach because years ago on Keith Kloor’s blog I tried to do the calculation (in response to M Tobis) and I screwed it up royally (carbon versus CO2 mistake), so I want to undo that mistake.
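Since that carbon-versus-CO2 slip is easy to make, here is a minimal converter, assuming the usual molar-mass ratio (44.01/12.011, about 3.664). For example, the roughly 60 GtC budget difference mentioned in the post is about 220 GtCO2, and a 713 GtCO2 budget is about 195 GtC.

```python
C_TO_CO2 = 44.01 / 12.011  # molar mass of CO2 over molar mass of carbon, about 3.664

def gtc_to_gtco2(gtc):
    """Convert a mass of carbon (GtC) to the equivalent mass of CO2 (GtCO2)."""
    return gtc * C_TO_CO2

def gtco2_to_gtc(gtco2):
    """Convert a mass of CO2 (GtCO2) to the mass of carbon it contains (GtC)."""
    return gtco2 / C_TO_CO2

print(round(gtc_to_gtco2(60.0)), round(gtco2_to_gtc(713.0)))
```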

Anyway, I think it would make a strong impact on people. Maybe some folks will decide the best thing they can do for the planet is to get a snip job. Me? I will prolly burn more than my fair share.

I’d make up for it by reincarnating as a tree.

“That budget leaves about 17 years of run-rate left.”

I’m thinking more like: how many more miles can I drive? or fly? how much more electricity can I use?

how much more meat can I eat?

You know, one of those games where people try to balance the federal budget, but done as a carbon budget.

So, ya you start to have kids play that game ..hmm
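A rough sketch of that kind of calculator, turning a personal tCO2 budget into units of an activity. The function names are hypothetical, and the only emission factor included is for gasoline, assumed here to be about 8.887 kg CO2 per US gallon (approximately the figure the US EPA uses); the 25 mpg car is hypothetical, and flying, electricity or meat would each need their own factor, which you would have to look up.

```python
KG_CO2_PER_GALLON = 8.887  # assumed: approximate CO2 from burning one US gallon of gasoline

def units_for_budget(budget_tco2, kg_co2_per_unit):
    """How many units of an activity (miles, kWh, meals, ...) a tCO2 budget covers."""
    return budget_tco2 * 1000.0 / kg_co2_per_unit

def miles_for_budget(budget_tco2, mpg):
    """Miles of driving a gasoline car with fuel economy `mpg` that a budget covers."""
    return units_for_budget(budget_tco2, KG_CO2_PER_GALLON / mpg)

# e.g. an equal per-person share of roughly 94 tCO2, in a hypothetical 25 mpg car
print(round(miles_for_budget(94.0, 25.0)))
```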

@ATTP,

I don’t get your answer at all, so I am guessing you didn’t get my question. Let me try again.

First, I realize that the outcomes are going to be in the bad, pretty bad, really bad range– this is just academic curiosity about the modeling.

An example of what I’m thinking about:

SST has an effect on the intensity of hurricanes and storms. For those of us near the coasts, this is one of the negative consequences we would like to avoid by holding the change in GMST to, say, 2C. Is there an assumption that this intensity has a linear relationship to the value of GMST?

I’m not talking about “tipping points”; it just seems unlikely to me that producing an average value for the energy in the system accurately reflects the changes in the elements of the system. What made me think of this is the inconsistencies you describe just in calculating the mean– say, if SST is off, that error may have more effect on the prediction of hurricane strength than on the GMST value. Hence my question about the assumptions. Hope this is clearer.

Zebra,

All I’m really suggesting is that we’ve developed with a certain typical climate, which includes how often we will be impacted by severe weather events. Of course, this depends on absolutes, like the mean global surface temperature. What’s of interest is how this will change as we dump more and more CO2 into the atmosphere, which depends more on the resulting change in temperature than on what the actual baseline GMST is. I may still be missing your point, though.

ATTP,

Aaargh, yes, you appear to be missing my point completely! 🙂

When I say GMST, I’m talking exactly about the delta, not the absolute value. That’s why I said “holding the change in GMST to, say, 2C”.

What I’m addressing is the fact that it (the change) is a Mean. We know that the energy in the system is not uniformly distributed, and we know that the effects we are concerned with are caused by the energy content of different elements of the climate system. Perhaps you could read my example about SST and storms again?

In terms of risk in hydrology there is not a linear relationship. Probably more like an exponential.
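One way to see zebra’s point about means, and why a non-linear relationship matters: if some impact grows exponentially with local warming, the average impact across regions is larger than the impact of the average warming (Jensen’s inequality). The sketch assumes a hypothetical response that grows by about 7% per kelvin, the Clausius-Clapeyron rule of thumb for saturation vapour pressure, and two equally weighted regions warming by 1 K and 3 K; it is an illustration, not a hydrological risk model.

```python
import numpy as np

SCALING_PER_K = 1.07  # assumed ~7% increase per kelvin of warming

def response(delta_t):
    """Hypothetical impact multiplier for a local warming `delta_t` (K)."""
    return SCALING_PER_K ** np.asarray(delta_t, dtype=float)

regional_warming = np.array([1.0, 3.0])  # hypothetical, equally weighted regions (K)
mean_warming = regional_warming.mean()   # 2 K

response_to_mean = float(response(mean_warming))
mean_of_responses = float(response(regional_warming).mean())
print(round(response_to_mean, 4), round(mean_of_responses, 4))
```

Both regions have the same mean warming in either case, but the exponential response makes the uneven distribution matter.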

The 2 degree target was a political decision based on what was deemed achievable. I think it is important not to pin this target on scientists but rather on politicians.

Zebra,

Oh, if you mean that there will be regional impacts that will depend on local changes and that are hard to predict if we simply consider global changes in surface temperature, then yes, of course. In a sense, though, they’re related. So, I would still argue that limiting the change in global surface temperature will also limit the local changes and, hence, will limit these local/regional impacts. It still, ultimately, comes down to emissions (which doesn’t, though, imply that understanding the regional impacts isn’t also important). Maybe I’m still missing your point, though.

Are you (zebra) talking about “damages function” specification, a la Martin Weitzman and others? (A notoriously weak aspect of IAMs, by the way.) There is a basic treatment in chapter 3 of “Climate Shock” (Gernot Wagner and Martin Weitzman), especially the section “How much for a degree of warming?”.

But even if you are asking about strictly physical impacts, whether the damages w.r.t. temperature are linear, quadratic or exponential, the answer is very often “we don’t know”. If you consider just the implications of the hypotheses that DeConto & Pollard are working with for ice sheets – “previously underappreciated processes linking atmospheric warming with hydrofracturing of buttressing ice shelves and structural collapse of marine-terminating ice cliffs” (MISI and MICI) – then we are deep, deep into “we really don’t know but plausibly exponential” territory.

Thanks guys. I think HH is making a valid point– the 2C target, and associated “budgets”, is something everyone could accept without needing super-high-resolution in the modeling.

ATTP: You got it, and of course less emissions is desirable whatever the case. But I’m not even talking about any specific geographical result, rather what I think HH means about hydrology in general– more water vapor in the atmosphere forces the temperature change, but may have a greater impact on flooding risks. So, an error on water vapor is a bigger deal than just getting Sensitivity right or wrong.

This just in…

March 5 (UPI) — Scientists have developed new models to better understand how governments can work together to ensure global warming is limited to 1.5 degrees Celsius by 2100.

The different models consider a variety of political, socioeconomic and technological factors, including the impacts of economic inequality, energy demand and regional cooperation. The models considered five different so-called Shared Socioeconomic Pathways, or SSPs.

“A critical value of the paper is the use of the SSPs, which has helped to systematically explore conditions under which such extreme low targets might become attainable,” Keywan Riahi, energy program director at the International Institute for Applied Systems Analysis in Austria, said in a news release.

Models show global warming could be limited to 1.5 degrees Celsius, by Brooks Hays, UPI News, Mar 5, 2018

“I think we can easily stay under the 1.5 degree target if scientists and technologists will get off their backsides and solve the problems that are driving the temperature rise.”

Err – how are scientists and technologists going to solve what is essentially a political and economic problem? Short of waving a magic wand, there’s no way to make CO2 not-a-GHG, and no way to magically sequester CO2 for free.

@Russell

“On a generational scale, the Anthropocene has more to do with human dependence on stable planting seasons, rain and harvest dates than the statistics of overnight temperatures or ocean heat content ”

My two cents (not an expert but fairly well informed ;-)).

Short term – use radioisotopes (atmospheric bomb-test pollution). Radiocarbon workers already use 1950 as the reference date for Before Present. Why re-invent the wheel?

Long-term (thousands of years, if our civilisation survives that long). Use the C isotope excursion. We already use that for PETM, ETMs, Toarcian, Snowball Earths etc. Why re-invent the wheel? We’re changing them one or two orders of magnitude faster than the PETM. Trust me, that will make one helluva sharp Golden Spike. Probably puts it around 2000, as we won’t be able to tell by then exactly when the onset was, only when it sped up big-time.

There is a Working Group looking at it.

Maybe the Anthropocene began in 1965. The time it took for the radioactive signature of early ’60s bomb tests to make it right down into the S hemisphere.

It is a complete waste of money to try controlling the climate. Even if we succeed in removing all human impact, we will have natural variability that will be much warmer and colder than today’s climate, as paleodata show. We must focus on techniques to adapt to climate change, because it will happen no matter what we do. There have always been droughts that reduce crop yield, and this will be the case no matter what. Let’s say they will occur twice as often because of us in the coming 200 years; it won’t be a problem if we spend the trillions of dollars on, for example, techniques to store and desalinate water.

I find it disturbing that people think we will have a stable climate if we reduce CO2. We already have problems today with droughts, fires, floods etc. We can eliminate those problems today already, but instead you want to waste a lot of money, live with these problems for decades/centuries and then fix it when it becomes unbearable. But it’s our children that will pay for these mistakes. Fossil fuels will run out anyway, so the CO2 problem will solve itself. We need to prepare ourselves now for the climate change that will happen even when fossil fuels are depleted.

Peter,

Firstly, the timescales are vastly different. The kind of changes that we could potentially induce in a couple of centuries would normally take thousands of years. Also, the temperatures we could reach will probably be higher than they’ve been for all of human history.

The argument is not that we will have a totally stable climate if we reduce CO2 emissions. The point is that if we want our climate to not continue to change (on top of natural variability) then we need to get CO2 emissions to roughly zero. We would, of course, continue to have a climate that will include various extreme events, but it will be similar to what we’ve experienced before, rather than potentially vastly different.

ATTP,

People are already dying and starving today because of droughts, surges and storms. I say, let’s fix their problems now, at the same time we prepare for the more extreme events.

With your solution people will continue to die and starve even if CO2 is completely reduced.

I think my solution will cost less and help more people, starting now. Although, I admit that this is pure speculation.

Peter,

firstly, why should reducing carbon emissions be in conflict with adaptation?

Secondly, why should anyone pay any attention to your, admitted with admirable self awareness, pure speculation?

Plus what AT said on timescales.

Verytallguy,

Because we have a limited amount of time and money.

Most of these discussions are based on speculation, you talk about a 2C target, but the range is likely 1.5-4.5C sensitivity, but might be higher or lower. That is speculation. You don’t need to care about anything I say, I’m fine with that.

Steven Mosher:

“Me? I will prolly burn more than my fair share; I’d make up for by re incarnating as a tree.”

That won’t work.

First, trees are carbon-neutral.

Second, a Mosher-karma-tree would be cut down, fashioned into paper, and used to print copies of ‘The CRUTape Letters’, which would immediately burst into flames and release the sequestered CO2.

You could, however, come back as an artificial tree.

Peter Langlee:

“It is a complete waste of money to try controlling the climate.”

Too late.

We’ve already been geoengineering the carbon cycle for a century.

People are just starting to take notice.

Humans ARE in control of the Earth’s future climate, like it or not.

Peter,

Well, yes, and it’s already clear that we’re not spending it wisely.

Peter Langlee:

Huh? We had a relatively stable climate for thousands of years. Then we started digging up fossil carbon and burning it for energy. Global climate is destabilized as a result. If we stop transferring fossil carbon to the atmosphere, there’s no reason to think the climate won’t re-stabilize, albeit at a couple of degrees higher GMST, for another few thousand years.

vtg asked, why should reducing carbon emissions be in conflict with adaptation? You replied: “Because we have a limited amount of time and money.”

Well, that’s a reliable truism. But ‘we’ are still left with a wide range of potential policy responses. Are you sure you’ve thought this through? Do facts matter? How do you know you’re not fooling yourself?

Peter,

You appear to regard policy as a binary “all adaption” or “all mitigation”. It’s worth asking why – no other prioritization decisions are treated in this way.

And the 1.5-4.5 range is not speculation. It’s what the evidence tells us.


“You appear to regard policy as a binary “all adaption” or “all mitigation”. It’s worth asking why”

Because librulz?

@BBD

“Maybe the Anthropocene began in 1965. The time it took for the radioactive signature of early ’60s bomb tests to make it right down into the S hemisphere.”

I’d still go with 1950. Continents are what get preserved over geological time, and most land is in the NH. The Ir anomaly at the K-T (K-Pg) boundary is unusual in its global distribution. And even there it needs to be in the right age and facies, so entire countries don’t have it. The palaeo-dated spikes are defined in one place and rely on correlation elsewhere. Plus not re-inventing the wheel.

Long-term the C13 excursion is better. It will still be detectable when the Sun goes red giant, long after most radioisotopes have decayed.

Steven Mosher:

Thanks for your nice comments! I’ve thought about personal carbon budgets a fair amount and I apply one to myself but didn’t do it very formally. I’d like to hear your thoughts about how to approach it first. I’ve run into a few apparent dead ends and a new perspective would be great. I’m happy to share my personal experience too, but that’s possibly a series of blog posts in length…

Re the Anthropocene:

https://www.nature.com/articles/541289b

Excerpts (original is not paywalled; it’s a short note but ATTP, please mod if you think the snips exceed fair use):

IOW to meet the International Commission on Stratigraphy standards, it has to be a golden spike which will be preserved over geological time. Not a mea culpa or hair-shirt description of all the change humans have wrought on the planet.

Entertainingly (given some of the past topics here), it’s a response to a letter bemoaning the exclusion of social scientists and humanities from the discussion (despite one of the authors being on the working group ;-)). Some excerpts from that:

(Hmm, I thought the Anthropocene was going to be nested in the Quaternary, a pretty minor chunk of Earth history)

(Way to miss the point. The AWG is in sync with contemporary (and past) thought in science – objectivity, repeatability, consistency… The question on the table is: does the Anthropocene qualify as a distinct unit of geological time. That’s not answered by narratives, elite, Eurocentric or not. It’s answered by determining whether it qualifies using the same criteria used to delineate other units of geological time. And if it does, by answering the question: where would a geologist place the boundary, looking back from thousands or millions of years hence?)

You go about this the wrong way. First you need to look at what has happened since the 1950s: has the warming caused a net negative effect? Then you look at the regional areas where there has been a negative effect: what would it cost to adapt? How much have you gained in areas where global warming is good?

When you have done this you compare what the cost would be to reduce CO2, with 4.5C sensitivity. Now you can decide what to do. Spend all money on adaption? 50/50 adaption reduction? No adaption? You are diving into this too fast.

Look up FMECA if you are unfamiliar with the concept.

We have 70 years of observations, use that before using models.

Peter,

Thanks for coming here and explaining this to all of us. Why don’t you go and look up the concept of a carbon tax and then get back to us about that?