The Imperial College code, the results from which are thought to have changed the UK government’s coronavirus policy, has been available for a while now on github. Since being made available, it’s received criticism from some quarters, as discussed by Stoat in this post. The main criticism seems to be that if you run the model twice, you don’t get exactly repeatable results.

As Stoat, points out, this could simply be due to parallelisation; when you repeat a simulation the processors won’t necessarily return their results in the same order as before. However, it could also be due to other factors, like not quite using the same random number seed. These simulations are intended to be stochastic. The code uses random numbers to represent the probability of an outcome given some event (for example, a susceptible person contracting the virus if encountering an infected person). Different runs won’t produce precisely the same results, but the general picture should be roughly the same (just like the difference between weather and climate in GCMs).

For a while now I’ve been playing around with the Imperial College code. I should be clear that I’m not an epidemiologist and I haven’t delved into the details of the code. All I’ve been doing is seeing if I can largely reproduce the results they presented in the first paper. The paper gives much more detail about the code than I intend to reproduce here. However, it is an individual-based model in which individuals reside in areas defined by high-resolution population density data. Census data were used to define the age and household distribution size, and contacts with other individuals in the population are made within the household, at school, in the workplace and in the wider community.

I’ve run a whole suite of simulations, the results of which are shown on the right. It shows the critical care beds, per 100000 of the population, occupied under different scenarios. If you’ve downloaded the paper, you should see that this largely reproduces their Figure 2, although I did have to adjust some of the parameters to get a reasonable match. The different scenarios are Do nothing, Case Isolation (CI), Case Isolation plus Household Quarantine (CI + HQ), Case Isolation, Household Quarantine plus Social Distancing of the over 70s (CI + HQ + SD70), and Place Closures (PC). To give a sense of the severity, the UK has just under 10 ICU beds per 100000 of population.

I’ve run a whole suite of simulations, the results of which are shown on the right. It shows the critical care beds, per 100000 of the population, occupied under different scenarios. If you’ve downloaded the paper, you should see that this largely reproduces their Figure 2, although I did have to adjust some of the parameters to get a reasonable match. The different scenarios are Do nothing, Case Isolation (CI), Case Isolation plus Household Quarantine (CI + HQ), Case Isolation, Household Quarantine plus Social Distancing of the over 70s (CI + HQ + SD70), and Place Closures (PC). To give a sense of the severity, the UK has just under 10 ICU beds per 100000 of population.

I’ve also included (dashed line) the scenario where you impose Case Isolation, Place Closure (Schools and Universities) and general Social Distancing for 150 days (which they show in their Figure 3). As you can see, this really suppresses the infection initially, but there is a large second peak when the interventions are lifted. This is what, of course, is concerning people at the moment; will the lifting of the lockdown in some parts of the UK lead to a second wave?

So, I seem to be able to largely reproduced what they presented in the paper. This doesn’t really say anything about the whether or not the results are reasonable respresentations of what might have been expected, but it’s a reasonable basic test. I will add, though, that there are a large number of parameters and I can’t quite work out how to implement the somewhat more dynamic intervention strategies.

Something else I wanted to add is that I’ve also played around with some other codes, including a simple SIR code, a SEIR code, and one that included an age distribution and a contact matrix. Whatever you might think of the Imperial College code, all of the models seem to suggest that without some kind substantive intervention, we would have overrun the health service.

Um…we did over-run the health service. Unless you think that refusing to treat a huge number of ill people was just for fun?

Fair point. Poorly phrased on my part. I was simply trying to stress that whether you trust the Imperial College code, or not, there is little to indicate that we over-reacted.

> Unless you think that refusing to treat a huge number of ill people was just for fun?

You might also consider a shortage of PPE as a measure of being overrun.

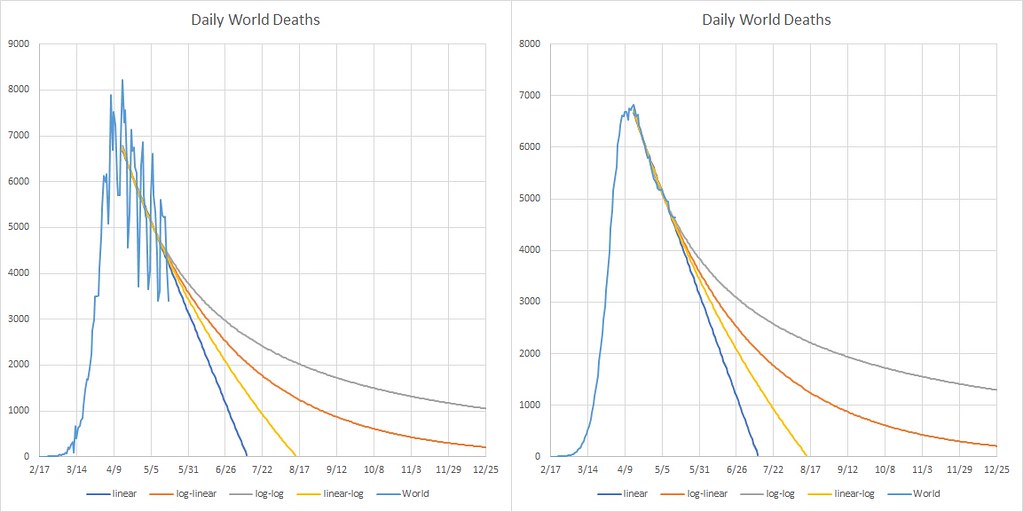

Any way you slice it, the World will not drop below 1000 deaths/day in 2020 …

World = World – CN = EU + US + RoW

RoW = World – CN – EU – US

SA = South America

RHS = x-axis log scale divisions are 30, 60, 90 and 120-days

A 2nd wave in the fall will make the time series look like a double humped camel with a global saddle point above 1000 deaths/day. That is the best prognostication that I can make as of today.

I forgot to mention that those time series are 7-day rolling means. Done so to remove the so-called weekend effects (which is real).

I dug into this non-determinism issue being raised to discredit the model.

First of all, in regard to getting two different results in single-processing mode, it was a bug introduced by the MicroSoft team. There’s really no reason to believe it exists in the original version of the model used by IC to generate the earlier projections used by government.

As so often happens, this bug was introduced when a new feature was added. Generating the network of individuals, households, etc is time consuming, and the developers decided to introduce the option of generating and saving a network without running the simulation, then in the future loading the simulation. This resulted in the pseudo-random number generator being seeded twice. If you generated and then simulated the network in one run, the seeding was only done once. Different results. Hmmm. BUT once saved, every time you run the simulation on the same network you get the same results using the same seed. The developers had never compared the generate-save-restart-load-simulate workflow with the generate-simulate workflow and hadn’t noticed the two scenarios gave different results with the same seed. It was fixed two days after it was reported and diagnosed, but the fallout has not died.

Now, regarding the multi-processing case, given the expense of network generation they don’t serialize the processes to guarantee that each time it is run, individuals are assigned to the exact same households or schools. The assignments do follow the parameter values used to drive the algorithm, so the statistical distribution does fit those. The developers state this is intentional because the time savings is more important to them than guaranteeing reproducibility – after all, you can save a network and then rerun using that same network to your heart’s content (regression testing, debugging, etc).

When run in multi-processing mode they guarantee reproducibility when you simulate the same network with the same number of threads. Again, important for regression testing etc.

Now, I can think of two possibilities here:

1. The developers from MicroSoft who are working on it haven’t actually tested reproducibility under the conditions where they guarantee it and are lying about the fact that they have and, indeed, depend on it. I’ve found no evidence for this.

2. lockdownsceptic doesn’t know what he’s talking about. Having read his commentary, I’ll say this is definitely true in some cases, at least.

dhogaza,

Thanks, that is very useful.

https://twitter.com/nevaudit/status/1258287467133677570

Has anyone read Tommaso Dorigo’s post, starting out:

“First off, let me say I do not wish to sound disrespectful to anybody here, leave alone my colleagues, to most of which goes my full esteem and respect (yes, not all of them, doh). Yet, I feel compelled to write today about a sociological datum I have paid attention to …”

Keith,

No, I haven’t read that. I’m not even sure of the context Do you have a link?

Google search

https://www.science20.com/tommaso_dorigo/the_virus_that_turns_physicists_into_crackpots-246490

Joshua,

Thanks. I think that article makes some pretty good points.

Yup.

With respect to comparing the effects of a “lockdown” in one country to voluntary social distancing in another, (or, I might add, extrapolating a national fatality rate from an infection rate in a non-random sample from one locality that isn’t nationally representative on such basic metrics such as SES and race/ethnicity) ::

>… I saw curves describing data from one country overlaid with other curves describing data from other countries, shifted according to ad-hoc criteria;…

Willard

Actually there really was a bug, as I described above. lockdownsceptic clearly didn’t understand what the bug is when he jumped on it, nor the fact that it had been recently introduced along with the new feature I described, and ignored the fact that it was fixed two days after being diagnosed with the help of the team that reported it. Or that the development team hadn’t noticed because the way they configured and ran the model DID lead to deterministic (reproducible) results. Blah blah.

It’s just FUD, though. It’s the same strategy used to try to discredit models like the NASA GISS Model E. Scream for them to be open sourced. Then scream “the code is unreadable (I don’t think Model E is, though the physics is incomprehensible 🙂 )”, OMIGOD it is written in FORTRAN it must suck!!! etc etc.

Of course everything thus far is unrelated to the MODEL part of the model source code, i.e. the parts that implement the SIR model that moves people through various states, the parts that model the geographic spreading of the disease, the parts that model the pace of infection through households and schools and all that. The parts that correspond to Ferguson’s paper describing how the model works. It’s much easier to say “your variable names suck!” than to address issues of substance.

> OMIGOD it is written in FORTRAN it must suck

Jinx:

https://twitter.com/nevaudit/status/1258554550128173056

If you read back the thread you’ll notice auditors who fail to realize that R (which is as ugly as it can get imo) is a bit newer than they presume.

Requiring bit-level reproducibility for stochastic simulation is a bit unreasonable – in most cases we ought to be more interested in whether the stochastic variability from run-to-run is suitably small that we can place reasonable confidence in any given run.

I did an experiment a few years ago that was basically a low-priority job that could be run when there wasn’t something more important to be done, and it ended up taking a N months to generate the results. The individual simulations took longer than the maximum allowed on the cluster (@5 days), so I designed the system to checkpoint, including saving the state of the random number generators, so in principle it would be possible to re-run all the simulaiions again and get the same numeric answer (I’m not naive enough to think that would hold in practice). This ended up taking quite a bit of work to get just right. But then it occured to me it would be an egregious waste of computer time to re-run all of these simulations for another few months, so what was the point in aiming for bit-level reproducibility for a study that would never be replicated (because of the cost and because stochastic reproducibility would have been acceptable for all practical purposes)?

There is some irony in people preferring R to Fortran as programming languages – they are both fairly horrible in their own peculiar fashions! ;o)

(They both have their uses though)

It was also amusing in that twitter thread some were also suggesting MATLAB as better than Fortran, despite MATLAB (especially back in the 90s) was mostly a front end for a set of highly efficient and reliable library routines written in, errr, Fortran (with some nice graphics)!

dikranmarsupial

“Requiring bit-level reproducibility for stochastic simulation is a bit unreasonable – in most cases we ought to be more interested in whether the stochastic variability from run-to-run is suitably small that we can place reasonable confidence in any given run.”

Well, the issue here was whether running the same executable on the same machine, no parallel processing, same seed, same data would give the same result twice. Which it should, and does. The point is for regression testing – do your changes to the code change the output? Sometimes changes should change the output, after all that’s the point of changing the underlying theoretical model and then implementing those changes. Other changes – say speeding up I/O or the like – shouldn’t.

Obviously this has nothing to do with the stochastic simulation itself, and the developers talk about doing a lot of testing to make sure the model is giving reasonable outputs for a range of parameters, seeds, etc.

But as I said earlier, it’s just FUD. When calls were made to open source the thing, it was obvious the point was to discredit it, just as was attempted when climate model sources were published.

> Requiring bit-level reproducibility for stochastic simulation is a bit unreasonable

It’s at the very least bit-level unreasonable.

I’ll grab my coat.

You can be sure that no Real Programmer would be caught dead writing accounts-receivable programs in COBOL [1], but

[1]: See https://web.mit.edu/humor/Computers/real.programmers

Pfooey! Ed Post was 30 years too late.

My mother was a REAL programmer on the brace of IBM 704s at what was then named Los Alamos Scientific Laboratory. Only assembly code, FORTRAN II wasn’t good enough for blowing up atomic bombs to propell Stan Ulam’s space ship.

Operating systems? What’s that? REAL programmers ran their codes from the front panel after stacking their cards in the hopper.

dhogaza true – my simulations were all compute-bound batch jobs, and methods for speeding it up (e.g. choosing different methods to solve large sets of linear equations) don’t necessarily give bit-level identical results either, so not really typical. I’ve been experimenting with reproducible code for quite a while (the aim is to be able to type “make” and have the computer re-run the experiments, patch the results into the LaTeX source for the paper and then recompile that), but it is much harder to do once the time taken to run the experiments is more than a couple of days.

Fully agree about the FUD, has *any* skeptic ever done *anything* substantive with the source code of a climate model? [I’d genuinely be interested in positive examples]

What about the divide-by-zero error in the agricultural damages in FUND? Skeptics identified the problem and showed that it lead to climate damages being systematically underestimated.

Of course, you meant ‘skeptics’, rather than actual skeptics. And this is not really a ‘climate model’ in any normal sense.

Or in a different field, the ‘fun with Excel’ in Reinhart-Rogoff.

dikranmarsupial

“methods for speeding it up (e.g. choosing different methods to solve large sets of linear equations) don’t necessarily give bit-level identical results either, so not really typical.”

Sure. Change compilers and you might see differences, too. And, back in the day before the IEEE floating point standard was adopted, floating point hardware differences guaranteed bit-level results for floating point operations would aways differ from machine to machine.

And Willard’s comment points out how unfortunate it is that log2(10) is an irrational number, and how annoying bankers are for wanting their pennies to balance 🙂

Willard

FORTRAN had its issues. The first Mariner probe failed because a DO loop of the form

DO 10 I = 1,100

Was accidentally written as an assignment statement of the form

DO 10 I = 1.100

Insignificant spaces, in space …

FORTRAN ignored spaces (should that be present tense???), and this error was probably made by a keypunch operator, not the engineer. Don’t quote me on that. Real programmers always blame the keypunch operator 🙂

“despite MATLAB (especially back in the 90s) was mostly a front end for a set of highly efficient and reliable library routines written in, errr, Fortran (with some nice graphics)!”

most of the heavy duty math in R is just wrapped FORTRAN libraries

( for matrix calcs)

in the end it is quite a bitch, because some things ( Like SVD) will call these old fortran libs

and you get errors # that reference this old legacy Fortan code. so ugly I gave up

thank you for that Joshua

‘As I said at the very beginning, I hope my colleagues understand that here I am not accusing anybody. For I have, many times over during these days of confinement, felt that urge to interpolate data and make sense of the apparent inconsistencies they present. But I resisted it, largely because I knew it would have been a dilettantesque form of entertainment – and I do have a good substitute to data fitting in that compartment, as I spend hours playing online blitz chess, where I am, indeed, a dilettante (although a good one at that). So we are human beings: and we want entertainment, and we find it in weird ways sometimes. Nothing to be too concerned about.”

Every time I start a chart or download covid data I ask myself the same question.

why? why mosher? really, think about hard steven. Why are you doing this?

oh? you’re afraid. And then I realize that no amount of number fiddling will work better than

a mask and hand washing.

And so I have spent my time playing blitz chess again after years.. maybe 18 years since I was at a board.. oh wait it was 9-11 last time I sat down at the board. how long ago was that?

dhogaza indeed – I’ve always thought that “significant birthdays” ought to be 16, 32, 64 and then aim for 128. The years get shorter as you get older, so it sort of makes them equal intervals.

The good bit about R is the libraries, which is the main reason for using it (python is a bit like that, but not as bad).

I’ve got very to using python libraries. I do remember the days when I used to type out subroutines from the Numerical Recipes in Fortran book (I eventually got hold of a disk that had them all on there – still had to copy them directly into the code though).

From the linked crackpot article:

“I saw fits using Gaussian approximations to uncertainties which ignored that the data had completely different sampling distributions”

This sounds pretty much exactly what the nonsense IHME model was doing. Completely ridiculous. These people are supposed to be pros, and yet they pumped out this garbage for weeks (it’s improved recently). I’m afraid I am increasingly coming (rather belatedly) to the realisation that “mathematical epidemiologists” aren’t really mathematicians at all, rather they are biologists who are slightly better at maths than other biologists. Which is a pretty low bar.

James,

Yes, as I think I may have pointed out to you before, I’m finding this quite a tricky situation. I’m a big fan of leaving things to the experts (as that article about physics crackpots is suggesting) but there are some indications that the experts themselves didn’t always do a great job. Of course, that doesn’t necessarily invalidate the point being made in the article 🙂

ATTP – I had a similar experience, but with “Numerical Recipies in [Fortran Brutally Transliterated into] C”, before moving to MATLAB, where I eventually started adding C “mex” files to optimse the code by selecting the best BLAS routines for the operations, rather than letting MATLAB choose them. Then we got a High Performance Computing facility, which meant that I had to spend less time (over-) optimising code and could (in theory) spend more time thinking about the theory and algorithms (in practice, I have been teaching instead).

James Annan

“This sounds pretty much exactly what the nonsense IHME model was doing. Completely ridiculous. These people are supposed to be pros, and yet they pumped out this garbage for weeks (it’s improved recently).”

Their PR machine is still pumping out garbage. That bit has not improved, IMO.

Steven –

> Every time I start a chart or download covid data I ask myself the same question.

why? why mosher? really, think about hard steven. Why are you doing this?

Bingo. Is this just pure inquiry? Or are there other “motivations” as well? Why are you being an armchair epidemiologist?

> oh? you’re afraid.

Sure. I think there are other factors as well. But yah, much does boil down to fear. The need to distract from the reality of mortality. Mortality has become much harder to avoid lately.

What’s ironic is that we wind up being divided by an experience that is so fundamentally common among us.

Don’t know if you saw this:

https://www.theguardian.com/world/2020/may/15/flying-long-haul-during-covid-19-air-travel-has-never-been-stranger

> My mother was a REAL programmer on the brace of IBM 704s at what was then named Los Alamos Scientific Laboratory.

Wonderful.

“Don’t know if you saw this:

https://www.theguardian.com/world/2020/may/15/flying-long-haul-during-covid-19-air-travel-has-never-been-stranger”

ya someone passed that on to me.

This has been the absolute weirdest few days of my life.

In all of it there was this little piece of luck

I was sitting at LAX waiting for the Hotel shuttle ( no cabs no uber) pretty beat.

I got on the shuttle and as we got to the hotel I realized.

I

Left

my

Computer

Bag

At

The

Bus

Stand.

That would be all my phones, passport, everything except my wallet.

Opps. I have never done that in years and years of travel

I paid the driver $100 bucks to race back to the airport.

Since I am typing this you know the ending. We got to the airport and there were 3 flight attendents

standing where I had left my bag. Thankfully they had given it to security.

Whew.

Otherwise, I would be stuck in LA.

If y’all have a chance to watch Midnight Gospel, go for it.

It reminded me:

https://www.mit.edu/~xela/tao.html

Willard

“The Tao gave birth to machine language. Machine language gave birth to the assembler.

The assembler gave birth to the compiler.”

Well I first learned machine language, and began writing an assembler because we didn’t have access to the assembler from the manufacturer.

And then became a compiler writer …

Oh, my. Reading about the company that had to revert back to COBOL because they didn’t understand rounding brings back so many memories.

Numerical Methods is a specialized branch of Computer Science. I’ve seen too many people that are “computer experts” that have no clue. I started learning computer programing (nobody ever finishes) in the punch-card/mainframe days, and we were actually taught about the perils of floating-point arithmetic. Things like:

…so things like “IF (b-a) = 0.2…” probably won’t behave the way you expect. And thinking that “(N/10)*10” will actually be N is a dangerous place to put your brain. Double-precision reduces the problem, but does not remove it.

A quick Google search produced this nice short list:

https://my.eng.utah.edu/~cfurse/ece6340/LECTURE/links/Embarrassments%20due%20to%20rounding%20error.htm

(The stock exchange example was the one I was looking for).

…and that site has a link to the ubiquitous Risks Digest that has been tracking these kinds of boners for decades.

http://catless.ncl.ac.uk/Risks/

Dikranmarsupial:

I was working as a Linux system administrator at Los Alamos National Laboratory when I retired. I didn’t do a lot of programming there, mostly adhoc scripting with Bourne shell, perl and finally python. I wrote a fair amount of Fortran77 and C early in my career, but perl was my most productive language ever since it was introduced, for its string processing. Assembly language was fun when I took a course in it, and felt like talking to the machine in its own language, but I didn’t use it after that.

The computational physicists I worked with at LANL, OTOH, were familiar with Fortran and C, but were happy to use R and Matlab for their diverse add-on functionality, because it saved them a lot of writing what they needed themselves: imagine that! Python immediately became popular because of the easy access to contributed extensions and class libraries. The lab contracted with a private outfit to maintain a python IDE, together with specified modules and extensions. And many of [the scientists – W] found python’s object orientation more intuitive than their earlier procedural approach. TBH, I myself did not. I thought python’s rich pre-written functionality was quite handy, but my own code was all procedural. I eventually decided it might be time to retire 8^)!

David B. Benson:

I grew up in a college town and liked science, so I was inculcated with the Los Alamos legend early. I still like the stories from the first decades, of historic physics achieved by heroic scientists who happened to be building weapons of mass destruction. I’m afraid I was rather disillusioned when I started working there. They’re still working on WMDs, but it’s hard to imagine the present-day LANL producing any Nobel-level physics 8^(. I came to recognize various causes for the lab’s decline from its heyday, to be sure. The computing infrastructure at my arrival was especially underwhelming, although the raw processing power was impressive. Be that as it may, the PhD physicists of my acquaintance were domain specialists, who cared about meeting scientific standards. They didn’t care so much what language or OS REAL programmers used, but went with whatever got in the way of their productivity the least. I for one am a Linux evangelist, so they probably all thought I was a geek. Hey, if the foo shits… 8^}.

I orphaned a “them”. The sentence should be “And many of the scientists found python’s object orientation more intuitive than their earlier procedural approach.”

[Fixed. -W]

Vaguely remember Goldberg’s “What every computer scientist should know about floating-point arithmetic” was a reasonable place to start (doi:10.1145/103162.103163).

I finally got the IC code to run on my iMac. with 32GB. It works fine and is not too slow at all.

Basically you must also

1. Install cMake

2. switch off the parallel processing option in the make file

3. install r package “sp”

4. Fix various host specific paths.

The example “non-intervention” scenario they provide is extremely scary with R0=3 and over 600,000 deaths in the UK over 2.5 months! In his original paper I believe Neil Ferguson actually used R0=2.4 and an IFC of 1% resulting in 500,000 deaths.

There is nothing wrong with FORTRAN. You can write clear and structured code.

However, the code has now all been transferred to C++ 😉

Mal – I’m starting to move to Python, but only reluctantly. The main reason is so that I can give away research toys for others in my field to play with. Python is widely used in machine learning, but mostly because of libraries like SciKit-learn and most of the deep learning tools have python bindings (I have been experimenting with Stan which is a probabilistic programming language with a python interface). I tend to write object-oriented code a fair bit, but I don’t like python’s approach to this (especially the lack of proper encapsulation), but with matplotlib, it is a good second best to MATLAB, with the advantage of being free.

C & C++ are my favourite languages for general programming, but I get to do hardly any of that these days (mostly just answers to the coursework I set). It is a shame that programmers don’t get much exposure to assembly these days, it helps you to have some appreciation of what the computer is likely to do with your code, and having some empathy with the hardware makes you a better high-level language programmer (IMHO). It’s also enjoyable in the same way that a difficult sudoku puzzle is enjoyable.

https://twitter.com/niklasfrykholm/status/1063242674717679621

Clive wrote “There is nothing wrong with FORTRAN.”

I wouldn’t go quite that far!

“You can write clear and structured code.”

agree with that though. Use a programmng language that allows you to program in the style that is best suited to the structure of the problem you are trying to solve. Shoehorning a procedural problem into an object-oriented structure is sometimes a recipe for inefficiency and a less maintainale baroque architecture.

Object Orientated Programming became too much of of a religion.

It’s brilliant for user interfaces and smartphone apps, but fairly irrelevant to scientific FORmula TRANslation 🙂

dhogaza (or anyone else for that matter) –

Would you do me a favor? Andrew rightfully reprimanded David and me for gumming up the recent comments.

I want to respect that, but on the other hand David’s now commenting on a statistics thread and I think that Andrew should have a little background. I was going to post the following and thought that maybe it’s better of someone else does it (It’s still a distraction but maybe less so if I don’t do it?). Anyway, here’s what I was going to post – a few tidbits from David’s past reflections on Andrew. If you post it, don’t forget to let Andrew know that dyp6629 = David Young

————————————-

dpy6629 | April 19, 2020 at 11:29 pm |

Josh, This Gelman is a nothingburger. He admits he’s not an expert on serological testing and that he doesn’t know if the Ioannidis paper is right or not. I think I’m done with your low value references.

and

Gelman looks like someone who likes to hold forth on subjects he is ignorant of such as serologic testing. He then tries to shame other scientists who know much much more than he does. Typical blog thrill seeker whose conclusions can’t be trusted.

Woops. I forgot the time stamp for the 2nd comment…

dpy6629 | April 20, 2020 at 5:15 pm |

Gelman looks like someone who likes to hold forth on subjects he is ignorant of such as serologic testing. He then tries to shame other scientists who know much much more than he does. Typical blog thrill seeker whose conclusions can’t be trusted.

Clive Best:

The “brilliant for user interfaces” is compatible with DM’s “research toys for others in my field to play with”. Python was an immediate hit with guys doing exploratory data visualization with sliders 8^).

In my callow formula-translating days, I said “COBOL is the COmmon Business Oriented Language for common business-oriented people” in self-congratulation. I hereby apologize.

Thanks Willard!

Joshua,

Where is that comment from DPY from?

> Gelman looks like someone who likes to hold forth on subjects he is ignorant of such as serologic testing. He then tries to shame other scientists who know much much more than he does. Typical blog thrill seeker whose conclusions can’t be trusted.

Where has David Young from the Boeing Company (who recently made a white paper about engineering practice disappear from his publications’ page) said that?

If you go to Gelman’s, you’ll see he has a listing in the recent comments thread where he asks Andrew a question.

He also, of course, left a dig after I apologized to Andrew for gumming up the recent comments. Such a classy guy, eh?

The two comments I put up were from Climate Etc., a few threads ago:

Afterwards he did walk it back just a tad:

If anyone is going to drop off David’s comments, please only do it briefly and in the thread where he asked Andrew his question. I don’t want to add anything to the other thread where Andrew asked that the childishness cease.

I’ll also point out that when I criticized the quality of the human subject methodology in the Santa Clara study and said it shouldn’t have passed an IRB review, and that anyone who does human subject science would know that, David first explained that I am not a scientist and then he explained that I post anonymously, and then he explained that he’s been publishing research for 40 years.

I didn’t realize that they do human subject research at Boeing.

David’s gonna David.

“Josh, This Gelman is a nothingburger. ”

ROTFLMAO

Oh, and also, aside from the childishness, I think some folks here might be interested in the discussion up at Andrews about “informative priors.” I recall that was a subject of a discussion here a while back – I think in particular in connection w/r/t Nic’s ability to determine which priors are “objective?” 🙂

James left a comment in his customarily diplomatic tone:

https://statmodeling.stat.columbia.edu/2020/05/17/are-informative-priors-incompatible-with-standards-of-research-integrity-click-to-find-out/#comment-1339344

Mal “The “brilliant for user interfaces” is compatible with DM’s “research toys for others in my field to play with”. Python was an immediate hit with guys doing exploratory data visualization with sliders 8^).”

????

By “research toys” I meant libraries implementing my methods so that other researchers could build on them. No sliders involved (although they are often object oriented as that facilitates their extension/modification by the users).

Re James’ comment – calling it “objective” is even worse (as it is a jargon meaning of “objective” rather than the one in general usage, but that distinction is rarely made by those promoting them).

This is a neat demonstration of a “play with a research tool”. “Build your own zero-emissions energy system”:

https://model.energy/

For those who are bored of armchair epidemiology and looking forward to armchair energy system planning.

Joshua,

Which of Andrew’s threads was the one where he “reprimanded” you and DPY?

“…so things like “IF (b-a) = 0.2…” probably won’t behave the way you expect. And thinking that “(N/10)*10” will actually be N is a dangerous place to put your brain. Double-precision reduces the problem, but does not remove it.”

In over 45 years of Fortran programming. particularly since my default is dp code for most of that time, I have very rarely run into any such issues, as long as you keep your floats over here and your integers over there, when dealing with mixed ops I have always converted integers to floats (well the integers are still there, I just will not do comparisons between data types). I code in baby steps now, in fact all my codes are baby codes, so that I rather quickly run into problems that need corrections. The baby algorithms are mostly in my head now.

I am very wary of those potential issues though. Always.

Anders –

Here:

https://statmodeling.stat.columbia.edu/2020/05/14/so-much-of-academia-is-about-connections-and-reputation-laundering/#comment-1339357

I seriously love David’s parting shot. He steadfastly turns down the chance to show any grace whenever provided an opportunity.

Thanks

Reading that thread, I thought it nice that DPY excused the human failings of the researchers involved in the Stanford study. Based on my past interactions with DPY, that seemed quite out of character. You might think that it’s because the Stanford study produced results that suited DPY’s preferred narrative, but it can’t be that, surely?

I’ll be generous to David and assume that he has no idea what kinds of expectations there are for human subject research. Too bad that he can’t just admit that than insist thst he has some expert perspective on omit by virtue of his background. It just makes him look bad instead of just lacking knowledge.

It will be interesting to see what happens with that “whistleblower” report described at BuzzFeed. Is it really a whistleblower? Is there evidence to support the accusations?

If so, I would hope there will be disciplinary action taken. If not, if they defer to the reputation and esteem of Ioannidis et al., it would be a stain on Stanford.

> Which of Andrew’s threads was the one where he “reprimanded” you and DPY?

This one:

https://statmodeling.stat.columbia.edu/2020/05/14/so-much-of-academia-is-about-connections-and-reputation-laundering/#comment-1339357

Willard,

“Real programmers always blame the keypunch operator”

Real programmers used IBM card punch machines and then fed the cards through a RIOS (Remote Input Output Station)

http://cds.cern.ch/record/1816218

Joshua

“I didn’t realize that they do human subject research at Boeing.”

Boeing 737 MAX … researching how quickly human pilots can react in a crisis situation …

OK, that’s not very nice of me.

I’m trying to imagine what would explain a researcher testing a few thousand participants for antibodies, with a test that gives false positives, and knowing that at least some participants have been told that a positive test could be a passport for going back to work, and then being resistant to informing the participants the implications of a false positive and offering them a follow up test.

To the point where someone else in the research team would withdraw from the publication becsuee of rhe ethical implications.

I mean I get motivated reaoning – but that’s just indefensible in my book. Knowing that some infectious people could be walking around thinking they have been informed that they can’t infect anyone – as in, say, their grandmother or spouse or child?

I guess that puts me into the “data cleaning” bin:

what did you do before enlightenment? chop wood, clean data.

Well, that ‘s much of the point. In the code, (0.4-0.3) and (0.3-0.2) might be expected to be equal, but in base 2 there is no guarantee. In VBA single-precision code in Excel, the results are 0.1 and 0.09999999. You won’t notice it unless you force Excel to show you more decimals than it wants to. (Excel normally uses double-precision internally. Fewer problems, but not zero.

And (N/10)*N might be equal to N for sufficiently large values of N, after rounding, but if N is an integer, and N/10 is converted to floating point, and the result*10 is stored back into an integer variable with truncation, you can bet your sweet bippy that something will eventually go wrong.

I was also taught that when doing mixed mode, make sure that you make the decisions where to cast to a new type. When I and J are integers (there’s that FORTRAN train of thought), and the (maybe ancient) compiler is happy doing integer math without forcing to floating point, I=1 and J=10 and K=I/J will result in K=0.Even with I/J is cast to float, truncation can give you a smaller result that you expect.

Don’t trust the compiler to cover your @$$.

Well, we have a winner:

While the arcania of the various features and flaws of FORTRAN, PYTHON, R MathLab are undoubtedly of importance in all this, (whats wrong with FORTH? ) it somewhat misses the purpose to which this argument has been put.

All the major newspapers in the UK are now running the story that the computer modelling used is WRONG, with the clear implication that the lockdown, social distancing, and testing are all a malicious imposition of government control that is both unnecessary and economically disastrous.

The ‘SUN’ as usual has the most dismissive headline –

“-‘IT’S A MESS’ Professor Pantsdown’s ‘Stay At Home’ lockdown advice based on badly written and unreliable computer code, experts say”

The issue has ceased to be about the quality of the code, or the modelling, but this is now being used to Attucks and change the policy response to the pandemic for reason other than scientific quibbles about computer language or variations in stochastic models.

As with climate change, the attacks on models have little to do with computational purity, but are a proxy battle against the inevitable policy conclusions that can be derived from the best scientific knowledge we have of the issue

“whats wrong with FORTH?”

great language for a small computer (like my first computer – the Jupiter Ace)

Treating it as a Fermi problem (“how many piano tuners are there in New York?”), just take fifteen minutes to knock up a spreadsheet, and you’ll soon find out you’ll overwhelm the health service within weeks.*

Box was right. All models are wrong. But Ferguson’s was useful.

*there are also a slew of studies that point out almost every spreadsheet on the planet contains errors. We still use them.

@-dikran

“great language for a small computer (like my first computer – the Jupiter Ace)”

Yeah, I wrote a sound-to-light show program in FORTH on a Sinclair Spectrum back in the day…

easier than machine code/assembler (grin)

The articles (not sure they are really in ‘all the papers’ but certainly in most of the populist and right-wing ones) are a pretty broad-spectrum hit-job on Ferguson, really, although some of them lead with the code stuff. Actually the Daily Mail one even includes more relevant/reasonable criticisms about the choice of parameters used in the models.

This is a general challenge for any domain which involves computer models, which is basically all of them. It is now standard practice to open-source anything with public-policy implications, and I think nobody really expects or wants that to change. But any bug, or even a deviation from someone’s idea of ‘coding standards’, no matter how minor, will be presented by some as a fatal flaw discrediting the work. Won’t get that much traction unless it significantly changes the results.

But the most effective ‘big hit’ against Ferguson is still going to be his personal contravention of lockdown. I mean, obviously, if you are in the public gaze, you are going to be subject to personal attacks, so your life isn’t going to be the same. You are now a minor celebrity: deal with it. Maybe the peak science bodies should do more to provide media and public image advice/services for heavily exposed scientists?

Don’t think much of this is a problem that scientists can solve. You need a press corps with sufficient science literacy and integrity to be able to correctly identify which issues are consequential, rather than one that makes a habit out of skewed hit pieces. Don’t think that will happen any time soon, given the slow apocalypse traditional media is undergoing.

Ben,

One problem (I think) is that modellers don’t always do a great job of highlighting the limitations of their model. In the case of a model trying to represent something complex, like how our various interactions might spread a virus, it’s probably impossible to capture all the complexity. So, it seems unlikely that any model results will accurately represent what happens. However, this doesn’t necessarily matter if you’re trying to check if we might swamp the healthcare system (it mostly matters whether or not we will end up over-capacity, not whether it’s10 times over, or 50 times over). Similarly, a model might not be able to precisely predict the outcome, but it can still probably tell you something of how various different strategies might change the outcome. If there are indications that we might end up many times over-capacity if we do nothing, we would want a strategy that substantially reduces this, rather than one that would only have a modest effect.

Yes, even though this has little bearing on the science, I still find it annoying. He must have been aware that he would be in the spotlight.

“…for any domain which involves computer models…”

A minor nit to pick, but it always bugs me when people talk about “computer models”. Science makes use of mathematical models. Computers are just one way of solving these. Calculus and Algebra exist independently of computers. Analytical solutions exist for many cases of mathematical models, independently of computers. Computers just happen to be a convenient and fast way of finding solutions to some problems, but the concepts are inherently mathematical.

If the contrarians’ bloviating of “you can’t trust computer models” were expressed as “you can’t trust mathematics”, then it would be obvious how empty their rhetoric is.

You can’t make predictions without a model, even if there is no computer and no maths either, there is still some conceptual model involved. If you don’t have that, it isn’t a prediction, it is merely a guess.

In my experience, most contrarians bloviating about other peoples computer models become rather reticent when you ask them about the model underpinning their predictions.

Yes, even a “descriptive model” is a model…. a collection of words describing how you think something behaves.

Mathematics is just a well-defined collection of such descriptive models, with standard symbols (it’s own language) using well-defined concepts with well-defined rules on how those concepts link together. “Y = mX+b” is just shorthand for “Variable Y appears to be related to variable X in that any increase in X is matched by an increase in Y in proportion m, and when X is zero, Y still has a non-zero value of b”.

The definition of “model” that I tend to use is “an abstract representation of reality”. There is always a level of abstraction. It is never an exact duplicate of reality. Yet such abstractions can be useful if they do a good job of approximating some portion of reality. (There is probably a shorter, niftier, well-recognized descriptive model for that concept. 🙂 )

Even this comment does not completely express the true nature of my thoughts – it uses the English language to provide you with a descriptive model of my thoughts.

Is this a model, or just Excel curve fitting at its so-called finest?

I’d go with curve fitting. Taking the most conservative (slowest decay), which is the power law decay (log-log), I’d expect half a million deaths in the July time frame (same applies to the exponential decay (log-normal).

Really not much of a prediction. All fits may decay slower than as shown.

‘log-normal’ should be ‘log-linear’ per graph labels

It’s simple, really. No model, no measurement. No model, no data. No model, no implementation of any theory whatsoever. Unuseable science.

And yet:

https://twitter.com/nevaudit/status/1261291311161884682

Just for the sake of amusement…

I didn’t let Andrew know of David’s previous reflections on Andrew’s contributions. And after Andrew noted that David was rather “rude,” David respondied.

David Young says:

May 18, 2020 at 12:05 am

Andrew, Joshua is quote mining a long comment thread with lots of other comments. What I meant to say is that your post is in my opinion a nothingburger. I don’t know about the statistics part but it seems to me the critical issue is the serologic testing. In that comment thread it is also stated that you are a world class statistician, a comment I agree with. I apologize for the thrill seeker comment.

Joshua has been heckling me on blogs for several years. He shows up with very repetitious and often unscientific comments and always brings up his motivated reasoning ad hominem. It just gets very frustrating to have every interaction taken down the same road into internet diagnoses of my “reasoning” and state of mind. In addition its unethical.

++++++++++++++++++

Ah, irony. Where would the blogosphere be without irony?.

Ooops. I “did” let Andrew know…not “didn’t.”

On perhaps a positive note – that perhaps presents a different dynamic than the attacks on scientists dynamic:

I think a few folks who read here are from Oz? I’m curious if any of them have any thoughts?

“whats wrong with FORTH?”

Dunno. I was waiting for FIFTH to come out.

Still waiting 😉

Yeah, sure, what the “skeptics” are attacking is really details of the code implementing a mathematical model. Because it turns out that whining about the appearance of code is a lot easier than actual real verification and validation or understanding maths.

ATTP: I agree that the limitations of the models are not well described in popular media. But I don’t think it is realistic to expect a careful conversation with any significant amount of nuance. And I certainly don’t think this is the ‘main problem’.

‘We predict between X and Y deaths if no policy measures are taken’ is about as good as it is going to get. I guess you could have ‘this will require 50x as many hospital beds as we have’ as well.

Something like ‘the model uses a continuum model that represents viral spread on a coarse-grained single-pool population level, rather than simulating the details of individual interactions, or considering inhomogeneous subgroups’ seems several steps too far to me.

I guess you could have ‘these are highly idealised models of disease spread through the population, but have been effective at capturing the broad features of previous epidemics’.

Ben,

Indeed, but I wonder if the model limitations are even made clear to the policy makers.

Joshua,

One of the issues I have with DPY (amongst a number) is that he seems to expect people to respect his expertise while regularly dismissing the expertise of others (Gavin Schmidt and Andrew Gelman being two prominent examples). It’s not only that this is rather rude, it also suggests a rather a severe case of motivated reasoning, which would then suggest that one should be cautious of taking anything he says seriously (IMO, at least).

Anders –

Agreed. His apparent disregard for, or inability to recognize, his obviously hypocritical complaints about ad hominems also suggests a severe case of motivated reasoning.

I left a comment at Andrew’s:

More than one link gets you in moderation at Andrew’s.

But srsly, we all should give it a rest. David’s gonna David.

Willard –

> But srsly, we all should give it a rest.

I agree. Done.

“Indeed, but I wonder if the model limitations are even made clear to the policy makers.”

Well, having worked in government for the last 25 years, and having seen some policy shops in action, the policy wonks frequently don’t have the time to listen, and don’t necessarily have the technical skills to understand the limitations. The really bad policy wonks have drunk the kool-aid and honestly think they can learn everything they need to know to advise the policy makers, after only a few hours of “talking to the right people”.

The good policy wonks will have a strong background in the subjects they are asked to advise on. The bad ones think that changing areas/departments every few years is a sign of broad experience and knowledge, and is required for a successful career. (i.e., my idea of bad is their idea of good.)

Welcome to “Yes, Minister”.

“Yes, Minister” was the first thing I thought of, too…

AFAICT, the initial idea that it would be a bad idea to intervene too early was an actual error of judgement, though, rather than communication.

I have got it running

I’m Australian and the Federal Govt hasn’t changed it’s ideology. They have long been anti-China and blandly racist; this was just an opportunity to shine… Cutting off flights from China was a key part of the response.

Then the State Govts did their part so we can’t travel between States without going into quarantine.

Australia doesn’t have the ‘individualism’ that seems apparent in the US, our history is more about helping each other (as long as you’re not black or Asian) through the tough times, so there is a streak of collectivism. We worship events (like Gallipoli, Tobruk, The Kokoda Trail) that were tough and where we all had to work together to succeed or fail (like Gallipoli) rather than heroic individuals (we tend to mock heroes), so it wasn’t hard to get people to work together.

The Federal Govt will use this to pursue their agenda of watering down labour laws and the stimulus will support their big-business mates… Same same really.

Australia also did very well during and after the GFC because the Labor Govt (the more Socialist one) spent big on infrastructure programs and handed out cash. Although the Conservatives mocked them and ridiculed the programs it was pretty clear they worked, so in this worse situation there’s no way they couldn’t follow suit.

Hmm. In the beginning I was rather surprised at De Blasio’s get it done approach

and heartened by Cuomo’s approach to data driven decision.

However, De Blasio has gone mad and watching Cuomo’s team explain needlessly complex metrics has been a disappointment.

Looks like NY will employ Imperial College. watch the guy .

yuck.

meanwhile,

feet on the gound

https://wwwnc.cdc.gov/eid/article/26/8/20-0633_article

I think part of the leveling that started in April is due to the fact April is warmer than March. That would mean the worry about the reopening soon causing a 2nd wave may be all for nought. If so, the fall could be a disaster with nation behaving recklessly with a virus that has its mojo back.

COVID-LAB: MAPPING COVID-19 IN YOUR COMMUNITY

A successful virus type is able to replicate and then transmit. So if too virulent the host goes to hospital, breaking the transmission.

Examples include the viruses causing the so-called common cold. Just enough to keep going.

My amateur opinion.

Hey ATTP, would you care to output a zoomed-in view of what was happening in the early stages of the run, say up to mid or late march, in terms of cases and deaths? Would be interesting to see how it compares to reality…

James,

Sure. I’ve put two figures below. Unless I’ve messed up, these are from the sample parameter files they provided using R0 = 3.0. Some of what they presented in the papers was for R0 = 2.4. I’ve plotted cumulative deaths and then infections, both for 100 days, starting on 01 Jan (i.e., ending around 09 April). In both cases it’s for scenarios where no interventions were introduced.

Let me know if there is anything else you’d like. I’m certainly not yet completely familiar with all the parameters in the code, but I can give it a try.

James,

Do you have any idea what is going on with this Imperial model: they have a ‘country-specific lockdown factor’ that does most of the work, but I’m struggling to explain how it worked for Sweden:

https://mrc-ide.github.io/covid19estimates/#/details/Sweden

I’m wondering if the country-specific results on the web are produced using the country-specific stan code.

what’s illuminating is some of the critics complain about the model being built for “Flu”

this is an example i have seen on a “skeptic” website

“The model itself does not withstand independent scrutiny and is based on some deeply flawed assumptions, namely that it’s based on the spread of a flu like virus”

I think these idiots mentally picture a “model” a bit like a model aircraft or model boat

“The model itself does not withstand independent scrutiny and is based on some deeply flawed assumptions, namely that it’s based on a model of a Sopwith Camel not a Messerschmitt BF 109”

the modellers have literally built a physical model of a Sopwith Camel – doh

i don’t think they have the mental cognitive strategies to internally compute what a computer model is and what it isn’t

Thanks ATTP, I was hoping for something visible:-) how about log scale so we can see the rate? Putting real data on would be good too 🙂

James,

Now you’re asking a lot 🙂 Let me see what I can do.

Seeing as you’re here James, it would be intriguing to see what your model now calculates R for Sweden. Any chance of running that? My assumption is R~1 as deaths are stable or very slightly declining?

@Ben

That is a different IC “model”. It is some mainly R code which tries to derive some Baysian statistical inference from the actual case data.

https://github.com/ImperialCollegeLondon/covid19model/tree/master/stan-models

It is indeed very confusing .

Clive: yes, I guess I should have mentioned that the Imperial model I linked is not the model in the OP. I don’t think the model itself is too complicated, but I’m struggling to understand the results…

This looks suspiciously good. Of course, it assumes no interventions (which started on around day 83). I have a telecon starting in 15 minutes, but will try to look at this some more later. Black points is the data from here.

David, this virus has little or no selection pressure favouring less lethal forms.

Death rates are minuscule among the population that will breed the next generation of hosts. And its long asymptomatic but infective period and high reproduction ratio means that even those who are sick enough to stay at home undirected won’t slow it down. Doubling rates for deaths (as a proxy for infection 2-3 weeks earlier) were down to 2.5 days in the UK before lockdown, despite its current lethality. It would need a “hopeful monster” mutation that made it less harmful without impacting transmission, rather than a chain of small mutations each favouring a slightly less lethal form.

Something like Ebola, with a 50% death rate across generations, would be a completely different matter.

This is what I get if I include the basic interventions. PC = place closures. SD = social distancing. CI = case isolation. HQ = household quarantine.

Finally, this is what I get if I consider cumulative infections. The black dots are, again, from here and almost certainly undercount the total number of infections. The model would suggest that a few million people in the UK have already been infected. This would be ~5% of the population which is – as far as I’m aware – consistent with testing that has been done elsewhere.

Dave_Geologist

“Something like Ebola, with a 50% death rate across generations, would be a completely different matter.”

Ebola’s a wildcard because the bodies themselves are highly infectious … and in fact burial practices were a major factor in its spread and a cultural barrier that had to be overcome to help knock it down. The 50% death rate just increased the odds of coming into contact with bodily fluids (let’s face it, Ebola’s symptoms are gross) while preparing a body for burial, etc.

In the case of respiratory diseases, bodies don’t breath, so a 50% death rate would be a different matter, and indeed MERS, with a death rate of about 36% in humans, has a basic reproduction rate of less than 1. Except it doesn’t kill camels, who just get a snotty nose so once the mutated form capable of infecting humans became established in camels, oh well, things sucked.

So for David Benson … things aren’t as simple as they might seem on the surface.

Of course I find wanting to maintain the burial practices of one’s culture a lot more defensible than the urge to maintain our cultural practice of assembling in large groups waving AR-15s and proclaiming the desire to use force to stop government from implementing policies meant to save lives, but that’s just me.

ATTP

That’s very cool and does suggest that the underprediction of 7K-20K UK deaths with interventions was perhaps due to their use of an R0=2.4 rather than any fatal structural issues with their agent-based SIR model.

That’s interesting ATTP though it’s clearly not the simulation they presented in March. I wonder how much has changed apart from R….they said in the paper it was initialised to have the same deaths to March 14. Maybe I should try to get it running – is it easy? I have a macbook pro laptop.

How do the interventions cause a change before they are introduced, I wonder? Is that just a random seed thing?

James,

Yes, there are a large number of parameters and I’m not convinced that how they’re set in the publicly available version is the same as was used in the model results they presented in March.

It is pretty straightforward. However, I’m running on a cluster which I think has all of the necessary compilers installed by default. As a rough guide, it’s taking about 20 minutes using 24 cores. So, probably a few hours on a mac (depending on how many cores you have). I do know of someone who was trying to run it on their macbook and ran into memory problems.

Yes, I think it must be this. The interventions should be doing nothing until after day 84.

James Annan

“That’s interesting ATTP though it’s clearly not the simulation they presented in March. I wonder how much has changed apart from R….”

When MicroSoft first took charge, moved to C++, using OpenMP, etc they did validate against the original model outputs. However it’s not clear if that original model was exactly the same as the one used to present information to the UK government back in March, i.e. the IC team might’ve been working on improvements between then and when MS got involved.

Currently, the model is definitely changing. For example this commit:

“Added Death from influenza-like-illness (ILI). Previously could only die from Severe Acute Respiratory Illness (SARI) or Critical. ” Comforting to know we can die in three ways now 🙂

Ferguson has been doing some tweaking in portions of the model, as have some other members of the team. So it’s a moving target. What you see in the repository is what they’re using in their active work consulting with governments, judging from comments they’ve made.

So the closest you can get to the model’s state back in March would be to use git to grab the initial files used to create the repository, but it’s not clear how close that would be.

vtg sez …

“Seeing as you’re here James, it would be intriguing to see what your model now calculates R for Sweden. Any chance of running that? My assumption is R~1 as deaths are stable or very slightly declining?”

.https://www.folkhalsomyndigheten.se/contentassets/4b4dd8c7e15d48d2be744248794d1438/riket-skattning-av-effektiva-reproduktionsnumret-2020-05-15.pdf

See Figure 2 for R (in Swedish, but correctly looks to be ~0.9)

OK something is being parsed so that it inserts a big empty space,

riket-skattning-av-effektiva-reproduktionsnumret-2020-05-15.pdf

From here …

folkhalsomyndigheten.se/smittskydd-beredskap/utbrott/aktuella-utbrott/covid-19/analys-och-prognoser/

[Added a dot in front of the PDF line; it seems that sometimes WP wants to embed it and gets stuck. -W]

Tack så mycket Everett!

Vi skriva på svenska i dag.

Ioannidis meta-analysis if IFR. Shocker, he finds it Lowe than other analyses.

https://www.medrxiv.org/content/10.1101/2020.05.13.20101253v1

This part is unreal:

> For the other studies, healthy volunteer bias may lead to underestimating seroprevalence and this is likely to have been the case in at least one case (the Santa Clara study)19 where wealthy healthy people were rapidly interested to be recruited when the recruiting Facebook ad was

released. The design of the study anticipated correction with adjustment of the sampling weights by zip code, gender, and ethnicity, but it is likely that healthy volunteer bias may still have led to some underestimation of seroprevalence. Conversely, attracting individuals who might have been

concerned of having been infected (e.g. because they had symptoms) may lead to overestimation of seroprevalence in surveys.

So he ignores some of the ways that the Santa Clara study might have been an overestimation because of the recruitment processes.

But then he doubles down to ignore the many reason why that Santa Clara would be an overestimate – do to higher median income, lower minority population, etc. with respect to a broader exptrapolation beyond Santa Clara.

Another example of John’s thumb on the scale:

>Locations with high burdens of nursing home deaths may have high IFR estimates, but the IFR would still be very low among non-elderly, non-debilitated people.

He ignores the uncertainty in the other direction; i.e., does Santa Clara have *fewer* long term care facility residents than what would be nationally representative? He consistently looks at the uncertainties only in one direction.

As someone who has long respected Ioannidis, I am having a hard time understanding how poorly he’s approaching the uncertainties in all of this.

More on the Santa Clara team:

https://twitter.com/CT_Bergstrom/status/1262854364512677888?s=20

“So he ignores some of the ways that the Santa Clara study might have been an overestimation because of the recruitment processes.”

I am not a fan of his but there is a fundamental problem in designing the collection

of any serology data.

Take something as simple as where the testing is and the requirement that people drive to the testing center.

Imagine that mass transit is a source of transmission. That, if you ride the bus your chance of catching a case is 2x that of someone driving. We dont know.

Now we know that race plays a role in mortality and gender does, so we can adjust for these factors in our sampling. We can sample across factors we know play a role.

age, race, gender, etc. But there are holes in our understanding of all the factors that lead to

infection, asymptomatic presentation, and outcomes.

Like in Korea, all of our early cases were young people. The testing was skewed waay young.

Bottom line. I would hate to have to design any sampling strategy for these tests. Whatever you

do is going to be subject to second guessing and unknowns.

In new york it appears they will test 280,000. 140K of essential workers, and 140K of people

who sign up.

My bet is they wont collect enough profile data or behavioral data and definitely wont publish it.

meanwhile, where is the CDC?

Model suggests masks effective ;o)

Rats [sic] link should have been

https://fightcovid19.hku.hk/hku-hamster-research-shows-masks-effective-in-preventing-covid-19-transmission/

Steven –

I agree the rush to characterize seroprevalence has people way out in front of the data. The data are what they are. The problem is trying to extrapolate from those data as Ioannidis is doing.

He then rationalizes the data to match his priors, as in saying that Santa Clara should be an underestimate – how has he quantified his speculated reasons for less prevalence their as compared to relevant factors such as race and ethnicity and SES, and their associated factors such as access to healthcare, comorbidities, likelihood of being an essential worker, prevalence of multi-generational households (and exposing older people to infection) yes, rate of use of public transportation, etc.? It doesn’t appear he has quantified any of that. Just speculated away without providing evidence.

I think it’s bizarre.

FYI:

https://phys.org/news/2020-05-covid-extreme-scientists.html

Hard to extrapolate.

David Benson

Well, there’s nothing new in the phys.org piece that I can see.

They build a SEIR model and found it’s non-linear and highly sensitive to R and the length of the incubation period. You don’t have to build a model to learn that, a few minutes in google would suffice. And it’s well known that the quality of the available data isn’t great. It’s a bit like an epidemiologist rushing up excitedly to a physicist and saying “I just experienced this cool thing called ‘gravity’, have you heard of it?”.

This conclusion is a bit odd, though:

“”Preliminary results show that implementing lockdown measures when infections are in a full exponential growth phase poses serious limitations for their success,” said Faranda.”

When else would you? In the very beginning when there are relatively few people infected, it’s in “full exponential growth phase”. After a few doublings it’s still in “full exponential growth phase”. When exactly is it a good time to knock Rt down below one, if not then? Are they suggesting we sit back and wait for herd immunity to kick in rather than lock down?

“meanwhile, where is the CDC?”

Same old question, same old, and all to obvious, answer. Two words. Small Hands.

You haven’t been watching the 4th season of The Apprentice: White House Edition wherein Small Hands plays with himself on both sides of the table.

OT: a bit of climate with your SARS reading:

Potential Factors Influencing Repeated SARS Outbreaks in China

Meanwhile…in Sweden…

I’m not particularly critical of Sweden’s approach. It’s one of the variety of bad choices.

But when you look at the metric of deaths per capita, you will note that the rate of decline is Sweden considerably lower than in many other countries, such as Switzerland, the Netherlands, even France, and many, many other countries. Sweden is rising up the chart at a consistent pace.

In fact, Sweden has had the higher per capita deaths in Europe over the last seven days. Even higher than the UK.

Cross-country comparisons are of limited value. And the reasons for Sweden’s relatively slower decline than elsewhere are complicated. And there are necessarily tradeoffs in all of this, but you can’t even evaluate the tradeoffs if your vision is limited by your ideological blinders.

Audits never end:

Willard –

Assuming what will happen with the rapid peer-review…..can wrong preprints be considered to be “wrong” published research?

> can wrong preprints be considered to be “wrong” published research?

Preprints are not published.

I think about that often.

“Preprints are not published.”

They’re just publicized …

pre-prints are fine, it is the press releases that are the problem.

(IMHO)

Also from Sweden: a month ago, about 5% of people had been infected.

https://www.folkhalsomyndigheten.se/nyheter-och-press/nyhetsarkiv/2020/maj/forsta-resultaten-fran-pagaende-undersokning-av-antikroppar-for-covid-19-virus/

I just ran Ferguson’s model for Sweden

Clive,

How did you set the parameters for Sweden?

“IMPORTANT: The parameter files are provided as a sample only and do not necessarily reflect runs used in published papers.”

Now on Clive’s page, regarding the run for the UK with lockdown results using R=3.0 which he thinks is too high, well, because … he says:

“If you look at the bottom red curve which shows excess deaths directly related to COVID-19 then you can see that the lockdown predictions more or less agree with the outcomes. Does that mean that Neil Ferguson really did call the right shot?

Probably he did, yet still today we have a fundamental lack of knowledge of the real-time numbers of infected and recovered persons in the UK.”

So it works well for the UK case, suggesting that maybe it’s not a model flaw causing the results for Sweden to be so off. I’ve seen no indication that Sweden has been working with the IC group so I’m guessing that no one, except Clive, cares about the model run results for that country.

“This current obsession with R is actually misleading government policy because R will naturally change during any epidemic. R will always reach 1 in coincidence with the peak in cases and then fall rapidly towards zero.”

Well, yes, R will always be 1 at the peak. It doesn’t appear that Clive understands that without intervention, that happens when herd immunity kicks in, i.e. lots of cases and lots of deaths. The obsession (as he calls it) with R is because the goal is to get control over the thing without reaching that very high level of infections and deaths.

And he goes on:

“At some point the costs of continuous stop-go lockdowns based on fear alone will become unbearable. We will have to learn to live with this virus.”

Based on fear alone?

“How did you set the parameters for Sweden?”

I would assume:

./run_sample.py Sweden

But note the caveat about the parameter files …

dhogaza,

Yes, I realise that it has admin files for Sweden, but the available parameter files appear to be for the UK, the US and Nigeria.

ATTP

Right, for the US and Nigeria it uses those param files, everything else uses “preUK_R0=2.0.txt”

ATTP

So a reasonable assumption would be that they’re only working on production projections for the US, Nigeria, and the UK.

I used “run_sample.py Sweden” However I think there is definitely a bug because the intervention scenario comes back as “Sweden_PC7_CI_HQ_SD_R0=3.0.avNE.age.xls” but it is still using the UK population size !

This is what it should look like (IMHO)

dhogaza,

Yes, that’s my understanding too. So, that may impact why Sweden’s results look a bit odd.

I don’t know if anyone else noticed, but James Annan got a mention in George Monbiot’s latest column for highlighting that starting the lockdown a week earlier would have substantially reduced the number of deaths.

I posted to Twitter a set of IC model runs which considered lockdown starting one week earlier, or two weeks. I deleted it when James pointed out that the earlier lockdowns had more deaths early on than the later lockdowns. I thought this might because I’d messed up the initial start times between the different runs, but I get the same kind of result when I try to fix that. I’m not quite sure what’s going on, but it could be that closing schools and universities then leads to some extra contact in other environments (the model does assume that there are enhanced household and community contacts when schools and universities close). Anyway, the figure I produced is below. It certainly shows quite a substantial reduction if intervention had started a week earlier, but maybe not quite as much as James suggests.

This is interesting.

“Bayesian adjustment for preferential testing in estimating the COVID-19 infection fatality rate:

https://arxiv.org/abs/2005.08459

Very mathy.

But seems they only looked at likelihood of sampling reflecting a disproportion with respect to infected people wanting to get tested.

So much other important unrepresentativeness (e.g., variables highly predictive of health outcomes like SES and race/ethnicity) that basically make most of these seroprevalence studies worthless, in my non-expert opinion (that plus $2.50 woukd have gotten you a cup of coffee pre-shutdown)

Anders –

> I’m not quite sure what’s going on, but it could be that closing schools and universities then leads to some extra contact in other environments (the model does assume that there are enhanced household and community contacts when schools and universities close).

That’s interesting.

@dhogaza

Thanks ! I think you are right !

Is there any documentation as how to set up the parameter files ?

Josua,

It’s one of the parameters in the parameter file. I don’t know if the assumption about enhanced contact in the household/community if schools/universities close is reasonable, or not.

Clive,

Other than the brief instructions on github, I don’t think so. I have had trouble trying to work it out. I’ve worked some of it out, but I still can’t work out how to trigger interventions on and off, for example.

ATTP,

This seems to happen in all scenarios at the beginning of a lockdown even their sample run.

It doesn’t make sense to me either.

Anders –

It certainly was my reaction when I first heard that they were sending university students home – that they would just spread virus to their families. . But when I thought about it more, I thought that just leaving students in student housing would maybe be even worse long-term.

The again, if it makes intuitive sense to me, that’s probably an indication that it’s wrong. 🙂

I’m wondering if the model is automatically rescaling to match data at a certain date. I guess the llog-scale graphs would show this, if that is what is happening. In other words, the pre-intervention curves should match each other.

Joshua,

There is an age dependence, so maybe the model suggests that leaving students at school/university leads to fewer deaths, even if it doesn’t impact the overall number of infections.

Clive,

Yes, even if you look in the Table in the Report 9 that they produced, it also shows some oddities. I think it may be that there are assumptions about contacts once the intervention start that can lead to some counter-intuitive results. These parameters may be wrong, of course.

Ben,

No, even the log-scale graphs don’t match. It could be that I’ve made some change that somehow changes when the infection is initialised.

Anders –

> There is an age dependence, so maybe the model suggests that leaving students at school/university leads to fewer deaths, even if it doesn’t impact the overall number of infections.

Except it’s unrealistic, imo, to think that you can keep them segregated, long term. They would destroy the student housing before moving out into the community (says someone who has rented houses to students).

That’s what so many of the rightwingers miss with the whole “just protect the old people and stop stealing my freedom ” rhetoric. That’s also unrealistic. Especially for older people in poorer communities, only the least because they’re more likely to live in multi-generational households.

“Joshua,

There is an age dependence, so maybe the model suggests that leaving students at school/university leads to fewer deaths, even if it doesn’t impact the overall number of infections.”

some of the models have “mixing” matrices that “capture” how much/often old people mix/contact

with young people.

As a lover of models I have to say I think they are being misused at this point.

I dont think you can use them to fine tune policy. well you can use them, just not convinced it

will beat trial and error.

to put it bluntly. Some communities will open beaches, some will open for walking only,

some will drag you from the water if you swim, and some will keep them closed.

None of it is based on any science or data analysis whatsoever. People will use

models as cover for whatever they want to do.

I too like models: