There’s an interesting post on RealClimate that discusses fallacies in how we discuss extreme weather events. It’s well worth a read. It discusses what one might expect in terms of trends in extreme weather events, and it points out – quite correctly in my view – that physics also tells us something about what we might expect. It also makes an analogy with loaded dice. The basic point is that even a loaded die will give what appears to be the expected result if only a small number of tosses are considered. So, just because you don’t see anything suspicious doesn’t immediately mean the dice aren’t loaded.
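To put a rough number on the small-sample point, here is a minimal Python sketch. It assumes a hypothetical die that lands on six 25% of the time instead of 1/6, and a simple one-sided binomial test on the count of sixes at the 5% level. Neither the loading nor the test comes from the RealClimate post; they are illustrative choices.

```python
from math import comb


def binom_tail(n, p, k):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def power_to_detect(n, p_fair=1 / 6, p_loaded=0.25, alpha=0.05):
    """Probability that n tosses of the loaded die are flagged as loaded.

    The rejection threshold is the smallest count of sixes whose probability
    under a fair die is at most alpha; we then ask how often the loaded die
    (hypothetically landing on six with probability p_loaded) reaches it.
    """
    threshold = next(k for k in range(n + 2) if binom_tail(n, p_fair, k) <= alpha)
    return binom_tail(n, p_loaded, threshold)


for n_tosses in (10, 50, 200, 500):
    print(n_tosses, round(power_to_detect(n_tosses), 3))
```

With ten tosses, the loaded die gets flagged less than one time in ten; it takes a few hundred tosses before the test catches it almost every time.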

Roger Pielke Jr, who works in this general research area, commented to say,

Hi Guys, Thanks for your interest in our work. We went into that smokey bar and did some math on those dice. You can see it here:

which is a little odd in that, apart from the update a couple of days ago, the original post doesn’t appear to mention Roger Pielke Jr’s work. The “see it here” refers to a paper, Emergence timescales for detection of anthropogenic climate change in US tropical cyclone loss data, by Ryan P Crompton, Roger A Pielke Jr and K John McAneney. The paper concludes with:

This study has investigated the impact of the Bender et al. Atlantic storm projections on US tropical cyclone economic losses. The emergence timescale of these anthropogenic climate change signals in normalized losses was found to be between 120 and 550 years. The 18-model ensemble-based signal emerges in 260 years.

My understanding of what Roger is suggesting with his comment is that this paper shows that, even if the dice are loaded, an anthropogenic signal will only be seen in US tropical cyclone losses in about 200 years. Roger finishes his comment with:

The math is easy, and we’d be happy to re-run with other assumptions. Or you can replicate easy enough.

So, I did. I spent my weekend painting the entrance hall in my house and working out how to replicate this study.

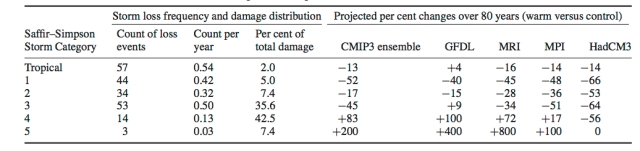

The basic data is in Table 1 of Crompton et al. (2011), which I include below. It shows the different Tropical Cyclone categories, the number of landfalling events in each category between 1900 and 2005, the percentage of total damage associated with each category, and the projected change in each category over the next 80 years, as determined by different models. Here, I’m going to consider only the CMIP3 ensemble.

The model in Crompton et al. (which I’m trying to replicate here) works by determining, for each year (starting in 2005), whether or not a Tropical Cyclone in each category occurs. The likelihood of an event occurring also changes with time, by assuming a linear trend based on the projected changes from the models (here, I’m only considering the CMIP3 model ensemble). The biggest – and maybe only – difference between what I’ve done and what’s done in Crompton et al. (2011) is that they use a random number generator based on a Poisson distribution to determine the number of events in each category every year, while I’m simply using a uniform random number generator. Their approach allows more than one event in a given category per year, while I simply assume that a landfalling event occurs if the random number is less than the annual count (events per year) for that category.
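As a rough illustration of the two ways of drawing events, here is a minimal Python sketch. The per-category counts and projected changes are hypothetical placeholders (not the Table 1 values); the 105-year baseline and the 80-year projection horizon come from the text. The Poisson draw uses Knuth's multiplication method, because Python's standard `random` module has no Poisson sampler.

```python
import math
import random

# Hypothetical inputs, for illustration only (NOT the Table 1 values):
# landfalls per category over 1900-2005, and an assumed fractional change
# in each category's rate over the next 80 years.
BASE_COUNTS = {1: 60, 2: 35, 3: 50, 4: 15, 5: 3}
FRAC_CHANGE_80YR = {1: -0.5, 2: -0.3, 3: -0.4, 4: 0.8, 5: 1.0}
YEARS_OBSERVED = 105  # 1900-2005


def annual_rate(cat, years_after_2005):
    """Expected landfalls per year, trended linearly and clipped at zero."""
    base = BASE_COUNTS[cat] / YEARS_OBSERVED
    rate = base * (1.0 + FRAC_CHANGE_80YR[cat] * years_after_2005 / 80.0)
    return max(rate, 0.0)


def bernoulli_events(rate, rng):
    """Uniform draw: at most one event per year, occurring if the draw < rate."""
    return 1 if rng.random() < rate else 0


def poisson_events(rate, rng):
    """Poisson draw (Knuth's method): can return 0, 1, 2, ... events per year."""
    limit = math.exp(-rate)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def simulate_year(years_after_2005, rng, use_poisson=False):
    """Number of landfalls in each category for one simulated year."""
    draw = poisson_events if use_poisson else bernoulli_events
    return {cat: draw(annual_rate(cat, years_after_2005), rng) for cat in BASE_COUNTS}


rng = random.Random(2005)
print(simulate_year(0, rng), simulate_year(100, rng, use_poisson=True))
```

Both draws give the same expected number of events per year for rates below 1; the difference is that only the Poisson version can produce two or more landfalls of one category in a single year.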

The next step is to associate a loss with each landfalling event. This comes from the tables in the Appendix of this paper, which give a loss for each event during the period 1900-2005, normalised to 2005 values. If an event of a given category occurs in the model run, one of the historical losses for that category is selected at random. This way we can build up a time series of possible future losses, which I’ve done as an accumulated loss. This differs slightly from Crompton et al. (2011), since their time series was (I think) annual loss, but I think the two are essentially consistent. The figure below is an example of a time series from one of my model runs. The 1900 – 2005 data are actual normalised losses, plotted at decade intervals. From 2005 onwards, the values are generated as described above.
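The resampling step can be sketched in Python as follows. The historical losses here are hypothetical placeholders (not the appendix values), and the starting total stands in for whatever the accumulated 1900-2005 loss is; both are assumptions for illustration.

```python
import random

# Hypothetical normalised losses (billions of 2005 US dollars) for past
# landfalls in each category; placeholders, not the appendix values.
HISTORICAL_LOSSES = {
    1: [0.1, 0.4, 1.2],
    2: [0.5, 2.0, 3.1],
    3: [1.5, 6.0, 12.0],
    4: [5.0, 20.0, 45.0],
    5: [25.0, 60.0, 90.0],
}


def accumulate_losses(yearly_counts, start_total, rng, losses=HISTORICAL_LOSSES):
    """Running (accumulated) loss for a sequence of simulated years.

    yearly_counts: one dict per year mapping category -> number of landfalls.
    Each landfall is assigned a loss drawn at random, with replacement, from
    the historical losses for the same category.
    """
    total = start_total
    series = []
    for counts in yearly_counts:
        for cat, n_events in counts.items():
            for _ in range(n_events):
                total += rng.choice(losses[cat])
        series.append(total)
    return series


rng = random.Random(42)
years = [{3: 1}, {}, {4: 1, 1: 1}]
print(accumulate_losses(years, start_total=1000.0, rng=rng))
```

Drawing with replacement means a future run can repeat the same historical storm loss several times, which is what "randomly selected if an event of the same category occurs" implies.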

The process is repeated 10000 times to produce 10000 possible future loss time series. The next step is to determine when the trend will emerge. Crompton et al. (2011) do this by determining the time at which 95% of the models have a positive trend. Since my time series is accumulated loss, I’ve instead determined the time at which 95% of the models have a trend that is greater than the 1900-2005 trend.
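A minimal Python sketch of this 95% criterion, assuming each run is an accumulated-loss series on a common list of years, and that each run's trend is an ordinary least-squares slope fitted from the first simulated year up to the candidate year and compared with the 1900-2005 slope. That fitting window and the 10-year minimum are assumptions made for this sketch.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def ensemble_emergence_year(runs, years, baseline_slope, fraction=0.95, min_years=10):
    """First year at which at least `fraction` of runs have a trend above baseline.

    runs: list of accumulated-loss series, one value per entry in `years`.
    The trend for each run is fitted from the first simulated year up to the
    candidate year; `min_years` avoids fitting trends to just a few points.
    Returns None if the fraction is never reached.
    """
    for end in range(min_years, len(years) + 1):
        xs = years[:end]
        above = sum(ols_slope(xs, run[:end]) > baseline_slope for run in runs)
        if above / len(runs) >= fraction:
            return years[end - 1]
    return None
```

This answers "when have almost all runs crossed the line?", which is a different question from "when does a typical run cross it?".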

Crompton et al.’s analysis gives 2265 (i.e., 260 years from 2005); I get 2195. That’s a little different, but broadly consistent, and the difference may simply be because I didn’t use random numbers from a Poisson distribution to determine the number of events per year. So, given that the analyses are slightly different and I haven’t spent a great deal of time on this, I would argue that the results broadly agree. The emergence timescale of anthropogenic climate change signals in normalized losses will be around 200 years from now. So, Roger Pielke Jr is right.

Or is he? What this analysis is illustrating, I think, is the time at which virtually all models show an increased trend. So, yes, this is the time at which we would almost certainly see an increased trend (assuming the assumptions are appropriate), but it doesn’t tell you how likely it is to see an increased trend at an earlier time. Clearly, if 95% of models show an increased trend by 2200, some of these should show an increased trend before 2200. This is actually fairly straightforward to determine. To do this, I take my 10000 models and determine, for each model, the time at which the trend is greater than the 1900-2005 trend and is statistically inconsistent with it (at the 2σ level). I also insist that this persists (i.e., it can’t just be some blip in the time series). For each model I therefore have a year at which a statistically significant increased trend emerges, and I can average these and determine the standard deviation. I get 2102 ± 56. This seems consistent with the earlier analysis. From this, the time by which about 95% of models would show a statistically significant increased trend would be 2102 + 2×56 = 2214.
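The per-model test can be sketched in Python as follows. It assumes an ordinary least-squares fit with its textbook standard error, plus a hypothetical 10-year persistence requirement and a hypothetical 10-year minimum fitting window (the post doesn't give either number). Residuals of an accumulated series are strongly autocorrelated, so this simple standard error is likely too small; treat the sketch as an illustration of the logic rather than a careful significance test.

```python
import math
import statistics


def slope_and_se(xs, ys):
    """OLS slope and its standard error (textbook formula, no autocorrelation correction)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    intercept = mean_y - slope * mean_x
    rss = sum((y - intercept - slope * x) ** 2 for x, y in zip(xs, ys))
    return slope, math.sqrt(rss / (n - 2) / sxx)


def detection_year(years, losses, baseline_slope, persist=10, min_years=10):
    """First year the trend exceeds the baseline by more than 2 sigma and stays there.

    Returns None if the condition never holds for `persist` consecutive years.
    """
    streak_start, streak = None, 0
    for end in range(min_years, len(years) + 1):
        slope, se = slope_and_se(years[:end], losses[:end])
        if slope - 2.0 * se > baseline_slope:
            if streak == 0:
                streak_start = years[end - 1]
            streak += 1
            if streak >= persist:
                return streak_start
        else:
            streak = 0
    return None


def summarise(detection_years):
    """Mean and sample standard deviation of per-run detection years."""
    return statistics.mean(detection_years), statistics.stdev(detection_years)


mean_year, sd_year = 2102, 56  # values reported in the text
print(mean_year + 2 * sd_year)  # 2214
```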

This result seems much more nuanced than that presented in Crompton et al. (2011). It may well be more than 200 years before we’re virtually certain to be able to detect an increased trend in normalised losses, but there’s a 50% chance that it will occur before 2100 and about a 15% chance that it will occur before 2045. I would certainly argue (assuming I haven’t made some silly mistake) that this is relevant. Surely it’s not simply when we’re virtually certain to detect an increased trend, but also how likely such an increased trend is in the coming decades.
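The 50% and 15% figures can be checked with a normal approximation. This minimal Python sketch assumes the per-model detection years are roughly normally distributed with the 2102 ± 56 quoted above; `statistics.NormalDist` is in the standard library from Python 3.8.

```python
from statistics import NormalDist

# Assumes per-run detection years are roughly normal with the mean and
# standard deviation reported above (2102 +/- 56).
detection = NormalDist(mu=2102, sigma=56)

p_before_2100 = detection.cdf(2100)
p_before_2045 = detection.cdf(2045)
print(f"P(before 2100) ~ {p_before_2100:.2f}")
print(f"P(before 2045) ~ {p_before_2045:.2f}")
```

This gives roughly 0.49 and 0.15, in line with the numbers in the text.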

There’s also more we can do. The analysis here considers total losses. For the period 1900-2005, about half the losses come from category 4 and 5 storms and the other half from category 3 and weaker storms. What if we repeat the analysis, but only consider certain categories? The figure below shows an example of a simulation where I’ve considered only category 4 and 5 storms (diamonds) and one where I’ve considered only category 3 and weaker storms (crosses). The 2005 data point is the actual loss for each subset at that time. This illustrates something that should be fairly obvious from the table I included above. The models suggest that category 4 and 5 storms should increase in frequency, while the weaker storms decrease in frequency. Therefore, with time, more of the losses will come from the more extreme storms.
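Restricting the analysis to a subset of categories amounts to tagging each simulated loss with its category and accumulating the two groups separately. A minimal Python sketch, using hypothetical simulated events:

```python
def split_losses(events):
    """Split (category, loss) pairs into category <= 3 and category 4-5 totals."""
    weak = sum(loss for cat, loss in events if cat <= 3)
    extreme = sum(loss for cat, loss in events if cat >= 4)
    return weak, extreme


def extreme_share(events):
    """Fraction of total loss coming from category 4 and 5 landfalls."""
    weak, extreme = split_losses(events)
    total = weak + extreme
    return extreme / total if total else 0.0


# Hypothetical simulated landfalls: (category, normalised loss in $bn).
simulated = [(1, 0.4), (3, 6.0), (4, 20.0), (5, 60.0), (2, 2.0)]
print(split_losses(simulated), round(extreme_share(simulated), 2))
```

Tracking the extreme share over time is one simple way to see the shift in where the losses come from, independent of whether the total changes.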

If I repeat the analysis but consider only certain categories, then we would be virtually certain to detect a change in trend for the category 3 and weaker storms by 2136, and for the category 4 and 5 storms by 2130. If I consider the time for each model at which the trend will be statistically inconsistent with the 1900 – 2005 trend, I get 2036 ± 40 for the category 3 and weaker events, and 2071 ± 33 for the category 4 and 5 events. This would seem to suggest that even if we can’t detect a change in the total loss trend for 200 years or more, we could detect a change in how the losses are distributed almost 100 years earlier than this. There is also a 50% chance that we could detect such a change by the mid 21st century.

I should say that I’ve found this quite interesting. I think I’ve roughly reproduced the Crompton et al. (2011) results but, as usual, if I’ve made some kind of blunder, feel free to let me know. All the math was certainly straightforward and all the information/data was easy enough to access. I agree with the basic result that, given the assumptions, an increased trend in normalised losses will only be virtually certain to have appeared sometime after 2200. However, I do think that the same analysis suggests that there is a 50% chance that a statistically significant increased trend in total normalised losses will be detectable within the next 100 years. I also think that the same analysis suggests that we will be able to detect changes in the distribution of the losses within the 21st century. Even if the total normalised losses are essentially unchanged for the next 200 years, I do think that a world in which the losses are roughly equally distributed between weaker (category 3 and below) and extreme (category 4 and 5) events, as in the 20th century, is not the same as one in which most of the losses are due to the more extreme events.

I should add that I mainly did this out of interest and because, if I am going to comment on someone else’s work, I should at least try to understand it. Although I can see why understanding possible future trends in normalised losses might be policy relevant, if you really want to understand how tropical cyclones are likely to evolve, you should consider data associated with the actual events themselves, and not focus on loss or damage. Given that this has got rather long, and possibly somewhat convoluted, I would recommend reading Kerry Emanuel’s articles in 538 and a very good one on understanding the tail risk.

As an FYI, Junior is quotemining you on Twitter, “So, Roger Pielke Jr is right”

TB,

Really? So he’s read it. Interesting. I did wonder about that particular sentence. Maybe I should have been more careful in how I phrased it, but if he means “almost certain to see an emergent trend” then – given the assumptions and models – he is right. There’s more to it, though, as the rest of the post attempts to illustrate.

Note that KE and others think the models are probably failing to capture a very large future increase in TCs, since they believe such a change is required for the much-increased poleward heat transport in a mid-Pliocene-like climate. Indeed, what basis is there really for the model assumption that an increasing tendency toward TC formation will be more or less smoothly balanced by increasing wind shear on into the indefinite future?

The models are loaded with assumptions like that. It makes me nervous.

Pielke is, and he omitted this part: “There is also a 50% chance that we could detect such a change by the mid 21st century.”

Funny, that.

“Maybe I should have been more careful in how I phrased it, but if he means “almost certain to see an emergent trend” then – given the assumptions and models – he is right.”

Roger is a climate warrior (although he likes to pretend he isn’t by employing a thin veil of plausible deniability). As such, he (at least sometimes) resorts to rhetorical tricks that distract from a meaningful scientific exchange. Quoting you like he did, without addressing your overall point, is par for the course, and changing your wording would not alter his approach or the outcome of his approach to your analysis.

Your analysis stands on its own. Too bad that Roger hasn’t (at least as of yet) stepped up to the plate to engage in a good faith response.

This is only about US tropical cyclone loss, so is there any way to combine analyses for lots of different types of events globally? Would it be easier to detect a trend emerging earlier from combined data than just for losses from one type of weather event in one region?

What others said. For the record:

Exactly, Opatrick. Looking at one relatively small region is misleading enough, reducing the view to landfalling-only makes it more so, looking at just the trends in insured losses is even worse, and normalizing it with GDP is the icing on the cake. This is research with a clear agenda. Others may be (and are) interested in doing the sort of work you mention, but not RP Jr.

Re a combined index, NOAA NCDC has something for the U.S., but I don’t know if that’s being attempted globally. I suspect the data gaps would be too widespread. A combined index for all of the regions that do have good observations sounds possible, but I don’t recall hearing about one.

Anders, I’d want to know more about your random number generator and how it was seeded. (I’d also want to know more about Pielke’s.) They can be particularly problematic with data sets as small as the ones you’re using.

A long time ago… I was verifying a frequency hopping system for the FCC, and short time sets (under 40k hops) produced a variety of entertaining patterns.

I don’t suspect anything is wrong… I’m just curious.

AOM,

Mine was seeded from the computer clock. I think it is a straightforward random number generator that returns a number between 0 and 1, evenly distributed. I did do a few runs and seemed to get the same result. What Crompton et al. (2011) use is one that’s based on a Poisson distribution. The Poisson parameter is the count per year, and the random number generator then returns (I think) 0, 1, 2, and so on. This means that it can select more than 1 event in each category per year, which my method can’t (I looked into setting up one like that used in Crompton et al. but, what with painting and things, I just didn’t get around to it). I think (and the paper mentions this too) this produces a bit more scatter and may explain why I got 2195 instead of 2265.
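Why the Poisson draw adds scatter can be seen from the moments of the annual count. A minimal Python sketch, assuming a constant annual rate no greater than 1: both methods give the same mean number of events per year, but the uniform-draw (Bernoulli) count has variance rate * (1 - rate), while the Poisson count has variance equal to the rate, and only the Poisson version allows two or more events in a year. The 0.5 rate in the example is hypothetical.

```python
import math


def bernoulli_moments(rate):
    """Mean and variance of a 0/1 annual count that is 1 when a uniform draw < rate."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("a single uniform draw needs 0 <= rate <= 1")
    return rate, rate * (1.0 - rate)


def poisson_moments(rate):
    """Mean and variance of a Poisson annual count: both equal the rate."""
    return rate, rate


def poisson_prob_two_or_more(rate):
    """P(N >= 2) for N ~ Poisson(rate): the case a single uniform draw can't produce."""
    return 1.0 - math.exp(-rate) * (1.0 + rate)


rate = 0.5  # hypothetical events per year
print(bernoulli_moments(rate), poisson_moments(rate), round(poisson_prob_two_or_more(rate), 3))
```

For rates well below 1, as for the rarer categories, the two variances are close, so the extra scatter mostly comes from the more frequent categories.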

I think there are many other assumptions that can have a more significant effect. The assumption of linear trends in terms of changes in event rate extrapolated for hundreds of years seems a little unphysical – especially as (for the CMIP3 ensemble) there are then no landfalling category 3 or category 1 events beyond 180 and 150 years respectively. This seems somewhat odd. Do we really expect a future in which virtually all landfalling Tropical Cyclones are category 4s and 5s?
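Assuming a category's rate is trended linearly as r0 * (1 + f * t / 80), with f the projected fractional change over 80 years, the rate reaches zero at t = -80 / f for any f < 0. A minimal Python sketch of that arithmetic. It also inverts the relation to show which 80-year declines would produce the 180- and 150-year cut-offs mentioned above; that inversion relies on the assumed linear form and hasn't been checked against Table 1.

```python
import math


def years_until_zero(frac_change_80yr):
    """Years until r0 * (1 + f * t / 80) reaches zero; infinite if f >= 0."""
    if frac_change_80yr >= 0:
        return math.inf
    return -80.0 / frac_change_80yr


def implied_80yr_change(years_to_zero):
    """80-year fractional change f that makes the linear rate vanish at years_to_zero."""
    return -80.0 / years_to_zero


for years in (180, 150):
    print(years, round(implied_80yr_change(years), 3))
```

Any decline of this size, extrapolated linearly, eventually drives the rate to zero, which is why the long-horizon results depend so heavily on the linear assumption.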

The method’s also quite sensitive to the assumed 20th century rates. I initially used slightly different values (I forgot it was 105 years, not 100) and that made a 50 year difference. Also, there were 3 category 5 landfalling events between 1900 and 2005. What if there had been 4? I suppose some kind of Poisson error could be included. The scatter in the losses for each category is also very large. What about uncertainties in the projected changes in the models?
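A quick Python check of how much these baseline choices move the category 5 rate, using the three category 5 landfalls mentioned above and a simple sqrt(N) Poisson error. That error is a crude approximation for such a small count and is an assumption of this sketch.

```python
import math


def rate_per_year(count, years):
    """Landfalls per year over the observation period."""
    return count / years


def poisson_rate_range(count, years):
    """Rough 1-sigma range on the rate, taking sqrt(count) as the error on the count."""
    err = math.sqrt(count)
    return (count - err) / years, (count + err) / years


print(rate_per_year(3, 105), rate_per_year(3, 100))  # 105 vs 100 years
print(rate_per_year(4, 105))                         # one more category 5 landfall
print(poisson_rate_range(3, 105))
```

Dividing by 100 instead of 105 raises every rate by 5%, while a single extra category 5 landfall raises that rate by a third and still sits well inside the rough Poisson range.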

I considered trying to see how these assumptions changed the results, but the post was getting rather long and convoluted as it was.

Thanks… (I’m just commenting on that which I know well. As long as you verified its stats, I’m good.)

thingsbreak says: “As an FYI, Junior is quotemining you on Twitter, ‘So, Roger Pielke Jr is right’”

RPJ also retweeted this tweet:

But not the one before:

Interesting Judith Curry would write.

I liked Kerry Emanuel’s alternative to the more abstract loaded dice: imagine you know that there are twice as many bears in a forest; would you wait until the death toll has increased statistically significantly?

Physics is important, you do not always have to wait for statistics.

Here is Pielke’s tweet on this post.

https://twitter.com/RogerPielkeJr/status/451061638880169984

He seems to have forgotten that people can reply to your tweet. 🙂

Maybe I’m missing something in all of this but does it really make much difference to the need for mitigation whether we see a trend in 50 years or 100 years or 200 years? If there is going to be a trend at some stage then doesn’t this emphasise the need for mitigation now? I’m not sure what Roger’s point is. If it’s that we’ll all be dead in 200 years so we needn’t be concerned about it now, then I disagree. We should care about people of the future. There’s intergenerational equity at stake here. Even economists recognise that future generations have interests.

“Looking at one relatively small region is misleading enough, reducing the view to landfalling-only makes it more so, looking at just the trends in insured losses is even worse, and normalizing it with GDP is the icing on the cake. This is research with a clear agenda. ”

Steve Bloom nails it perfectly as far as I’m concerned. Forget bears in the woods. It’s about having the answer you want already baked into the cake 😉

Rachel,

Sure, I would tend to agree. The point I was trying to make though (as you probably got) is that the result that 95% of models show an increased trend within 260 years, is not the same as “it will take 260 years before we will be able to detect an increased trend” – which seems to be how some have interpreted this result. Therefore, arguing that we should not base our policies on Tropical Cyclone losses because there will be no detectable increase for over 200 years is, in my view, the incorrect conclusion. There is a non-negligible chance that we will be able to detect an increased trend within the next 100 years and, within the next few decades, we will see (assuming the models and assumptions have merit) an increasing fraction of the losses coming from the more extreme events.

Of course, if one wants to still argue that we shouldn’t base any policies on increasing Tropical Cyclone losses that’s fine, but basing that argument on the claim that we almost certainly won’t see an increase in loss trends for over 200 years is – I think – incorrect.

> a little odd in that, apart from the update a couple of days ago, the original post doesn’t appear to mention Roger Pielke Jr’s work

It’s not odd at all. The original post was obviously in response to RP, and the update merely confirms that.

Enter the discount rate, Rachel.

William,

Sure, one could conclude that and I guess the update does. I just found it odd that someone would assume that a post that doesn’t mention them illustrates an interest in their work, but maybe it was indeed obvious.

Pingback: Evidence of absence versus absence of evidence | Climate Etc.

It is really amazing, Curry copies Junior:

“And Then There’s Physics

And Then There’s Physics has a relevant post entitled Emergence timescale for trends in U.S. tropical cyclone loss data. While not directly germane to the points I want to make, the article is worth reading and concludes

The emergence timescale of anthropogenic climate change signals in normalized losses will be around 200 years from now. So, Roger Pielke Jr is right.”

For those familiar with the interactions between the good folks at Real Climate and RPJr, it was obvious that the post was motivated by RPJr’s 538 piece.

ATTP, you should really go over to Curry’s and post your actual position in detail. It really wouldn’t hurt to point out that RPJr quotemined you, stripping out any nuance in your evaluation. Might be rude to point out that Judy copied RPJr without bothering to read your post, but what the heck, you shouldn’t let them get away with it.

AndThen,

The point I was trying to make though (as you probably got) is that the result that 95% of models show an increased trend within 260 years, is not the same as “it will take 260 years before we will be able to detect an increased trend” – which seems to be how some have interpreted this result.

Yes, sorry. I wasn’t really commenting on your point but rather trying to understand Roger’s. I agree with dhogaza though and think it probably worthwhile to put a halt to the spread of misunderstanding which seems to be stemming from your post.

Pielke’s Tweet is quite revealing, in that it illustrates that all he cares about is his own reputation. If you say he’s right, that’s all he cares about, and he’s going to advertise it so everybody knows you’ve said he’s right.

Curry, well, who knows what the heck goes on in her head. She just used your post for the quote mine “Pielke was right”. I guess she couldn’t find anyone to actually defend him. The rest of her post basically just throws physics out the window (or more accurately, shoves it down the Uncertainty Monster’s throat). Normally I’d shake my head in amazement, but I think we’ve all come to expect this sort of nonsense from Curry.

Rachel: “We should care about people of the future.”

I think you’re missing what appears to be an implicit policy assumption of some, which is that if you screw things up badly enough there won’t be people in the future, so the problem goes away.

On the plus side, by failing to read (or at least understand) the OP, Pielke and Curry have inadvertently pointed their followers towards a post they would normally have preferred they didn’t read.

You can exploit this in future by posting devastating rebuttals of their ideas but finding some way to embed the line “Pielke was right” or “Curry was right” into the post — preferably on a line by itself, to reduce the risk of them accidentally seeing what the rest of the post has to say — so they’ll advertise it for you.

On the original topic, and the flaws already mentioned (e.g. it’s going to take longer to detect the signal if you narrow down the geographic region, etc.) the biggest one to me is what you intimated at the end, Anders: focussing on “loss” or “damage” adds in an unnecessary extra variable that’s even harder to predict than physics (namely, our ability to adapt over time so that previously devastating events become something we can take in our stride) and, if I recall correctly from the last time I looked at this, the costs of those adaptations aren’t taken into account.

Many decades ago I lived in an area prone to tropical cyclones. The building codes in those areas were necessarily much more stringent than those in areas not prone to cyclones, just as the building codes in earthquake-prone areas are. As a consequence, the losses caused by a storm that would be devastating in an area not prone to such storms were relatively minor, but the costs incurred by those building codes that make it possible to cope with those storms are significant.

If the number and severity of events increases, but we are able to contain losses by adapting our building codes to cope with them, the “normalised losses” may well be constant (and hence Pielke “right”) but if the ongoing cost caused by the adaptation is being ignored then he’s being “right” about the wrong thing.

The bottom line is that we should focus on events first, and see if there is a pattern there, and then worry about what the trend in losses caused by those events might be, taking into account the costs of adaptation should they arise.

JasonB,

Good point! In both comments. 🙂

I have recently been looking at Pielke’s Hurricane data, and South Eastern Coastal temperatures for the US. The result is that while the trend vs time for damages normalized to 2010 is only 0.1 +/-0.2 Billion dollars per annum, a trend that changes substantially with different normalization years, the trend vs coastal SST is 10.9 +/- 20.1 Billion dollars per degree centigrade. The latter trend also changes with year of normalization, but far less significantly.

Given evidence that hurricanes become more powerful with increased SST, and given that SE US coastal waters were warmest in the 1930s and 1940s and have essentially no trend (0.002 C/annum) from 1915-2010, this seems a far more appropriate way to analyze the data than calculating the trend in damages per year. Further, given that SE US Coastal SST will increase under global warming, that means an increase in normalized Hurricane damage in the US is already shown to be “likely” as the IPCC defines the term. How much longer before it will be statistically significant I cannot say.

For anybody trying to replicate my results, I used the normalized damages to 2010, relative to 2010 from Blake, Gibney and Landsea (2011); and downloaded HadSST3 data from 100 to 70 degrees west, and from 15 to 40 north from the KNMI climate explorer.

Jason B’s point about building codes is correct. Worse, as populations have increased and become wealthier, there is a natural tendency to build with more robust materials regardless of building code. Thus, in the suburbs there is a far higher proportion of brick buildings than in the past. More importantly, in the city centers of major populations, most construction is now in reinforced concrete or steel; building materials that mean that any reasonable hurricane damage will be superficial (windows, carpets) rather than structural. In contrast, the 1900 Galveston Hurricane destroyed a city consisting almost entirely of wood houses. The 1926 Miami Hurricane destroyed a city of similar construction, with a few multistory brick buildings in the city center.

Pielke’s normalization essentially assumes that because the wood construction of Galveston in 1900 was completely destroyed, an equivalent Hurricane striking Galveston today would also destroy all structures in the area – a ridiculous assumption.

TTP, thank you for pointing out what we all should know in this debate.

That trying to link current weather events to AGW is not possible on short time scales of 20-50 years.

No one has any right to attribute current weather events to global warming if it exists as they cannot show up in a provable way in the next 20- 50 years.

Trying to prove arguments on this only lessens the character and reputation of people on both sides of the debate.

“Pielke’s Tweet is quite revealing, in that it illustrates that all he cares about is his own reputation. If you say he’s right, that’s all he cares about, and he’s going to advertise it so everybody knows you’ve said he’s right.”

Simple messaging Dana. A simple message repeated often enough is much stickier than a jargon-loaded comprehensive rebuttal. Pielke probably knows that.

Tom Curtis – there are quite a few wooden houses in Galveston that survived the 1900 hurricane. The waves destroyed the houses closest to the beach. The waves drove that debris toward the inner city. It created a “wall” of debris that protected the buildings, both wooden and brick, further in from the beach.

Newer stick construction has lots of metal reinforcement brackets that are intended to keep the structure from breaking up, which might make the formation of the debris “wall” less likely.

After 1900 they built a seawall.

If the most extreme storms are getting more frequent, then cat 5s will have a greater fraction of monster 5s. I don’t see why we would expect the same loss distribution for that category in the future.

Thanks for all the comments. Aslak, I agree. That was something else I had thought of commenting on, but things were getting rather long.

I should add that I’m likely to be mostly out of contact today, so apologies if I don’t respond to comments.

angech, you might want to read the title again, and then reconsider your large claim. Don’t disappoint me!

There also seems to be a recent increase in the number of technically weak but very large storms. Sandy e.g. was so big it didn’t have time to spin up beyond Cat. 3.

Pingback: Weather, climate change, the risk to our expensive infrastructure – and our lives | Fabius Maximus

angech,

The fact that it can take a long time for a statistically significant trend to emerge for certain kinds of extreme weather events doesn’t mean that we can’t consider attribution in the meantime. We do after all know something about the physical processes involved in these events so it is possible to make a judgement about the extent to which factors such as higher air or sea temperatures are likely to have contributed.

angech,

That you actually think that’s what he said goes a long way to explaining what you “know”.

I suggest you carefully re-read what it’s actually saying, and then, before leaping to any conclusions about what it means, try to understand the difference between the concepts of “events” and “losses caused by those events”, and the comments above about adaptation (especially Tom’s).

Personally, I think the whole “normalised losses” idea has little value due to the large number of confounding factors and the lack of accounting for adaptation costs. It’s a much simpler problem just to work out the likely outcome in terms of events and then estimate the costs of adapting (or not, as the case may be) to those events.

Marco.

Which large claim? I have used very conservative values which RP, TTP and Kerry have all agreed with.

The elephant in the room is the unloaded dice, which, as no one has mentioned, will take just as long to stake a claim to no warming and no increased damage.

As for being warned that the dice are loaded, depends on who is warning you.

You have everyone on one side using weasel words and avoiding the facts.

If, as the greats Burns and Kipling said, you could see the facts as others see them then you would be one of us.

Or Pogo in we have met the enemy and he is us.

Ouch, touched a nerve there, did I, Jason and Andrew.

Just had a root canal done 2 days ago and still not right.

Still rather than argue the facts which are so clear and spelt out by people from all sides regularly

i.e. it takes a lot longer than a human lifetime to prove anything conclusively either way. Let’s look at your reactions. Deep down there is the tendril of fear: what if angech et al. are right? Then there is the argument, perfectly lucid. Then the attempt, Andrew, to obfuscate. What if we take a really, really long time for AGW to show up through really rare extreme events, that will do it.

Cue in 6000 years and we will have a flood so big I will wake up and agree with you.

Extreme weather events can happen at any time and there is no way to attribute warming to them.

Jason, TTP is a warmist. Read his site, don’t tell me to read it. RP and JC have and it is perfectly clear he said what I faithfully repeated. I am not the first to have used it in a comment. No conclusions leapt to at all.

Then you have the gall to say that you don’t think that “normalised losses” has any value as an idea, even though other respected and not respected highly intelligent people do and everyone is discussing it.

And why, because it shows your ideas might be wrong. And the thought of that makes you say let’s put it under the carpet and forget about that inconvenient truth.

As I said, touched a pretty sore nerve there no doubt at all.

Angech

Firstly, I didn’t say that. If anything, I was suggesting there is something like a 1 in 10 chance that we could detect a trend in total losses within the next 50 years. If the models are correct, then it becomes even more likely that we’ll detect a change in the distribution of the losses within the next few decades (i.e., more losses due to the more extreme events). Also, there are already studies (Elsner et al. being one) that are starting to find statistically significant increases in trends in some basins for Tropical Cyclones. So claiming that we cannot find any trends within the next 20-50 years is not a demonstrably correct statement.

> …the same analysis suggests that there is a 50% chance that a statistically significant increased trend in total normalised losses will be detectable within the next 100 years.

I think this is still supporting the basics of RP’s claim. Not the exact numbers, which aren’t too interesting, and are clearly sensitive to exactly what you end up looking at. But the overall concept: which is that it’s a fair way into the future.

William,

To a certain extent, yes, and that’s largely why I wrote the post the way I have. It is likely to be a fair way into the future. I would argue that the way I did this (by doing a p-test on every model, rather than looking for the time at which every model shows a positive trend) is more informative, as it shows that the likely timescale is more like the early 2100s, and that there is a 2/3 chance of it being between 2050 and 2150 (roughly speaking). Reasonably far, but not necessarily that far. Of course, there is a roughly 1 in 10 chance that we could detect a trend in normalised losses within the next few decades, which suggests that we can’t rule out an increase in normalised losses fairly soon.

So, what the Crompton et al. paper is showing (given the models and the assumptions) is that we will almost certainly have detected a trend in normalised losses by the mid-2200s. There is only a 5% chance that it will be after – roughly – 2250. However, it appears that many interpret the result as suggesting that we won’t/can’t detect a trend until the mid-2200s, and that is not the correct interpretation of the analysis. If anything, that inverts the test. The test shows that there is only a 1 in 20 chance that it will be after the mid-2200s.
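A toy Monte Carlo can make this reading of the numbers concrete. The sketch below is not the Crompton et al. model: it assumes, purely for illustration, a yearly loss series made of a small linear trend plus Gaussian noise, defines "emergence" as the first record length at which an ordinary least-squares trend is significantly positive (one-sided, normal approximation), and repeats this over many realisations. Every realisation contains the same real trend, so the spread of emergence years is purely a detection question. The 95th percentile is the record length by which 95% of realisations have detected the trend; it says there is roughly a 1 in 20 chance of waiting longer, not that detection cannot happen sooner. The function names and parameter values are hypothetical.

```python
import math
import random


def emergence_year(trend, sigma, max_years, rng, z_crit=1.645, min_years=30):
    """First record length n (>= min_years) at which an OLS trend fitted to
    years 1..n is significantly positive (one-sided test, normal approximation
    to the t distribution), or None if that never happens within max_years."""
    sx = sy = sxx = sxy = syy = 0.0
    for n in range(1, max_years + 1):
        x = float(n)
        y = trend * x + rng.gauss(0.0, sigma)  # toy "normalised loss" in year n
        sx += x
        sy += y
        sxx += x * x
        sxy += x * y
        syy += y * y
        if n < min_years:
            continue
        dxx = sxx - sx * sx / n  # centred sums of squares and cross-products
        dxy = sxy - sx * sy / n
        dyy = syy - sy * sy / n
        slope = dxy / dxx
        resid_var = (dyy - slope * dxy) / (n - 2)  # residual variance of the fit
        if slope / math.sqrt(resid_var / dxx) > z_crit:
            return n
    return None


def percentile(sorted_vals, q):
    """Nearest-rank percentile of an already sorted list."""
    k = max(0, math.ceil(q / 100 * len(sorted_vals)) - 1)
    return sorted_vals[k]


def fraction_detected_by(times, year):
    """Fraction of realisations whose trend was detected within `year` years."""
    return sum(1 for t in times if t <= year) / len(times)


# Hypothetical toy parameters, NOT the values used by Crompton et al.
TREND, SIGMA, MAX_YEARS, N_REAL = 0.01, 1.0, 400, 2000
rng = random.Random(42)

raw = [emergence_year(TREND, SIGMA, MAX_YEARS, rng) for _ in range(N_REAL)]
times = sorted(math.inf if t is None else t for t in raw)  # never detected -> inf

p5, p16, p50, p84, p95 = (percentile(times, q) for q in (5, 16, 50, 84, 95))
print(f"median emergence: {p50} years; central ~2/3 range: {p16}-{p84} years")
print(f"95% of realisations detect the trend within {p95} years")
print(f"chance of detection within 30 years: {fraction_detected_by(times, 30):.2f}")
```

Testing every year and stopping at the first significant result makes early detections somewhat more likely than a single test at a fixed record length would; that simplification is deliberate here, since the point is only how to read the percentiles.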

I will add, though, that it is quite sensitive to the assumptions. For example, do we really expect the losses associated with category 5s between 1900 and 2005 to be a good match to those in the future? If they’re getting more intense, then this would seem unlikely. Do we really expect category 3s and weaker to completely disappear by the mid-2100s? Again, unlikely. I realise that one can only work with what one has, so this isn’t a criticism of Crompton et al., but it does seem that there is reason to suspect that the timescale determined by Crompton et al. is somewhat on the high end, given these issues. I was thinking of playing around with slightly different assumptions, but then it can end up just being guesswork.

I agree that it is likely to be a long way into the future, but I do think that it should be acknowledged that one can’t rule out that it could be much sooner – and this is based on the same analysis as in Crompton et al.

William,

I should add that the other aspect that I was trying to highlight was that even if the normalised losses are unlikely to increase significantly for a long time, the distribution of these losses (between the weaker and more extreme events) will likely change much sooner. Hence, suggesting that we can’t detect any signal in normalised losses is wrong (i.e., we can detect a change in the distribution, even if the total is unchanged). Maybe it’s just me, but if we’re likely to see more of the losses being due to extreme events reasonably soon (potentially within a few decades), then even if the total losses remain unchanged, this effect would seem to be policy relevant.

Angech, the big leap is that you went from US tropical cyclones to “current weather events”. In other words, you just generalized from very sparse data.

angech,

No, you didn’t touch a nerve; you made an argument and we answered you. I don’t know what particular facts you think we are disputing; the fact that it will take a long time for a statistically significant trend to emerge for some kinds of extreme weather events is not in dispute. This does not necessarily mean that AGW is not influencing such events, or at least will not do so in the future; it’s just the nature of trying to establish trends for rare events. But, as I said before, we can still make certain judgements based on our understanding of the physical nature of such events.

And there are other events, such as heatwaves for example, where there is already an indication of an increasing trend and we can be more confident of attributing them to AGW, others where the picture is currently not so clear but should emerge in the coming decades.

Sorry, Angech

Andrew/Jason,

I think Angech may be playing seagull chess: a subset of ClimateBall, but with clearer rules.

Andrew Adams: “It’s just the nature of trying to establish trends for rare events”.

This. Everything else on this topic seems like a distraction to me. The bear-in-woods example is nice, but we need more of those. Proving that extreme events take a long time to show statistical significance – so what? Yeah, trivially true but more-or-less irrelevant. ATTP, I don’t mean that to be disrespectful to your analysis at all – it’s just, I’m not sure it’s a good idea ending up arguing over that particular ground. Emanuel’s response to Pielke Jr is making that point – don’t focus on the wrong thing.

p.s. Climatecrock’s resident troll is claiming your article plainly confirms Pielke Jr’s findings. Calvinball etc. etc.

Dan,

Firstly, I was just trying to understand this in more detail myself. It was interesting and I think I now understand the basic analysis better than I did. Also, I was trying to illustrate that using Crompton et al. (for example) to claim that we won’t/can’t detect any trend in losses before the mid-2200s is wrong. Their own paper makes this fairly clear. So, I’ve done the math and – I think – shown that those who state that we can’t detect trends for another 200 years or more are incorrect. There is a chance that it will take that long, but there’s also a chance that there will be evidence within the next few decades. Even more so if you consider how the different categories will contribute to the losses.

Indeed, but hopefully that is obvious to anyone with any sense.

“Why is communicating climate change science hard?”

or perhaps:

“Why can communication about climate science so easily be misinterpreted and misused?”

Likelihood of that can be reduced by careful formulation of the posts, but to what extent is that worth the effort?

Pekka: “Why can communication about climate science so easily be misinterpreted and misused?”

Relevant example from today, from a completely different field: “It is, in fact, a very good rule to be especially suspicious of work that says what you want to hear, precisely because the will to believe is a natural human tendency that must be fought.”

I had thought that I had been careful, but my unqualified statement “So, Roger Pielke Jr is right” may have been a poor choice of wording. On the other hand, one of my goals is to illustrate how one may play ClimateBall™, and this post is a useful illustration 🙂

Dan,

Indeed. Something we should continually be reminding ourselves.

I think Aslak hit the nail on the head.

These stats are based on damages being caused in a linear fashion based on historical data. This simply isn’t true.

To my understanding, climate change is meant to cause fewer storms that are much worse. And no one has seen this actually happen in the data yet. But, like everything in climate change, it’s more complicated than that. We’re talking about larger storm surges from larger storms, added to sea-level rise, causing more severe flooding.

The cost of damage from storms should in fact compound.

PS. Perhaps ATTP could just say it was an April fools joke on Pielke? 🙂

A few comments:

1. Many of these comments simply assume a trend will emerge; it just takes a (long) while. The fundamental question is whether that correlation even exists, or, more accurately, the size of the effect. The longer it takes to detect a good correlation here, the more likely the effect is (very) small. If AGW were already causing a 10x increase in hurricanes, clearly we would detect this quite soon. If the effect is very small (<5% change), it will take a long time and, more importantly, policy wouldn't change much for so small a change.
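The dependence of detection time on effect size can be put in rough numbers. For a linear trend b in annual data with independent noise of standard deviation σ, the standard error of the least-squares slope after n years is about σ√(12/n³), so the record length needed to reach a given detection probability scales like (σ/b)^(2/3). The sketch below is a back-of-the-envelope approximation under that assumption: it ignores autocorrelation and the heavy tails of real loss data, and the helper name and example trend values are hypothetical.

```python
import math
from statistics import NormalDist


def years_to_detect(trend_per_year, noise_sd, alpha=0.05, power=0.5):
    """Approximate record length (years) at which a linear trend reaches the
    requested detection probability with a one-sided test.

    Assumes independent noise and uses SE(slope) ~ noise_sd * sqrt(12 / n**3),
    the large-n form of noise_sd / sqrt(sum((x - xbar)**2)).
    Solving trend / SE = z_{1-alpha} + z_{power} for n gives the expression below.
    """
    z = NormalDist().inv_cdf(1 - alpha) + NormalDist().inv_cdf(power)
    return (z * math.sqrt(12) * noise_sd / trend_per_year) ** (2 / 3)


# Hypothetical effect sizes, expressed as trend per year in units of the noise sd
for trend in (0.001, 0.01, 0.1):
    print(f"trend {trend:>5}: ~{years_to_detect(trend, 1.0):.0f} years for 50% detection")
```

Under these assumptions a trend ten times larger is detected in roughly 10^(2/3) ≈ 4.6 times fewer years, so a long wait for significance is consistent with a modest effect; it does not by itself distinguish a small effect from none.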

2. Some have questioned why the US-centric approach: is there cherry picking going on? Global numbers are available and also show very little correlation. You can examine the ACE data, or other global counts; you get the same inconclusive results (= not supporting an increasing trend). Emanuel's discussion of North Atlantic numbers makes little mathematical sense, as the last thing you want to do with noisy, erratic trends (global cyclone data) is to split them up into subsets and expect to find something meaningful; subsets are going to be even noisier, more erratic and more prone to false correlation.

3. GDP and insurance losses are probably a pretty bad measure, for reasons already mentioned, when actual storm counts are available (why use a proxy?). I believe(?) RPJ created this analysis in response to others who trotted out the un-normalized insurance loss trend as evidence that extreme events were getting worse.

ACE data:

There are many other measures. Google and look at them all. You won't find much compelling information that things are changing in a measurable way.

Tom,

I don’t think it’s quite fair to say people are assuming a trend will emerge. There are models that suggest that trends will emerge and it is these models that have been used to try and estimate the emergence timescale for losses in US landfalling Tropical Cyclones.

I haven’t looked at what Emanuel has done, so can’t comment on that at this stage, but surely this argument applies equally to considering only losses/damage, as only a small fraction of Tropical Cyclones are actually associated with losses/damage.

I agree that we should be using actual storms, rather than proxies. Maybe RPJ’s reason for doing this is as you say, but then I would argue that the typical response should not be “it won’t happen for a long time”. A more correct conclusion is that we can’t tell much about when it will happen. Again, I would argue that we can say a little more as I don’t have a fundamental issue with the Crompton et al. analysis, I just think it is being over-interpreted by some. I would argue that the general conclusion one could draw is that it will likely take more than 100 years for a trend in losses to emerge, but it could be much sooner and it could take much longer.

Of course, as others have pointed out, if the category 5s get stronger, then assuming that the losses associated with such events between 1900 and 2005 are a good proxy for future losses is probably wrong and, if so, a trend will likely emerge sooner than this study would suggest.

I think most would agree that there is not definitive evidence that things are changing in a measurable way. I would argue that there is some evidence, though, and – as some other posts have pointed out – there is still physics. Our understanding of the climate is not just based on whether or not something has definitively happened yet. Similarly, I would argue that one cannot use the fact that we don’t yet have definitive evidence to claim that things will not change anytime soon.

Another one using false equivalence to conceal a denial of physics.

False equivalence: the C20th doesn’t tell us much about the C21st, especially the second half of the C21st. Note that the climate signal is barely distinguishable from the noise even now.

Physics denial: implicit in this “sceptical” discourse is the absence of any significant temperature response to ever-increasing GHG forcing.

> So he’s read it.

Or has he?

I didn’t define what I meant by “it” 🙂

ATTP,

I would agree with almost everything you say here. Of course, erratic noisy data gives everyone an opportunity to “interpret” things that support their position. It’s possible there is something about the North Atlantic that makes it especially sensitive to AGW, but it may also be random fluctuations.

I don’t have an issue with a theory that states things will get worse later in the century, only with some that state they already demonstrably have (see Haiyan / Sandy coverage). I don’t think we are close to statistical significance at this point; in fact, we probably don’t even know the sign for sure yet. Wind shear has been a prominent destroyer of Atlantic hurricanes over the last decade, and it is unclear what role this and other mechanisms play in the grand scheme. We will know a lot more in 2114 than we do now.

So I may say that the observational data doesn’t support the hypothesis things are already getting worse, and another may say the data is too noisy to know it isn’t happening, and both are defensible positions (except I’m right, ha ha). We could draw confidence intervals and analyze it to death but will get no closer to the answer. We all must simply wait and count.

There is a difference between trending and truth, and a difference between probability and truth.

You may well be able to point out a trend in 20-50 years; heck, there will always be a trend, up, down or flat. But imputing significance to it is another matter.

A discernible upward trend in that time interval is only 10% likely to be correct, 90% likely to be wrong.

Given 98 years, on your figures, you are 50% likely to be right! At 247 years you are 95% likely to be right.

Your words.

If we extrapolate this to surface temps for Marko we could say that the IPCC is 90% wrong to be advocating action on climate change based on a small 20-50 year trend in temperature changes particularly when the trend is now flattening rapidly due to the pause.

Physical climatology denial.

Angech,

You didn’t “touch a nerve”, but you did confirm my preconceptions about the level of comprehension required to hold the position you expressed.

Let me keep it simple for you:

If we successfully adapt to increasing numbers of increasingly severe climate events, there could well be no trend in losses.

A lack of trend in losses (whether or not you “normalise” them first) says nothing about:

1. The frequency or severity of events themselves, therefore it doesn’t disprove AGW.

2. The ability to attribute current events to AGW, which is the long bow you attempted to draw. (Made even longer by the fact that the OP is about losses caused by tropical cyclones in the US, not “weather events”, which is more general both in event type and location. Does it prevent us from attributing the floods in Pakistan or Australia? The heat wave in Russia? Etc., etc., etc…)

3. The cost of the adaptation. The cost doesn’t show up in the losses (even though that cost was incurred in order to prevent the losses increasing in the face of increasing frequency and severity of events), but that doesn’t mean it’s not very expensive, or that more mitigation might not be more cost-effective.

And what if you restrict your analysis to a wealthy country like the US, which has more capacity to adapt than those who might not be so lucky? (New Orleans notwithstanding, of course.)

Furthermore, the fact that in the distant future the OP says there is a very strong chance of increasing losses is very bad news indeed, because it means that, according to that analysis, we will no longer be capable of keeping the losses flat through adaptive measures. Perhaps mitigation would have been better?

It really does help if you understand what’s being discussed rather than outsourcing it to those who you think are “respected highly intelligent people”.

JasonB

“If we successfully adapt to increasing numbers of increasingly severe climate events, there could well be no trend in losses”.

In fact there would be a reduction if the adaptations were successful. There will always be a trend, I guess you mean it would be neutral or zero.

And that presupposes increasing numbers [the argument goes that there would be fewer events, only more severe] and increasing severity.

Moreover, there is only so much loss from a really severe event. You can only be 100% wiped out in a severe category 5 cyclone; make it a category 6 and there is no extra loss, it all went with category 5. I guess you could call it a plateau in losses.

“A lack of trend in losses (whether or not you “normalise” them first) says nothing about:

1. The frequency or severity of events themselves, therefore it doesn’t disprove AGW.”

Actually, it sort of says that things are not changing, which does disprove AGW; otherwise this argument, put up by RP, would not be so vehemently denied by yourself and others.

That’s why ATTP has done this post: to fight it out with him.

“2. The ability to attribute current events to AGW, which is the long bow you attempted to draw”

Drawn, fired, bullseye. As ATTP says, it is very difficult to attribute trends over a short time to AGW with any certainty, i.e. <10%. No serious climate scientist can look me in the eye and say this flood in Pakistan [or Australia] or this heatwave in Russia is due to AGW, any more than I could say this record cold spell in the USA is due to AGC. Yes, you can attribute anything you like; I cannot stop you, but then you would not be a serious climate scientist.

"3. The cost the adaptation. more mitigation might be more cost-effective."

Tell that to the people in Wellington NZ, or to me (ex-Darwin, Cyclone Tracy). There is no way that the cost of mitigation can ever reduce structural damage to a safe level. You can save lives, but you cannot protect property from extreme events. Remarkably, this means that low-cost housing, i.e. in poorer countries, is more resilient in recovering from these disasters, as it is much cheaper to reconstruct afterwards.

" It really does help if you understand what’s being discussed"

My rebuttal, while biased, is quite extensive and should show that I do understand what is being discussed. We have differing viewpoints on the responses.

My view is that people are adult enough to do things without other people telling them what to do.

Your view might be that people are, shall we say, slow to understand what is good for them, and that you know the right answers and will show them the way even if they do not want to go your way and even if they are not alive yet to make their own bad choices.

I did not appoint you to be my saviour but thanks for trying.

Angech,

No, that is not the correct interpretation. There is a 50% chance of detecting a statistically significant trend within 98 years. Our reality is not an ensemble of 10000 possible realities. It is one reality. As JasonB has pointed out, you’ve rather misunderstood the significance of these numbers.

Angech,

But we know things are changing, don’t we Angech? Or are you suggesting that buildings now, and in the past, and way into the future, are all built in exactly the same way and in exactly the same places? That changes in building codes over the past 100+ years have been completely pointless and have had no effect? That buildings now are just as prone to damage as they were 100 years ago and will remain just as prone to damage for the foreseeable future?

If you answered “no” to those questions, then you might actually start to see the problem with trying to extrapolate from a statement about “normalised” losses to a statement about the existence of extreme weather events attributable to AGW.

Why use such an indirect measure prone to changes in population density and distribution, technology, and building standards, when you could just look at the events directly?

Your theory appears to be that we “vehemently deny” RP’s argument because he is correct. I suppose that mindset is actually quite understandable for someone used to denying the science of AGW, where the more evidence there is for it, the more vehement the denial of it.

But that’s simply a case of projection on your part. Another explanation for why someone might disagree with an argument is that they can see the flaw in that argument.

How to tell which is which? Well, when the people you characterise as “vehemently denying” the argument are actually pointing out rationally what the flaws in it are, and everyone seems to see what your misunderstandings are (including the author of the piece you are misunderstanding) except you, you should wonder whether your knee-jerk reaction that they must be denying it because it is correct is mistaken.

On the other hand, when people fail to address the points at hand and instead pepper their comments with non-sequiturs like “My view is that people are adult enough to do things without other people telling them what to do” then you should probably start to question their decision-making process.

Angech,

You do actually make one good point:

Moreover there is only so much loss for a really severe event. You can only be 100% wiped out in a severe category 5 cyclone, make it a category 6 and there is no extra loss, it all went with category 5.

But that supports Jason’s argument that no change in losses does not necessarily mean no change in extreme events.

To pick up on one other comment:

As ATTP says it is very difficult to attribute trends in the short time to AGW with any certainty ie <10%. No serious climate scientist can look me in the eye and say this flood in Pakistan [or Australia] this heatwave in Russia is due to AGW anymore than I could say this record cold spell in the USA is due to AGC

Well, first of all, there is a false equivalence there, because there is no AGC occurring, so attributing any event to it would be an automatic fail. Secondly, ATTP is referring specifically to trends in losses arising from US Tropical Cyclones; the same does not necessarily apply to trends in actual extreme weather events of all kinds. The IPCC says, for example, in the WG1 SPM:

“Changes in many extreme weather and climate events have been observed since about 1950. It is very likely that the number of cold days and nights has decreased and the number of warm days and nights has increased on the global scale. It is likely that the frequency of heat waves has increased in large parts of Europe, Asia and Australia. There are likely more land regions where the number of heavy precipitation events has increased than where it has decreased. The frequency or intensity of heavy precipitation events has likely increased in North America and Europe.”

What’s more, the lack of a detectable statistically significant trend for particular events is not itself proof that such events are not influenced by AGW and even less so that they will not be influenced in future. And there is much more to understanding the relationship between AGW and extreme events than just looking at graphs, we can consider the physical drivers behind such events (to the extent that they are understood) and consider how they are likely to be influenced by such factors as higher air and ocean temperatures, increased sea levels etc.

The fact is that we have to work with imperfect information and make the best decisions we can in the circumstances, given our current knowledge. We can’t wait 200 years to see if a positive trend does emerge for losses due to US tropical cyclones. And taking action to mitigate and/or adapt to extreme events after they’ve already occurred doesn’t seem a wise course of action; after all, you wouldn’t wait to take out insurance until after your house has burned down.

Thank you, JasonB and ATTP, for letting me have a say and for addressing the argument and not the man. ATTP, I am very happy that you have put up this post. I have commented at JC that your approach is one of the few ways I have seen in which one could directly attribute an anthropogenic fingerprint, if it exists; it’s just that it will take a long time.

JasonB, things do change over time, and that is why normalisation was applied.

C20th GAT and forcings

angech,

But even that’s not true — if there was an increasing trend in normalised losses, that wouldn’t prove AGW any more than a lack of increasing trend disproves AGW. There are plenty of more obvious and direct fingerprints of AGW.

Unless you can demonstrate that the normalisation of loss information correctly removes the effect of all factors other than event incidence and severity, you have just agreed with what I have been saying all along.
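JasonB’s point about what normalisation can and cannot remove can be illustrated with a simple multiplicative normalisation that rescales a historical loss by ratios of price level, real wealth per capita and population. This is the general shape of the normalisation schemes discussed here, but the class, the functions and all the numbers below are hypothetical. Because nothing in the formula tracks vulnerability, an identical storm striking after stricter building standards have halved the damaged fraction shows up as a halving of normalised loss, even though the hazard did not change.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Hypothetical socioeconomic state of an affected region in one year."""
    price_index: float        # general price level
    wealth_per_capita: float  # real wealth per person
    population: float         # people in the affected area


def normalise(loss: float, then: Snapshot, now: Snapshot) -> float:
    """Rescale a historical loss to 'now' conditions by inflation, wealth and
    population. Nothing here accounts for changes in vulnerability (e.g. building codes)."""
    return (loss
            * (now.price_index / then.price_index)
            * (now.wealth_per_capita / then.wealth_per_capita)
            * (now.population / then.population))


def exposure(s: Snapshot) -> float:
    # Toy assumption: value at risk is proportional to price x wealth x population
    return s.price_index * s.wealth_per_capita * s.population


then = Snapshot(price_index=10.0, wealth_per_capita=15.0, population=2.0e6)
now = Snapshot(price_index=100.0, wealth_per_capita=45.0, population=6.0e6)

# The SAME storm hits in both years; stricter building codes halve the damaged fraction.
loss_then = exposure(then) * 0.02
loss_now = exposure(now) * 0.01

print(f"{normalise(loss_then, then, now) / loss_now:.2f}")  # prints 2.00
```

In this toy setup a fall in normalised losses reflects reduced vulnerability rather than a change in the storm, which is why a flat normalised series cannot on its own say much about event frequency or intensity.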


I have only recently caught up with the articles that this post refers to, which means I have also come to discussions after they have run their course…

I posted this comment to Kerry Emanuel’s article at FiveThirtyEight and would like to re-post it (with minor context changes) here, if that is OK. I think there is real value in KE’s argument, but it is being somewhat overlooked:

What I get out of Kerry Emanuel’s article is the very important distinction between a Bayesian approach, which looks at how we can form robust inferences about probability based on incomplete data, and a Frequentist approach, which applies well-known tests to the data to see how it compares to a random distribution.

Many people make a tacit assumption that ‘statistically significant’ means ‘really occurring’. I think Professor Emanuel illustrates that, depending on the kind of phenomenon and the time frame over which we sample it, the test of ‘significance’ can actually be uninformative; in other words, the lack of ‘statistical significance’ actually tells us virtually nothing of note, rather than telling us the phenomenon is not occurring. This is the point of his example of bears in the woods.

Significance testing needs to be put into context as part of a constellation of data, theory and models that we use to build up our understanding of the world; it is, after all, just one of the tools, and can mislead us if we treat it as the only valid tool. This is modern-day statistical analysis, folks, and Professor Emanuel has just given a very clear lesson in it.
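One way to see why a non-significant result can be nearly uninformative is a two-hypothesis Bayesian update. This is an illustrative framing, not the calculation in Emanuel’s article: it assumes a prior probability that an effect exists, a test with false-positive rate α, and a power (the probability of a significant result if the effect is real). The function name and the prior of 0.8 are hypothetical. When power is low, as it is for rare events over short records, a non-significant result barely moves the posterior.

```python
def posterior_effect_given_null_result(prior, power, alpha=0.05):
    """P(effect is real | test was NOT significant), by Bayes' theorem."""
    miss_if_real = prior * (1 - power)        # effect real, test missed it (Type II)
    correct_null = (1 - prior) * (1 - alpha)  # no effect, correctly not significant
    return miss_if_real / (miss_if_real + correct_null)


# Hypothetical prior belief that the effect exists
PRIOR = 0.8
for power in (0.1, 0.5, 0.9):
    post = posterior_effect_given_null_result(PRIOR, power)
    print(f"power={power:.1f}: P(effect | not significant) = {post:.2f}")
```

With these assumed numbers the posterior only falls from 0.80 to about 0.79 when power is 0.1, but to about 0.30 when power is 0.9: absence of significance is informative only when the test had a good chance of finding the effect.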

Mark,

I agree. This fixation with the frequentist approach, in my view, often tells us very little. A Bayesian approach would be much more informative. You’re also correct that many seem to conclude that the lack of a statistically significant trend means that nothing is happening, rather than that we don’t know if anything is happening. People should probably look up the difference between Type I and Type II errors.
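The difference between the two error types can be illustrated by simulation. The sketch assumes, hypothetically, a 30-year record with independent Gaussian noise of known standard deviation and a one-sided test on the fitted trend. With no trend, the test flags one about 5% of the time (Type I error); with a small but real trend, it usually fails to flag it (Type II error). The simulated power is compared with the analytic value; the trend size, record length and function names are illustrative assumptions.

```python
import math
import random
from statistics import NormalDist


def trend_z(series, sigma):
    """z-statistic of the OLS slope, assuming independent noise with KNOWN sd sigma."""
    n = len(series)
    xbar = (n - 1) / 2
    sxx = n * (n * n - 1) / 12  # sum of (x - xbar)^2 for x = 0..n-1
    sxy = sum((x - xbar) * y for x, y in enumerate(series))
    slope = sxy / sxx
    return slope / (sigma / math.sqrt(sxx))


def rejection_rate(trend, sigma, n_years, n_sims, alpha, rng):
    """Fraction of simulated records in which a one-sided test flags a positive trend."""
    z_crit = NormalDist().inv_cdf(1 - alpha)
    hits = 0
    for _ in range(n_sims):
        series = [trend * x + rng.gauss(0.0, sigma) for x in range(n_years)]
        if trend_z(series, sigma) > z_crit:
            hits += 1
    return hits / n_sims


def analytic_power(trend, sigma, n_years, alpha):
    """Probability of flagging the trend, from the exact normal sampling distribution."""
    sxx = n_years * (n_years ** 2 - 1) / 12
    shift = trend * math.sqrt(sxx) / sigma
    return 1 - NormalDist().cdf(NormalDist().inv_cdf(1 - alpha) - shift)


# Hypothetical illustration values
SIGMA, N_YEARS, ALPHA, N_SIMS, TREND = 1.0, 30, 0.05, 20000, 0.01
rng = random.Random(1)
type_i = rejection_rate(0.0, SIGMA, N_YEARS, N_SIMS, ALPHA, rng)
power = rejection_rate(TREND, SIGMA, N_YEARS, N_SIMS, ALPHA, rng)
type_ii = 1 - power
print(f"Type I rate  (no trend, flagged):   {type_i:.3f}")
print(f"Type II rate (real trend, missed):  {type_ii:.3f}")
print(f"analytic power for the small trend: {analytic_power(TREND, SIGMA, N_YEARS, ALPHA):.3f}")
```

With these assumed numbers the analytic power is only about 0.12, so a real trend would be missed in roughly 88% of 30-year records; a non-significant result here is mostly a Type II error waiting to happen, not evidence of no trend.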
