I thought I might briefly highlight the recent Cowtan et al. paper *Robust comparison of climate models with observations using blended land air and ocean sea surface temperatures*. As Ed Hawkins points out, it's really an attempt to do an apples-to-apples comparison of global temperatures. Dana has already covered this, as has Tamino.

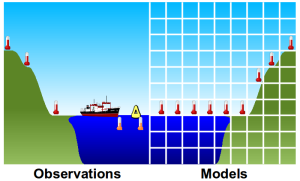

The basic issue is illustrated by the figure on the left. Typically the global surface temperature datasets are generated by combining near-surface air measurements over land with sea surface temperatures over the ocean. What's presented from models, however, tends to be only near-surface air temperatures. Another issue is that the change in sea ice cover means that some cells have gone from near-surface air temperatures over the ice to sea surface measurements. All of this means that the models are typically not presenting quite the same thing as the observations. The results are shown in the figure on the right. The top panel shows the HadCRUT4 temperature data, the mean model ensemble result without any blending adjustments (red line) and the mean model ensemble result with blending adjustments (blue line). The lower panel shows what happens if you update the model forcings. Essentially, updating the forcings and making these blending adjustments brings the models and observations into closer agreement.

From what I can see, this is a pretty obvious thing to do. If you're going to compare models and observations, you really should compare like with like. One might ask why it hasn't been the norm in the past, but I suspect that may simply be because it wasn't expected to make much of a difference; in most fields you wouldn't normally have to deal with pedantic nit-picks. As expected, the usual suspects don't seem to like this new result. I made the mistake of reading some of the Bishop Hill comments: the least optimal combination of nasty and ignorant. Personally, I think it's a very interesting piece of work which does something that seems pretty obvious in retrospect and will probably help to improve model-observation comparisons.
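For anyone who wants to see what "blending" means concretely, here's a minimal sketch. It is a simplification, not the paper's actual procedure: it assumes each grid cell comes with a land fraction, a sea ice fraction, a model air temperature anomaly (`sat`) and a model sea surface temperature anomaly (`sst`), uses air temperature over land and sea ice and SST over open water, and weights cells by cos(latitude). The cell values below are hypothetical, chosen so the ocean air warms a bit faster than the water beneath it.

```python
import math

def blended_anomaly(sat, sst, land_frac, ice_frac):
    """Blend one cell: air anomaly over land and sea ice, SST anomaly over open water."""
    ocean_frac = 1.0 - land_frac
    open_water = ocean_frac * (1.0 - ice_frac)       # ice-free part of the cell
    air_weight = land_frac + ocean_frac * ice_frac   # land plus ice-covered ocean
    return air_weight * sat + open_water * sst

def global_mean(cells, blended=True):
    """Area-weighted mean, using cos(latitude) as a simple area weight."""
    total, weight_sum = 0.0, 0.0
    for cell in cells:
        w = math.cos(math.radians(cell["lat"]))
        if blended:
            value = blended_anomaly(cell["sat"], cell["sst"], cell["land_frac"], cell["ice_frac"])
        else:
            value = cell["sat"]  # the air-temperature-only approach
        total += w * value
        weight_sum += w
    return total / weight_sum

# Hypothetical cells (anomalies in C), not real model output.
toy_cells = [
    {"lat": 0.0, "land_frac": 0.3, "ice_frac": 0.0, "sat": 1.0, "sst": 0.8},
    {"lat": 60.0, "land_frac": 0.5, "ice_frac": 0.0, "sat": 1.2, "sst": 0.9},
]
print("air-only:", global_mean(toy_cells, blended=False))
print("blended: ", global_mean(toy_cells, blended=True))
```

With these made-up numbers the blended mean comes out lower than the air-only mean, which is the direction of the effect discussed in the post; the real size of the adjustment depends on the model fields and on how the observational dataset itself is constructed.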

Has there been a substantive or technically orientated critique of this? All I have had is just generic, conspiracy-orientated dismissal because it says something people don't like.

semyorka,

I don't think so. The only thing I've heard is "what about tropospheric temperatures?" As I understand it, doing such a comparison is quite tricky because it's hard to know if the satellite measurements are actually measuring the right altitude. I think they can be influenced by stratospheric temperatures, so as to give a trend that may not be quite representative of the troposphere.

Deniers have limited space here. If Cowtan15's hindcasting reveals that the models are reasonably skilful, then its forecasting will do the same. Worse, Cowtan15 arrives on top of Hansen 15's "pause buster." Apart from asserting that UAH and RSS are the only faithful datasets, how will deniers cope with this?

I note that Anthony Watts has posted 3 papers recently without employing “CLAIM” in his headers.

Has he seen the writing on the wall?

At Bishop Hill the response has been muted at best, because there is little that they can do with this. Coupled with Gavin's paper on bringing the forcings used in CMIP5 up to date, and La Niña, this kills the pause.

Eli,

Indeed, that’s probably why some of the commenters are resorting to attacking the authors and their institutions. It’s all they have.

It’s all they’ve ever had. See ‘Climategate’. Nothing changes in this nasty little game.

You’re right: the net of evidence is closing, making it more and more difficult for contrarian spoilers to gain traction.

But beware: each time a COP has been scheduled, hardcore deniers have cooked up some mischief to sow doubt among the uninformed. The climate science community should be on their guard: cornered rats resort to desperate behaviour. And, just for the avoidance of doubt, I use ‘cornered rats’ purely as a metaphor. 🙂

Obviously UAH is going to leap at this opportunity to join with RSS in explaining why the thermometer series are more accurate.

Do you have a reference for RSS stating that thermometers are more accurate? Could be handy sometimes. 😉

It’s in this blog post by Carl Mears, a Senior Scientist at RSS. He says

O^O

Here on Carl Mears’ blog (emphasis added):

Bloody hell ATTP…

😉

Great minds, and all that 🙂

I type it from my photographic memory:

UAH sucks.

Thanks, all great minds. 😉

The ENSO model killed the pause long ago.

http://contextearth.com/2014/11/18/paper-on-sloshing-model-for-enso/

There are transitive relationships between the ENSO indices such as SOI and the global SAT — see the CSALT model as an example. This essentially models the natural variability compensation that rides on top of the trending signal.

Lots of behaviors relating to climate science are pretty obvious to figure out. For example, that ENSO is basic sloshing hydrodynamics relating to the thermocline and changes in angular momentum forcing. Alas, this doesn’t mean that the mainstream will pursue it. It’s really not main-stream, but maintenance-stream that they seem more interested in.

Why is it that Cowtan, who is really an amateur climate scientist, is able to contribute ideas? Could it be that he brings fresh perspectives to the data?

“Why is [it] that Cowtan, who is really an amateur climate scientist is able to contribute ideas? Could it be that he places fresh perspectives on the data?” I don’t know…but to me this looks like another really impressive contribution.

He has a lot of experience with large X-ray crystallography data sets.

It's not just Kevin who had this idea; Mann and others were looking at the same thing.

If an auditor had done the work and found out the opposite, what would folks say?

Stupid question. OK.

Cowtan did better science. It's good, solid work, so skeptics will change the conversation to:

1. Who are these guys? (I've heard that one before.)

2. But models

3. But bad observations.


On a side note, I'd like to give a shout-out to the number of guys who are blogging and commenting and doing their own damn science: Robert Way and Zeke.

I guess I qualify as an old fart, and seeing these guys publish puts a big smile on my face.

[Mod : for completeness, this comment has been slightly edited. ]

As for it being obvious, I think it is also obvious this was not a problem crying to be addressed.

The real "Pause Buster" was Cowtan and Way two years ago, and it was quickly accepted and applied by everybody except Christy. Cowtan15 is probably being widely applied to models as I type.

So this is my question. Is Cowtan the most influential bloke in Climate today?

Yes, I knew that about Cowtan.

Personally, I would guess that my own most significant contribution to science has been in the analysis of disordered crystallographic structures. See my two models featured at ANL’s X-ray crystallography server:

http://sergey.gmca.aps.anl.gov/TRDS_sl.html

Deconstructing oscillations in ENSO is almost freaking small potatoes in comparison to being able to decipher the underlying structure of quasi-periodic 3D lattices.

I am willing to descend into part-time madness in trying to figure this stuff out 🙂 Cowtan probably also finds this stuff easy in comparison.

"It's not just Kevin who had this idea; Mann and others were looking at the same thing." Yeah, great minds thinking alike. They all deserve the credit.

I’ll be interested to see how this result pans out over time and how it fits in with a lot of other recent papers on the mismatch between models and measurement-based records of global surface temperature.

“If an auditor had done the work and found out the opposite, what would folks say?” Probably not fair for me to guess what climate scientists would say. I should admit that my response would probably be a lot more, er, skeptical, (is that ad hom or is it a sensible response to previous form?), but I would be interested if no one clever could find major holes in it (although I usually don’t have the competence to know what is a major flaw and what is a nitpick). If it were reproducible then I think some mainstream climate scientists would presumably reproduce it in time.

I may be wrong, but I don't suppose the question of whether state-of-the-art climate models are getting things about right in terms of projections of global surface temperature is anywhere near settled, although they are the best we have. If it turns out that the models aren't running a lot hotter than the real world, then that's really bad news, although getting better answers about whether that's the case is a very good thing and gives humanity more chance of tackling climate change.

Sometimes auditing’s not cheap:

Being thorough incurs costs.

Oh, and I’d fire the editor who accepted that the article’s title starts with the self-congratulatory “Robust.”

Willard, "Robust" was probably Kevin Cowtan's word, but I must bow to your experience in the matter of self-congratulatory conduct.

“As for it being obvious, I think it is also obvious this was not a problem crying to be addressed.”

There were a number of these little problems which the temporary... err... muting of warming brought to light.

1. Temps in the Arctic. It's been long known that GISS and HadCRUT disagreed over the Arctic, but quite frankly the difference was small enough that it just didn't look interesting to solve. Nothing BIG turned on solving the problem. When the muting of temperatures started to become an issue (real or imagined; Tamino makes a plausible case for imagined), solving that problem became more interesting.

2. The data comparison. Again, it's been known for a while that the comparison was apples and oranges... OK, oranges and tangerines... but again, not something you'd think would be a career maker, until the muting extended.

And there are some other areas where more attention to detail may yield results with good payback: Svalgaard's work on sunspots, for example.

Satellite data, from what I see, is also a treasure trove, or potential treasure trove; data pigs should be diving in.

“Sometimes auditing’s not cheap:”

That is the question, Willard. As a data pig, I can attest to wasting huge amounts of time trying to find an area where more attention to detail will actually pay off. Years wasted, actually.

Sadly, failed auditing (finding no issues) is not exactly publishable.

That's why you don't normally commit too much effort to any kind of formal audit in the physical sciences. Not only are there no formal rules, since anything really interesting won't have been done before, but those who would do the auditing would need to be as expert as those doing the science. That's why it's better (IMO), and more cost-effective, to simply have everyone doing science and to then rely on the scientific method: reproducibility and repeatability.

Are there CMIP-5 model output datasets that are narrowly targeted at layers of troposphere above “surface,” against which the RSS and UAH variety of indices properly should be compared?

” Not only are there no formal rules, since anything really interesting won’t have been done before, but those who would do the auditing would need to be as expert as those doing the science.”

Not strictly true, but pretty close.

Here is what experience (I like that better than expertise) buys you: a better hunch about where to dig and where not to dig. The basic skills required to do an audit are not SME skills. If you are an SME, then you have a better chance of auditing the right things, or the things where your molehills can become mountains.

Tom,

I can’t see why you couldn’t extract model temperatures at specific heights in the troposphere. The issue, I think, is more to do with how you ensure that the satellite dataset you’re using is actually for a specific height in the troposphere. Ed Hawkins and Robert Way comment on this in Ed’s post.

So, dumb question alert here. Is the general assumption that sea surface temperatures are equal to surface air temperatures above the sea, and that the sea and the air above it are generally in thermal equilibrium?

Sam,

I don’t know if that is what has been assumed before, but what this seems to be showing is that this isn’t the case. One thing that may be relevant is that if there is some change in forcing, then even though the atmosphere and ocean’s well-mixed layer (upper 100m or so) equilibrate quite quickly, it still takes a few years. I don’t know if this is why there is this discrepancy, but it might be part of the reason.

ATTP,

Thanks. I guess in the back of my mind I always kind of thought it wasn't intuitively obvious that sea and air temperatures would necessarily be equal, especially depending on whether you were taking readings during the day or the night. I just kind of thought that the air temperature would vary more rapidly, while the sea would be closer to constant due to its bigger thermal inertia. Perhaps looking at anomalies removes this, though.

Sam, I would have thought not (that sea surface temperature equals surface air temperature). But presumably that doesn't matter, since we are always dealing with temperature anomalies. So it's largely under circumstances where what was sea ice becomes open sea surface (i.e. when there is a very large change in sea ice extent) that there is a significant change in temperature anomalies measured at the sea surface compared to the air above it that isn't "normalized" by the fact that one is measuring anomalies...

..that’s how I see it. After all one only needs to remember the times one has spent “at sea” (e.g. holidays by the beach, being forced into the N sea by one’s mother on Spring days out on the Fife coast, paddling in sea kayaks or canoes and running one’s hand through the water etc.) to know that the sea surface temperature generally doesn’t equal the air surface temperature above the sea. I expect that applies to the wider expanse of ocean…
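A toy illustration of the two points above may help, under stated assumptions: the numbers are invented, the baseline is just the first two values of a short series, and blending anomalies by the current sea ice fraction is a simplification rather than how any particular dataset or paper does it. A constant air-minus-sea offset vanishes once both series are turned into anomalies, but a cell that switches from ice-covered to open water switches from reporting an air anomaly to reporting an SST anomaly.

```python
from statistics import mean

def anomalies(series, baseline_len):
    """Subtract the mean of the first `baseline_len` values (the baseline period)."""
    base = mean(series[:baseline_len])
    return [x - base for x in series]

def blend_anomaly(sat_anom, sst_anom, ice_frac):
    """Ocean-cell blend of anomalies: air anomaly over ice, SST anomaly over open water."""
    return ice_frac * sat_anom + (1.0 - ice_frac) * sst_anom

# Hypothetical open-ocean cell: the air sits a constant 1.5 C below the sea surface.
sst = [15.0, 15.1, 15.2, 15.3]
sat = [t - 1.5 for t in sst]
# The constant offset disappears once both series are expressed as anomalies.
print(anomalies(sst, 2))
print(anomalies(sat, 2))

# Hypothetical Arctic cell that loses its ice: the air anomaly grows a lot,
# while the newly open water stays close to freezing.
sat_anom, sst_anom = 4.0, 0.2
print(blend_anomaly(sat_anom, sst_anom, ice_frac=1.0))  # prints 4.0 (ice-covered: air anomaly)
print(blend_anomaly(sat_anom, sst_anom, ice_frac=0.0))  # prints 0.2 (ice-free: SST anomaly)
```

So in this sketch, anomalies take care of the everyday air/sea difference, and it is the change in what each cell reports as the ice retreats that doesn't get normalized away.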

OK you’ve highlighted the normalizing effect of anomalies in your post while I was typing mine 🙂

Chris,

True, the North Sea feels incredibly cold even in the height of summer. However, how cold something 'feels' to us isn't always indicative of its temperature. If you touch a piece of metal and a piece of wood which are both at room temperature, the metal will feel colder because it has a higher thermal conductivity (or was it effusivity?) and you transfer heat into it more quickly. The same is true of water and air at the same temperature.

Incidentally I think that the above is also how you explain firewalking, since coals are very poor conductors of heat.

Sam taylor,

I don’t think there was ever a general assumption that SSTs are equal to ocean SATs. The use of ocean SATs from models is largely due to convenience. The way model data is made available through CMIP5, SAT is a single global array covering land and ocean, which means it is very easy to download, process and output global land+ocean averages. SST data not only requires a separate download, but is actually part of a different domain in the model setup (SST is in ocean, SAT is in atmosphere), which means grid sizes are different. Generally SST data is higher resolution too so file sizes can be considerably larger.

Really this has more relevance to blog and public discussion than to the scientific literature, where simple global average model/data SAT comparisons are not held with such importance, which is probably why this issue hasn't been addressed much. I do wonder about D&A (detection and attribution) work, though, and whether using model SST data would alter results much. I should probably ask Ed Hawkins!

This story does highlight the fundamental and rather wonderful value of models. Without a model we lack context for interpreting observations/measurements; one might argue that we don't really know what we know without a model. And comparisons between observations and models provide a focus for scientific investigations, especially when there is apparent disagreement between models and measurement.

And these episodes highlight a fundamental difference between a scientist's and a pseudoskeptic's love for model-measurement discrepancies! The scientist loves the focus provided for attempting to resolve the discrepancy and advance knowledge; the pseudoskeptic loves the fact of the discrepancy for its own sake and wishes to nurture it as a permanent blemish with which to bash the science 🙂 (apols for snark!)

It's fascinating that satellite tropospheric temperature measurements are being discussed in the context of modelled/measured temperature discrepancies. It was the long (15-year) fundamental discrepancy between UAH MSU tropospheric temperature reconstructions and models of the tropospheric temperature response to greenhouse warming that led to careful focus on the UAH reconstructions and their correction, largely in favour of the models. If I may turn on my snark again briefly, it seems that at least one purveyor of tropospheric temperature measures wishes to nurture vestigial MSU tropospheric temperature/model discrepancies, but no doubt scientists will resolve these discrepancies too in due course...
