Update (26/09/2019): This paper has now been retracted. The authors say:

Shortly after publication, arising from comments from Nicholas Lewis, we realized that our reported uncertainties were underestimated owing to our treatment of certain systematic errors as random errors. In addition, we became aware of several smaller issues in our analysis of uncertainty. Although correcting these issues did not substantially change the central estimate of ocean warming, it led to a roughly four-fold increase in uncertainties, significantly weakening implications for an upward revision of ocean warming and climate sensitivity. Because of these weaker implications, the Nature editors asked for a Retraction, which we accept. Despite the revised uncertainties, our method remains valid and provides an estimate of ocean warming that is independent of the ocean data underpinning other approaches. The revised paper, with corrected uncertainties, will be submitted to another journal. The Retraction will contain a link to the new publication, if and when it is published.

I wanted to just briefly mention the recent paper that [quantifies] ocean heat uptake from changes in atmospheric O2 and CO2 composition, by Resplandy et al. The interesting thing about this paper is that it uses proxies to infer the change in ocean heat content. What it finds is that the change in ocean heat content is probably at the high end of earlier estimates, which are based mostly on direct measurements of ocean temperature.

What this implies is that observationally-based estimates of equilibrium climate sensitivity (ECS) are probably also on the low side. Fortunately, BlueSkiesResearch has already repeated this calculation. Essentially, it increases the lower limit by about 0.1K, the upper limit by about 0.5K, and the median by about 0.2K. This partly resolves the issue I had with the slightly high TCR-to-ECS ratios that come out of these types of analyses.
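For reference, the energy-balance estimate behind this kind of calculation is usually written as ECS = F_2x ΔT / (ΔF − ΔN), where ΔN is the change in the planetary heat uptake rate (mostly ocean). Here is a minimal sketch of why a larger heat uptake pushes the estimate up. The input values are purely illustrative assumptions of mine; they are not taken from Resplandy et al. or from the BlueSkiesResearch recalculation.

```python
def energy_balance_ecs(delta_t, delta_f, delta_n, f_2x=3.7):
    """Energy-balance ECS estimate in K.

    delta_t: change in global surface temperature (K)
    delta_f: change in external forcing (W/m^2)
    delta_n: change in planetary heat uptake rate (W/m^2)
    f_2x:    forcing from a doubling of CO2 (W/m^2); 3.7 is a commonly quoted value
    """
    if delta_f <= delta_n:
        raise ValueError("forcing change must exceed heat uptake change")
    return f_2x * delta_t / (delta_f - delta_n)


# Illustrative numbers only (assumed, not from the paper).
delta_t, delta_f = 0.8, 2.3
low_uptake = energy_balance_ecs(delta_t, delta_f, delta_n=0.5)
high_uptake = energy_balance_ecs(delta_t, delta_f, delta_n=0.6)
print(f"ECS with lower heat uptake:  {low_uptake:.2f} K")
print(f"ECS with higher heat uptake: {high_uptake:.2f} K")
```

Because ΔN sits in the denominator, the same increase in heat uptake moves the high end of the range by more than the low end, which is the pattern described above.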

However, the paper suggests that this increase in the change in ocean heat content implies a reduction in the carbon budget for staying below 2°C, and I don’t think that is correct. The carbon budget depends mostly on the transient climate response (or the transient response to cumulative emissions). This doesn’t really depend on how much the ocean heat content has changed; it primarily depends on how much we’ve warmed for a given change in external forcing.
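The point can be seen in the corresponding energy-balance expression for the transient response, TCR = F_2x ΔT / ΔF, which has no heat-uptake term at all. The rough sketch below also shows how a budget follows from the transient response to cumulative emissions (TCRE) if warming is assumed to scale linearly with cumulative emissions. All inputs, including the TCRE value, are illustrative assumptions and not numbers from the paper.

```python
def energy_balance_tcr(delta_t, delta_f, f_2x=3.7):
    """Energy-balance TCR estimate in K; note there is no heat-uptake term."""
    return f_2x * delta_t / delta_f


def remaining_budget(target_k, warming_so_far_k, tcre_k_per_1000_gtco2):
    """Remaining cumulative emissions (GtCO2) before the target is reached,
    assuming warming scales linearly with cumulative emissions (TCRE)."""
    return 1000.0 * (target_k - warming_so_far_k) / tcre_k_per_1000_gtco2


# Illustrative inputs only (assumed, not taken from the paper).
tcr = energy_balance_tcr(delta_t=0.8, delta_f=2.3)
budget = remaining_budget(target_k=2.0, warming_so_far_k=1.0,
                          tcre_k_per_1000_gtco2=0.45)
print(f"TCR: {tcr:.2f} K")
print(f"Remaining budget: {budget:.0f} GtCO2")
```

Neither function takes the ocean heat uptake as an input, so revising that number alone leaves both results unchanged.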

So, I don’t think that this new ocean heat content estimate really implies anything with respect to carbon budgets; I think the main significance is that it suggests that some observationally-based estimates of equilibrium climate sensitivity are probably too low and that the likely range for equilibrium climate sensitivity is still something like 2°C to 4.5°C.

Update:

There is now a guest post on Realclimate by one of the authors in which they discuss errors in the uncertainties and in the trend estimate.

ATTP, you write in the article: “Essentially, it increases the lower limit by about 0.1K”, pointing to James Annan’s recalculation. In your conclusion you write “and that the likely range for equilibrium climate sensitivity is still something like 2°C…”

Resplandy et al. (2018) states: “…would push up the lower bound of the equilibrium climate sensitivity from 1.5 K back to 2.0 K (stronger warming expected for given emissions),…”. This implies a delta ECS of 0.5°C, not 0.1°C as you mentioned in the text. You contradict yourself? What about the reliability of the conclusions of the paper?

The interesting thing about this paper is that it uses proxies to infer the change in ocean heat content. What it finds is that the change in ocean heat content is probably at the high end of earlier estimates, which are based mostly on direct measurements of ocean temperature.

Does it provide an explanation for the low bias in the estimates based on direct measurements?

Of course, independent of such an explanation, we know that the conclusion on ocean heat content is wrong…as “global warming has stopped.”

Deep ARGO results?

It’s a good thing a proxy estimate didn’t move the needle the other way.

Haven’t found it discussed, but it would seem this finding has implications for the sea level rise budget.

The research findings contained in Resplandy et al have drawn considerable media attention throughout the world including…

Startling new research finds large buildup of heat in the oceans, suggesting a faster rate of global warming by Chris Moody & Brady Dennis, Energy & Environment, Washington Post, Oct 31, 2018

Resplandy et al. is also the suggested topic of discussion under RealClimate’s Unforced variations: Nov 2018 (http://www.realclimate.org/index.php/archives/2018/11/unforced-variations-nov-2018/).

That was always highly unlikely Steven. Because Consilience. The low-ball ECS estimates conflicted with so many other, independent lines of evidence that it was always far, far more likely that they’d turn out to be wrong (or measuring the wrong thing) and the mainstream ones turn out to be right.

Regarding proxies, even a mercury thermometer is a proxy for measuring temperature.

I’m especially impressed by the coral proxies that estimate equatorial SST going back in time. They calibrate very well with direct proxy measurements

Yet, these aren’t so useful for estimating AGW as they show no real trend in mean temperature, likely related to the fact that they are essentially reflecting only the cyclic ENSO state.

Joshua,

They cover that briefly in the abstract:

‘During recent decades, ocean heat uptake has been quantified by using hydrographic temperature measurements and data from the Argo float program, which expanded its coverage after 2007. However, these estimates all use the same imperfect ocean dataset and share additional uncertainties resulting from sparse coverage, especially before 2007’

Previous studies have found that the coverage shortcomings in available direct observations would be expected to result in quite large underestimation – e.g. Durack et al. 2014.

Not sure I would lean on a proxy which ever way it swung things. It basically confirms that the range of estimates from direct measures is good.

That’s all.

Yes Paul I know.

I take it your argument is we can ditch thermometers, ditch Argo, and just use corals… and measuring CO2 and oxygen.

Isn’t it amazing that less precise, less accurate, less direct measures are consistent with better measures.

Err, duh. Here is the point: if these proxies gave you an answer not consistent with thermometers, you’d pitch them. In fact the discipline is built on this.

Steven, please don’t start with ye olde FUD about proxies.

Not again.

Paul –

I saw that. I was wondering why there would be systemic error in a particular direction as opposed to random error in various directions.

Any idea how they sample out-gassing into the atmosphere from back in the day?

Shouldn’t say error…. meant bias.

Most all of the corals are in the tropics where the warming trend is clearly not as strong. I don’t think there are nearly as many corals at higher latitudes.

“The non-tropical coral Cladocora caespitosa as the new climate archive for the Mediterranean: high-resolution (∼weekly) trace element systematics”

https://www.sciencedirect.com/science/article/abs/pii/S0277379106002770

“This study thus demonstrates the feasibility of extracting and exploiting high-resolution geochemical records from non-tropical corals such as C. caespitosa as a proxy for SST.”

I largely agree with Steven that one of the first conclusions that we should probably draw is that this is consistent with the other measurements of OHC. I would argue, however, that it would be rather surprising if an alternative method for estimating OHC changes suggested a smaller change, given that there are lots of indications that these energy balance estimates are biased low.

Joshua,

In Durack et al. it was basically all about the Southern Ocean (plus adjacent areas in the South Atlantic, South Pacific, South Indian Ocean), which is expected to be one of the places with the fastest ocean warming at depth due to vertical mixing. Direct observational datasets don’t tend to reproduce such an anomalously fast warming Southern Ocean, even though they do show fast warming in other vertical mixing hotspots. However, satellite altimeter sea level data does seem to reproduce the expected pattern. One obvious possible reason for it not appearing in the gridded data from direct observations is that the Southern Ocean is/was probably the poorest sampled region in the world.

Gas measurements have been taken multiple times a day at various sites since the late 1980s/early 1990s.

Frank,

There are lots of other indications that the lower bound should be closer to 2K than to 1.5K (see the recent Royal Society report, for example). What I was suggesting is that this new OHC estimate doesn’t change the lower bound by very much; it has a bigger impact on the median and on the high-end tail.

Another line of evidence that global warming is real, not that anyone here has any doubts about global warming. I wonder what will happen as the ocean heat sink becomes saturated, more of the thermal imbalance will go into the atmosphere and cryosphere I guess.

One reason to hold doubts about the OHC as derived from direct measurement by buoys is that it has been wrong in the past. Like the satellite LT record, any change in the instrumentation, coverage or methodology in the observations can introduce a bias or systemic error.

Some may remember the discontinuity in the record when the ARGO buoys were first used. Willis had a paper reporting an apparent large fall in OHC because of the difficulty of splicing one record onto another. Different measuring regimes and unsuspected errors in the coverage/depth at which the new system was reporting data initially showed a big cooling dip…

The advantage of the results from the proxy measurement of a OHC trend is that it provides a result consistent with the best we can derive from measurement, from a methodology, or process, that is constant over the whole time period from which the proxy data is drawn.

And confirms the smooth underlying trend that ocean data returns in contrast to the ‘pauses’ and ‘hiatuses’ of the land metrics.

RP(jnr?) should be pleased that the ‘preferred’ indicator of the real trend and impact of AGW now has the added confidence of any metric that can be derived from independent lines of evidence.

Paywalled article. Does anyone know how many different sites of CO2 measurement were done over the world, and similarly with O2? The idea is interesting; the amount of uncertainty would depend on a lot of factors. It will be interesting to see a rerun in 5 years compared to Argo etc. Good that another different attempt has been made.

Senior, Izen, not Junior.

A mercury thermometer is a proxy Steven. It measures expansion not temperature. So is a thermocouple. A double proxy because you don’t measure resistance directly either. Even if you did measure temperatures directly, the finite set of point measurements is a proxy for global temperature in all the places we didn’t measure. Hence kriging etc. Satellite tropospheric temperatures? Proxies. And noisy ones at that. Anthropogenic CO2 emissions? Proxies, based on commercial reports, fuel carbon content and extent-of-burning estimates. Balloon heights? Proxies. Time of day? Proxies, nowadays based on oscillations of a quartz crystal. Satellite height and location? Very dodgy proxies by all accounts. Clouds? Proxies. Nobody counts the water or ice droplets. Volcanic aerosols? Ditto. Ocean pH? Proxies, no-one counts hydronium ions.

Need I go on?

The key question is how well the proxies represent the target, which is measured by correlations, comparisons and uncertainty estimates. Which I’ve seen published in every proxy paper I ever read. At least, every reputable one.

angech, the supplementary information is not paywalled. The Extended Data tables at the end list the origins of the data, which is previously published material. The references are not paywalled either. You can follow them up and extract their source data. It’s probably not paywalled either; most isn’t. The raw data is probably on a public website anyway. Free versions of older papers can often be found using Google Scholar. I recommend the Google Scholar button in Chrome.

angech, the lamenting about paywalled papers is IMO some kind of backfire… If anybody is interested in articles he should have found the magic link ( sci-xxx.xx) to get a free copy of almost any paper. Or the other way around: If you don’t know the url you are not interested?

So is a thermocouple. A double proxy because you don’t measure resistance directly either.

Nitpicking, but a thermocouple provides a temperature dependent voltage. A thermistor provides a temperature dependent resistance.

Aha, my mistake. But at risk of kicking off an infinite regression, do you measure voltage directly? And don’t your eyes detect a change in light rays as a proxy for the meter reading, and your eyes send electrical signals to your brain as a proxy for the image on your retina?

Next stop, The Matrix 🙂 .

Okay, 0 to 2000 meters sampling was sparse before ARGO.

Are there any lines of evidence that indicate OHC reconstructions prior to ARGO could be way off?

Not that I know of. Does look a trifle consilient, doesn’t it?

Pingback: A major problem with the Resplandy et al. ocean heat uptake paper | Climate Etc.

Nic Lewis has a new post on this paper at Climate Etc.

dpy,

Yes, I know. Turns out John Kennedy pointed out something similar on Twitter a few days ago

“Nicholas Lewis. 4 hours ago

I have analysed the APO data in Extended Data Table 4 and checked the paper’s results. I found that the linear trend in the dAPO_Climate data was 0.88 per meg per year, not 1.16 per meg per year as claimed. Moreover the claimed uncertainty in this trend was far smaller than what I calculate using the data error distributions. As the paper’s ocean heat uptake rate is derived by applying a conversion factor to its dAPO_Climate trend, this means that all of the paper’s findings are wrong.”

Bold statement.

Lucia has put up a comment backing up the Kennedy claim but I have some worrying doubts.

Nic’s response sounds pretty convincing.

Pretty convincing indeed:

Logically, OHC is likely low. That remains true regardless. On to deep ARGO.

If you plot the new data, without any attempt at fitting, it is similar to the existing OHC data.

laughing now.

It’s not the same old FUD about proxies. In reality it is something I learned from Tim Osborne.

Duh.

It’s this.

1. They will necessarily have wider uncertainties than direct measures (see Osborne’s complaints about the narrow uncertainty in Mann’s work as an example).

2. They will necessarily have central measures that are either higher than or lower than the central measures of observations (strictly speaking, they can never be exactly equal).

What does this imply?

#1 They can marginally improve our knowledge. It COULD tell us something about structural uncertainty, since thermometers are also proxies, albeit more direct and mediated by things like the laws of physics for expanding liquids in tubes, for example. The wider uncertainties don’t help.

#2 IF you want to combine the central measures from two different ways of measuring the same thing (thermometer proxies and other more indirect proxies) then you ought to do that properly rather than merely accepting the estimate of the less direct way. That is, since the proxy will ALWAYS have a central measure that is different from (in either small or large ways) the central measure of a more direct method, there will always be a temptation to accept the central measure of the proxy IF it is biased in the direction you happen to prefer.

The best thought experiment BBD could run on this to understand stuff is the following.

This type of thought experiment takes training, and honesty. Its a simple exercise and when your job is data every day, you can learn it in no time.

Suppose the PROXY approach was done FIRST and you got an estimate of X

for OHC.

Now suppose you did direct measurement and got X – c

what would you think?

what would you think if the direct measure got X +c?

And now, if Lewis is correct and the proxy actually shows a lower OHC, what do you conclude about the proxy?

Me? My assessment doesn’t change regardless.

The study of the proxy can tell us something about structural uncertainty.

If you want to “move” the estimate then you need to combine the two metrics properly.

no silver bullets, no coffins, no nails.
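One standard way to combine two independent estimates of the same quantity “properly” is inverse-variance weighting. The sketch below only illustrates that general technique with made-up numbers; it is not a reconstruction of anything in the paper, and it assumes the two estimates have independent, roughly Gaussian errors.

```python
import math


def combine_inverse_variance(estimates):
    """Combine independent (value, sigma) estimates of one quantity.

    Each estimate is weighted by 1/sigma^2, so the tighter estimate
    dominates and the combined sigma is smaller than either input.
    """
    weights = [1.0 / sigma ** 2 for _, sigma in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, math.sqrt(1.0 / total)


# Made-up numbers in arbitrary units: a tight "direct" estimate
# and a looser "proxy" estimate with a higher central value.
direct = (1.00, 0.10)
proxy = (1.30, 0.30)
value, sigma = combine_inverse_variance([direct, proxy])
print(f"combined: {value:.3f} +/- {sigma:.3f}")
```

With these inputs the combined value sits much closer to the tighter estimate than to the looser one, which is the sense in which a wide-uncertainty proxy can only nudge the answer.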

Correction: it’s not necessarily the case that the uncertainties of the proxy will be larger, especially in cases where the measured metric has high spatial uncertainty or where the direct instrumental “proxy” is horrible. The other thought to consider is whether you would use the less direct proxy to “correct” the more direct proxy. For example, nobody uses NH tree ring series to “correct” the actual thermometers we have prior to 1850. Why not?

On consilience

from steve F

‘I didn’t see the paper, only the press releases, but my immediate reaction was: “Well, if that is right, then thermal expansion has to have been the dominant cause for measured sea level rise, and ocean mass increases (melting of land supported ice) estimated from Grace data have to be WAY wrong. That seems unlikely.”

hmm not sure, But at least he understands consilience

anyway BBD

go take it up with nick stokes

This should be fun.

Oh, publishing the code for regressions should not be an IP issue, so the authors ought to, you know, publish their code, as Nature could require it.

What a day. Elections. Two different ways of getting exactly the same result but using inverse SD’s. Nick Stokes says John Kennedy’s method gives him 1.162 so very close but not exact. I hate exact from 2 different measures when they are not mathematical.

I am amused by the irony that Nick Stokes is replying to Richard Tol when he writes:

;o)

Steven,

I think the point is that the ECS depends on the difference between the heat uptake rate now, and the heat uptake rate during the base period. If you weight their regression using the uncertainties then you get a higher heat uptake rate during the recent period (where the uncertainties in the proxies are large) than you would get if you did a linear regression without any uncertainty weighting.

I think it’s reasonable to weight by the uncertainties, but reasonable people can also disagree. I think it’s unfortunate that they haven’t made this clear in the paper, so it would be interesting to hear their response. One should also bear in mind that this is also published in Nature, so it’s almost certainly wrong 🙂

Steven,

Reading Nick Stokes, it’s very clear that Nic Lewis criticised the paper before understanding the methodology. Not impressive, particularly when accompanied by the condescending rhetoric.

My own view is that the ideal way to do this is to assume that they didn’t make some kind of silly mistake and then try to work out what they actually did. This is essentially what John Kennedy has done. Of course, if you try everything that you can think of and still can’t get what they did, then you can start to consider that they have indeed made some kind of silly mistake. If you can get what they did, you can still disagree with their methodological choices.

what ATTP said.

The best starting assumption should be that *YOU* have done something wrong, and that you are missing something, and try and understand what they have done. I find emailing them and asking is quite a good thing to do (and in accord with the “Golden rule”).

It appears that some people were able to work out what they did from the paper. This seems to have involved reading and work. Whoda thunk.

[I make no pretence of being qualified to comment on whether what they did was a correct, or optimal analysis. But it doesn’t seem that they hid what they did, or made any elementary mistakes as alleged]

@dikran

Not sure about that irony.

Resplandy’s trend can be reproduced with weighted least squares, using one over the squared standard error as weights, and an arbitrarily large weight for 1991. Their standard errors are cumulative, and 1991 is an arbitrary choice. Their trend does not make a lot of sense.
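To see how the weighting choice alone can move a fitted trend, here is a small sketch comparing ordinary least squares with weighted least squares, using one over the squared standard error as weights. The series is synthetic and the error model (a tiny standard error in 1991 that grows linearly afterwards) is an assumption for illustration only; this is not the authors’ code or data.

```python
def weighted_linear_fit(x, y, sigma=None):
    """Return (intercept, slope) minimising sum(w * (y - a - b*x)^2),
    with w = 1/sigma^2; sigma=None gives ordinary least squares."""
    w = [1.0] * len(x) if sigma is None else [1.0 / s ** 2 for s in sigma]
    sw = sum(w)
    xbar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    ybar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxy = sum(wi * (xi - xbar) * (yi - ybar) for wi, xi, yi in zip(w, x, y))
    sxx = sum(wi * (xi - xbar) ** 2 for wi, xi in zip(w, x))
    slope = sxy / sxx
    return ybar - slope * xbar, slope


# Synthetic series: a steeper rise in the early years, flatter later.
years = list(range(1991, 2017))
values = [1.2 * min(t - 1991, 8) + 0.8 * max(t - 1999, 0) for t in years]
# Assumed standard errors: tiny at the start, growing with time,
# so the first year effectively pins the fit.
sigmas = [0.05 + 0.2 * (t - 1991) for t in years]

_, ols_slope = weighted_linear_fit(years, values)
_, wls_slope = weighted_linear_fit(years, values, sigmas)
print(f"OLS slope: {ols_slope:.3f} per year")
print(f"WLS slope: {wls_slope:.3f} per year")
```

In this toy case the weighted fit is dominated by the early, steeper part of the record, so the two slopes differ even though the data are identical.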

My take is that the new method generally corroborates existing OHC data. This is a step forward, since this is a completely independent method. However, there is too much uncertainty in the data and fit, to say much more than that. More data is going to be needed to move the needle.

The lesson – don’t get too excited about any single study or method.

Ironically, I see parallels in Energy Balance Method ECS estimates. Here was a new method, giving a different answer. However, with the passage of time and multiple studies, it is becoming clearer that EBM results don’t move the needle either.

Steven

Wrong assumption expressed in an inappropriate tone.

> 1991 is an arbitrary choice

As opposed to what, Richie – objective Gremlins?

As I understand it, the issue is that their analysis assumes that there is no uncertainty associated with the 1991 data, which then forces the best fit to essentially go through that data point.

AFAIK, the OHC is an integral in time, starting with 1991 as the start of the integral. Thus, you have no error term for zero, so even if there were an error bar, 1991 would be at its center and you subtract that error bar from subsequent years. It is quite clear that there is some error propagation growth between 1991 and 2016 (see Figure 3b).

Also over at CE, NS highlights their methods section and their use of MC simulations.

Finally, others are expected to eventually confirm this method or not in the peer reviewed literature. Blog science suks big time.

EFS,

Yes, I read some of Nick Stokes’s comments and noticed that he’d pointed out that it’s all relative to 1991.

NL is in a league of his own, the little league.

LR’s publications …

https://resplandy.princeton.edu/publications

Prof Tol wrote “Not sure about that irony.”

no, … pity.

The problem is that the paper supports an idea we all want to believe, that the GHG effect has produced more heat than can be shown in the observations. There is no surprise at these findings because we want to find them.

Mosher’s question, what standards do we want to adopt and what effect do our beliefs have on our acceptance of new studies is increasingly valid.

In this case the paper should have made it quite clear which method they were using to get their figures. If two different measures existed then the one used must be defined, not left to the imagination.

@EFS, Wotts

It is indeed an integral over time. However, the process did not start in 1991. Measurements started in 1991. Pretending that you start at zero without measurement error is wrong in principle. We don’t know how wrong this is in practice.

Fact is, John Kennedy was able to reconstruct their slope estimate, but only under the assumption that the change between 1991 and 1994 matters much more than the change between 1991 and 2016.

Nic Lewis has been able to reconstruct their estimate of the uncertainty about the slope, but only under the assumption that errors are uncorrelated over time, whereas in fact these errors are cumulative.
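The difference between these two assumptions can be made concrete. An ordinary least squares slope is a linear combination of the data, b = Σ c_i y_i, so its variance under an error covariance matrix C is c′ C c. The sketch below compares independent errors (C = σ² I) with cumulative, random-walk errors (C_ij = σ² min(i, j)). The 26 annual points and unit σ are assumptions for illustration and are not the paper’s actual error model.

```python
import math


def ols_slope_coefficients(x):
    """Coefficients c_i such that the OLS slope equals sum(c_i * y_i)."""
    xbar = sum(x) / len(x)
    sxx = sum((xi - xbar) ** 2 for xi in x)
    return [(xi - xbar) / sxx for xi in x]


def slope_sigma(c, cov):
    """Standard error of sum(c_i * y_i) for an error covariance function cov(i, j)."""
    n = len(c)
    var = sum(c[i] * c[j] * cov(i, j) for i in range(n) for j in range(n))
    return math.sqrt(var)


x = list(range(26))  # e.g. 26 annual values, 1991 to 2016
c = ols_slope_coefficients(x)
sigma = 1.0          # per-step error size (arbitrary units)

# Independent errors: each year has its own error of size sigma.
independent = slope_sigma(c, lambda i, j: sigma ** 2 if i == j else 0.0)
# Cumulative errors: year i carries the sum of i step errors (none in year 0).
cumulative = slope_sigma(c, lambda i, j: sigma ** 2 * min(i, j))

print(f"slope sigma, independent errors: {independent:.4f}")
print(f"slope sigma, cumulative errors:  {cumulative:.4f}")
print(f"ratio: {cumulative / independent:.1f}")
```

For the same per-step error size, the cumulative case gives a much larger slope uncertainty, which is why the assumption about error correlation matters so much here.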

(I have been able to reconstruct both John’s and Nic’s work.)

AFAIK, no one was able to reconstruct both their slope and its error.

Richard,

Well, if what you’re presenting is the change since 1991, then it’s not clear that this is wrong.

@wotts

It’s wrong because you pretend there is no measurement uncertainty (there is). In Resplandy’s data, the measurement error grows over time. This is an artifact of the construction of the data, rather than a feature of the system studied.

It’s also wrong because you rescale to the data point you least understand. We do not know whether the observed trend exaggerates the real trend by recovery from a low point, or is masked by return from a high point.

As I said, I don’t know whether this matters here. I cannot reconstruct their result, so I do not know whether their method is sensitive to rescaling.

Is there some kind of a rulebook that lays out when peer review helps to validate a paper and when it’s evidence that verifies the hoax? I get confused sometimes.

Richard,

If it’s relative to the value at some point in time, then you don’t need to know that value, you simply need to know how it has changed. I don’t see how whether that value was high, or low, has any bearing on the trend. It only has bearing on how it relates to a longer timescales, but that isn’t what is being claimed. [Edit: Okay, you’re claiming that it could be a cherry-pick. However, you don’t know anything about a period earlier than this, so adding uncertainty to this data point would seem to be more based on a guess, than anything else.]

This has just come out on Laure Resplandy’s website:

Kudos to Prof. Resplandy.

@wotts

From a statistical point of view, you know most about the mid-point of your sample (2002-3 in this case).

From a measurement point of view, you know most about the most recent observation (2016), that is, if you believe that instruments, coverage and documentation improve over time.

dikran: and Nic??

I would assume so. When he pointed out the error in the comment on Loehle’s climate sensitivity paper that I led, he was polite and helpful in his emails; I assume the same applies here, although I haven’t really followed it in detail.

I should point out that the mistake in question was entirely mine and not that of my co-authors.

dikran, this is the behaviour which is very honourable for the “commander in chief” (lead author): shelter for the crew 🙂 . In the end I would find it helpful also on climate related blogs: less “war”, more open mind. More polite discussion, fewer of the insults seen here, there and everywhere (with apologies to the “Beatles”).

It’s also true – it was my mistake. Yes, less insult and more open mindedness here there and everywhere would be great. I would just say though that I find polite but bad-faith discussion worse than robust (i.e. rude) but honest argument. Politeness can be rather rude if it is only a facade! ;o)

Good luck with that, FrankB:

Counterfactual thinking isn’t exactly polite. Even less is sock puppetry. For now, you have a handle here, a handle at Judy’s, and NicL is thanking you under your real name.

Please stick to one ID, and if it’s a nom de plume, make the connection explicit on your website.

willard, not every frank is me! My handle is frankclimate, here there and everywhere. And yes, this is what I meant: less war, more open mind!

Frank –

Could you reply to whether you consider your counterfactual speculation to be open-minded, polite, etc?

Let’s try a thought experiment: do you think that none of the reviewers in question ever found, through scrutiny, problems in papers that reported higher than expected warming (and thus, requested revisions or rejected the paper)?

Perhaps you are cherry-picking to reverse engineer the mechanism by which a paper with an error passed through peer review?

Perhaps there’s some confirmation bias in your speculation about confirmation bias?

Perhaps there might be a more positive way to foster a positive engagement between people who disagree on the science?

FrankB,

I know you’re not franktoo. He commented here too. The point here is that not everyone can afford to check back and make sure which Frank is which.

Yet again you fail to make the most relevant connection. You’re FrankB. The one whom NicL thanked in footnote xv. Correct?

Open mind starts home.

This is correct.

I’d say “The important question is whether the peer-reviewers of this paper would have given similar scrutiny to a paper that concluded the ocean heat content was rising slower than expected rather than faster.” was impolite. It is an insinuation of (perhaps unconscious) bias for which there is no evidence, but which could be interpreted as casting doubt on the peer review process (again without evidence).

Sorry for confusing franks

> This is correct.

Then put it somewhere on your website, FrankB. Because now you comment at Judy’s under “frankclimate” and you write posts under your real name, e.g.:

Thank you for your understanding.

Joshua, for a small moment I was confused 🙂

Willard, my nickname is no secret. I spelled it out a few months ago on your request and (as far as I remember) you thanked me. No matter of dissent anymore?

> Sorry for confusing franks

My fault. My “you have a handle here, a handle at Judy’s, and NicL is thanking you under your real name” was unclear. The handle at Judy’s referred to the posts, not the comment.

The counterfactual thinking example was meant to illustrate that one can sound polite without being that constructive. Politeness is the best way to be insulting, in general.

It’s constructiveness that matters.

> I spelled it out a few month ago on your request and ( as far as I remember) you thanked.

I know it, but does every ClimateBall player know? Suppose someone S wants to know who you are. S clicks on your name, gets to:

https://frank3867.wordpress.com/

then nothing. If you’d like to have a traceable science, why not do the same with your ClimateBall identity?

My prototype of constructiveness comes from maths. I try to abide by this kind of constructiveness, and could not care less if anyone is polite as long as at the end of the day, we get a more constructive ClimateBall playing field.

I’m not saying that politeness does not matter. That’s just not my role to police it, except here perhaps, something I do once every month or so nowadays. But politeness can easily become a topic to play the ref.

The best way to make sure everyone is polite is to be polite oneself. The first rule of etiquette is never to mention etiquette. Manners maketh man. Everything should naturally follow.

There are plenty of reasons to cast doubt on the peer review process. I’ve done it many times and it is very time consuming and uncompensated. I have also found that negative reviews are often ignored by editors. It is usually impossible to fully replicate any computational results because of the use of complex codes and methods. Some people like Nic have a knack for spotting bad statistical methods. This shows how easy the problem was to find for a statistician who is otherwise an outsider.

There is a great article in Significance on the misuse of statistics and how common it is.

@dpy6629 casting doubt on peer review is fine, it is doing it without evidence that is the problem, partly because it doesn’t give a way for that doubt to be assuaged.

Statistics are very easy to get wrong.

> Statistics are very easy to get wrong.

Starting one’s post with

might be even easier, as the pursuit of crappiness, like audits, never ends.

But is it politeness?

willard: I can’t find that it’s a big deal to have a nickname on climate ball in contrast to other issues. When I respond to dikran I know it’s Gavin C. because he makes no secret of it. So I don’t .

Dear FrankB,

I already countered that point – what you or I may think isn’t the matter of concern, but those who, like Joshua, aren’t in the know. There’s no need to repeat it. In fact it would have taken you less time to simply add the information on your website.

This unresponsive reply may indicate two things. First, that you may not want to associate your ClimateBall comments with your more official productions. Second, that you display some difficulty with theories of mind.

Sock puppetry remains sock puppetry, and you are in no position to epilogue on politeness here as it’s obvious that NicL’s post goes beyond issuing a polite correction.

I hope that’s clearer. If you’ve got any questions, feel free to ask.

Best,

W

Dikran, And your comment indeed points to part of the problem here. It will continue to be impossible to assuage the justified doubts about peer review or science in general until there is reform that addresses the problems and demonstrates results. There is plenty of strong evidence to support those doubts. My view is that only about 1 in every 10 papers is worth the paper it’s printed on. I’ve given here before some of the score or so references from top scientific journals acknowledging that there is a serious problem. Inaction at this point is not an option if we want to regain a strong reputation for science.

Here’s just one item of evidence to take seriously:

https://www.nature.com/news/registered-clinical-trials-make-positive-findings-vanish-1.18181

I know there is such a strong positive bias in the literature in my field and it would be very surprising if climate science didn’t have the same problem.

Drive-by done, DavidY. Once per thread is alright. After that it becomes peddling.

Thank you for your concerns.

“Reading Nick Stokes, it’s very clear that Nic Lewis criticised the paper before understanding the methodology. Not impressive, particularly when accompanied by the condescending rhetoric.”

Hmm

“I wanted to make sure that I had not overlooked something in my calculations, so later on November 1st I emailed Laure Resplandy querying the ΔAPOClimate trend figure in her paper and asking for her to look into the difference in our trend estimates as a matter of urgency, explaining that in view of the media coverage of the paper I was contemplating web-publishing a comment on it within a matter of days. To date I have had no substantive response from her, despite subsequently sending a further email containing the key analysis sections from a draft of this article.”

so, per Dikran he wrote them.

and explained what he did.

A) they write a paper and describe in WORDS what they did in CODE.

B) words are always less precise, more open to interpretation than code. Otherwise we would just compile words, don’t you know.

C) smart people besides Nic have tried to reproduce, no cookie.

Data as used, Code as run. usually clears everything up, but that would be reproducible science.

Instead we rely on luddite approaches like, write them an email, post shit on blogs, stumble around in the dark trying to reconstruct a method from words.

Anyway, I tried to reproduce their results, given their description. I was unable to. I am consequently under no rational obligation to accept their results. The point of writing a methods section is so that any competent researcher can read the words and reproduce the methods and obtain the same results. we used to argue over data availability, took about 10 years to correct that, give it another 10 years and folks will routinely share their code, maybe journals will even demand it, except in rare cases of course.

Am I mistaken, or are there no posts at WUWT about this issue?

What’s up with that? Methinks there must be a reason.

@dpy6629 I think 1 in 10 is probably about right, however I don’t think that is really a problem. Peer-review should only be a fairly minimal hurdle to publication, because otherwise good ideas with equivocal support don’t get shared and the support takes longer to accumulate. Experienced researchers know the rate is about 1-in-10 and one of the first things I teach my PhD students is not to assume that just because a paper appears in a good journal that it must be a good paper and that what it says is true. It is important to consider the actual purpose of peer-review before deciding if there is a problem worth fixing.

Regarding bias – I think it is worth bearing in mind that if your paper can only convince people who already think something is true, it probably isn’t ready for publication yet. So address the reviewer’s concerns and resubmit it (possibly to a different journal).

I suspect Prof. Resplandy may have quite a full inbox at the moment, and academics can have a lot of things in their diary (especially in term time), so they may not be able to give substantive responses as quickly as one might like. This is especially true if they need to do some actual research to find out what the problem is. Unfortunately we can’t assume what we write in emails will remain private, so I can understand why someone might be reluctant to say much until they had fully understood the issues and had a robust answer. Having experienced both ends of that particular type of conversation…

I guess that the response will clarify which method was used and what the uncertainties actually are and then we can all resume our normal approaches.

What does it actually mean that the way in which they handled the errors meant they underestimated the uncertainties? Does it mean they did it Kennedy’s way? Does this mean that it actually does matter what starting point you use?

+1 dikran

Perhaps a… hiatus in the speculation would be appropriate at this point. Let’s give the authors an opportunity to respond and then there will be a basis for substantive as opposed to speculative discussion.

“Am I mistaken, or are there no posts at WUWT about this issue?”

There are two, basically cut-and-paste of the two CE posts by NL.

Bonus answer!

From a post titled “Another failure of peer review, due to corrupt temperature data from a single station” (wherein Watts does a Google Earth cut-and-paste of yet another temperature station)

“Still working on it. Yes I know, you think it’s taking too long, but I don’t care”

(in a Watts comment reply to SM about their EPIC BOOBSHELL 2012 daft paper on microsite issues for CONUS only temperature stations). It will be seven years and counting, to get the only thing of importance, the list of station ID’s those fools used.

BBD “Let’s give the authors an opportunity to respond and then there will be a basis for substantive as opposed to speculative discussion.”

I think it is fine to discuss it, as long as we do it in a “golden rule” sort of a way. How would we like others to discuss the questionable elements of our own papers? I don’t think anyone would like their mistakes to result in suggestions of bias or incompetence, but I would hope most scientists would be happy to see constructive analysis and suggestions for better approaches.

A memo not widely received, I fear 🙂

No, good basic answer for a wide variety of ethical questions (although no panacea); sadly not so easily put into practice 😦

“My view is that only about 1 in every 10 papers is worth the paper it’s printed on”

dikranmarsupial says:

“@dpy6629 I think 1 in 10 is probably about right, however I don’t think that is really a problem. “

I assume you must be talking about a specific area (earth sciences?), because this proportion is much higher in well-funded research. In work coming from state-of-the-art materials labs, just about every paper has something interesting to present. When I was doing that kind of research, every time I would read a new paper, it was to find out how much our team got scooped.

Perhaps 1 in 10 papers are dismissed by a person because the research does not intersect with their own work?

“Perhaps 1 in 10 papers are dismissed by a person because the research does not intersect with their own work?”

very “golden rule” of you

As it happens, I’d say it applies to my own work. The problem is that you don’t always know the value of a paper when you write it, sometimes it takes a decade or so, I have a few papers with which I am still happy. ;o)

I’d also suggest that the situation was rather different in the 1980s.

“very “golden rule” of you”

Indeed, do unto others as you would have them do unto you.

For example, Nic Lewis stated in the Nature paper comments section “that all of the paper’s findings are wrong”. Unfortunately, Lewis neglected to consider that the paper is describing an independent and perhaps novel means of estimating ocean heat uptake, and whatever its shortcomings, at least substantiates previous estimates.

Well Dikran, raising standards for publication doesn’t seem to me to have much of a down side. A few novel ideas might not get into print, but I don’t think that’s much of a loss. The upside here is that a lot more time could be devoted to replication and real skeptical checking. That is where citizen scientists seem to have a role to play. As I understand it that is the direction particle physics has moved in and it’s helped weed out incorrect results.

I am more disturbed by systematic biases. The reference I gave above documents the strong positive results bias that permeates many fields, particularly modeling fields, where the power of selection is very strong. Results that agree “too well” with the data are usually wrong in turbulent CFD simulations, where the uncertainty is substantial. Yet there are plenty of papers out there containing these “perfect” results.

Me too, Dpy.

Yes, let’s tighten up peer-review and reject more papers, unless a paper that disputes AGW is rejected, in which case there’s some kind of publication bias.

dpy6629: You wrote:

As I understand it that is the direction particle physics has moved in and it’s helped weed out incorrect results.

Where did this understanding come from?

> Where did this understanding come from?

I just asked for no peddling.

This encourages peddling.

DY said:

One of those particle thingies built by citizen scientists:

dpy6629 “A few novel ideas might not get into print, but I don’t think that’s much of a loss.”

is that still the case when they are yours?

“The upside here is that a lot more time could be devoted to replication and real skeptical checking.”

In that case, the problem is not with peer-review, but the lack of reward in academia for those activities (which I would say are implicitly discouraged by e.g. REF).

“I am more disturbed by systematic biases.”

me too, for instance climate skeptic papers appearing in journals that request the author to suggest suitable reviewers.

ATTP: “Yes, let’s tighten up peer-review and reject more papers, unless a paper that disputes AGW is rejected, in which case there’s some kind of publication bias.”

As Jeeves would say “rem acu tetigisti”!

I think it ought to be remembered that peer-review is only the first step in the acceptance of an idea by the research community, not the last. The fate of most papers is to be largely ignored by the research community, or maybe pick up a handful of citations. That is post-publication peer-review in action. Sometimes this is an indication of the value of the paper, sometimes it is not, but even when “not” the idea is still there for someone to find later, and if it turns out to be a good idea a decade later, there is still a marker to indicate whose idea it was.

everett.

the watts 2012 really bothers me because all mcintyre had to do was rerun the analysis with the correct data. the results had to be bad because evan started to look at a subset of data after that.

with the new satellite data i have i could look at microsite on all stations but i need a good ground truth to train the algorithm.

as i predicted in 2012 years will go by before the data is shared. anthony is worried about his legacy.. every july i intend to remind folks.

by and large climate folks have improved their data sharing. mostly i attribute it to younger folks coming up.. skeptics otoh are going the opposite direction. my experience.

Surely that will be different when the Journal of the Open Atmospheric Society begins publishing papers and it will all be “Verum in luce” everywhere?

From The Lancet, April 11, 2015

“…One of the most convincing proposals came from outside the biomedical community. Tony Weidberg is a Professor of Particle Physics at Oxford. Following several high-profile errors, the particle physics community now invests great effort into intensive checking and re-checking of data prior to publication. By filtering results through independent working groups, physicists are encouraged to criticise. Good criticism is rewarded. The goal is a reliable result, and the incentives for scientists are aligned around this goal.”

dpy,

Yes, scientists are typically more than happy to think of ways to make sure that their analyses are robust. Were you making some kind of other point?

Are we going to continue with responding to David’s politically motivated pieces of bait regarding peer review? I got the impression that Willard was suggesting this isn’t the place.

If it’s going to continue, I have some devastating and pithy comments to make that I’m sure will get David to acknowledge his wrongful thinking and fallacious arguments.

Joshua,

Willard is probably right. Probably best not to.

[Mod: Sorry, we’ve had this kind of discussion time and time again. I don’t really have any great interest in repeating it again. If you can get off your hobby horse and say something worth posting, I’ll do so. Otherwise, I won’t bother.]

Dikran

https://link.springer.com/article/10.1007%2Fs10584-018-2315-y

Thoughts?

I agree with “Combining disparate information sources is mathematically complex;”! Will try and give it a read next week. Haven’t used BNs for a project for over a decade (dangers associated with glycoalkaloids from potato consumption), but they can be a good way of encoding the uncertainties so you can experiment with the factors.

OHC through September 2018:

Total OHC is?

Helps to have a scale for comparison of importance.

It looks impressive.

angech,

What kind of scale do you want?

angech says: Total OHC is?

Helps to have a scale for comparison of importance.

It looks impressive.

I get something in the range of 1 to 2E+27 for OHC referenced to absolute zero, but I think we already knew we’d be totally screwed if the sun went out . . .

For once I agree with angech here, in the sense that the numbers are rather non-informative and confusing. How can the heat content be zero?

I know, I know, it is actually an anomaly.

It’s probably quite hard to construct a robust estimate of OHC anomalies going back hundreds of years. Based on millennial temperature reconstructions, it’s probably about 5 to 10 times faster now than it was in previous centuries, which is consistent with reconstructions of sea level rise.

The diagram does give a reference to the original paper

NODC has run out of graph once again I see.

News reports that an erratum was submitted to Nature. Focus is on the error bars, unclear whether they also revised the central estimate.

If only the former, Resplandy admits that her new method contains little information.

If also the latter, Resplandy admits that the new estimates are the same as previous ones.

I think this is a step forward. Resplandy shows a historically defensive discipline that honesty matters.

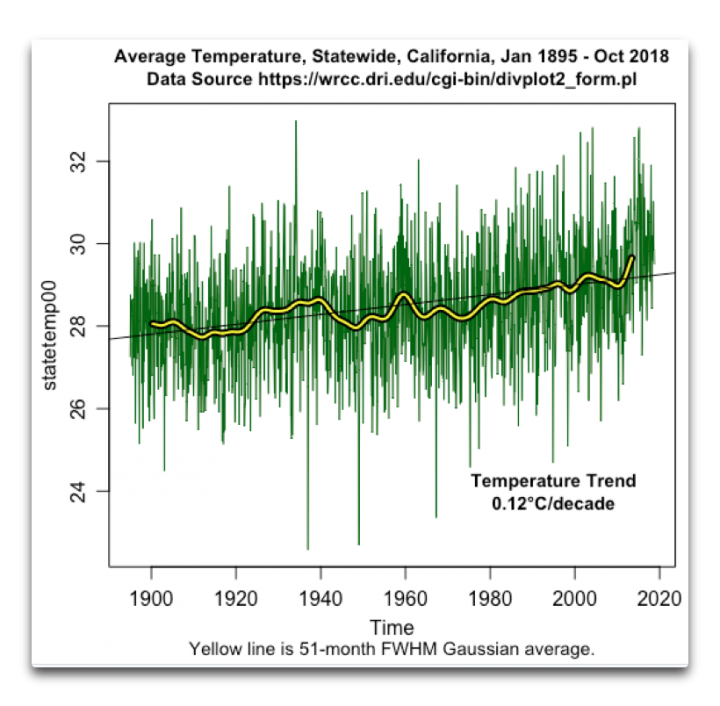

Tol, maybe you should also focus on correcting your skeptic buddies who are claiming that California warming is 10x less than the historical data shows:

Willis Eschenbach is not my buddy.

Authors’ response.

Kudos to Nic Lewis for spotting this and to authors for acknowledging and correcting.

For the record it appears the authors have acknowledged the accuracy of Lewis’s criticism, a very positive outcome.

http://www.realclimate.org/index.php/archives/2018/11/resplandy-et-al-correction-and-response/

Perhaps linking to this in the main post would be a good idea ATTP.

Here’s another article about the correction to the original paper and to the news release about it. ATTP: An addendum to the OP would appear to be in order.

Scientists acknowledge key errors in study of how fast the oceans are warming by Chris Mooney & Brady Dennis, Energy & Environment, Washington Post, Nov 14, 2018

The lead paragraphs of Mooney & Dennis article:

Scientists behind a major study that claimed the Earth’s oceans are warming faster than previously thought now say their work contained inadvertent errors that made their conclusions seem more certain than they actually are.

Two weeks after the high-profile study was published in the journal Nature, its authors have submitted corrections to the publication. The Scripps Institution of Oceanography, home to several of the researchers involved, also noted the problems in the scientists’ work and corrected a news release on its website, which previously had asserted that the study detailed how the Earth’s oceans “have absorbed 60 percent more heat than previously thought.”

“Unfortunately, we made mistakes here,” said Ralph Keeling, a climate scientist at Scripps, who was a co-author of the study. “I think the main lesson is that you work as fast as you can to fix mistakes when you find them.”

I have requested that Nic Lewis apologize for or retract his statement asserting “that all of the paper’s findings are wrong”. So far he hasn’t.

Good job

I’ll repeat my take from day 1

“Not sure I would lean on a proxy which ever way it swung things. It basically confirms that the range of estimates from direct measures is good.

That’s all.”

of course some folks leaned on these results hard.

oops.

Richard Tol wrote “I think this is a step forward. Resplandy shows a historically defensive discipline that honesty matters.”

Oh, the irony. Some people have such a short memory (responses on that thread please).

oops, the link should have been to https://andthentheresphysics.wordpress.com/2015/11/16/short-memory/

So the end result is that it may NOT be worse than we thought,

but just as bad as we already think.

So Resplandy18 still consilient with ARGO.

“The resulting trend in ΔAPOClimate is 1.05 ± 0.62 per meg/yr (previously 1.16 ± 0.18 per meg/yr)“

Confirms what I posted over at JC that the ability to estimate APO changes with any reasonable accuracy was debatable. The SD is way too large to enable confident, as opposed to useful, conclusions to be drawn.

So Resplandy18 still consilient with ARGO.

They have added shallow-water floats. I don’t know if there are shallow-water results in final number. And they are deploying deep-water floats.

For the record…

Resplandy et al. correction and response by Ralph Keeling, Real Climate, Nov 14, 2018

True or false: Resplandy was necessary because the theory requires higher OHC than ARGO has found.

ARGO not warm enough?

JCH: Who would “they” be?

Argument from personal incredulity. (Shock! Again!)

That’s what “they” tried, a priori, to warn the same Ralph Keeling about attempting to discern changes in atmospheric O2. As (duh) even Ralph says: Measuring changes in the amount of oxygen in the atmosphere is difficult because there is so much of it.

And yet… Eppur si muove…

With “Cape Grim” to Ralph as “Mauna Loa” was to his father David.

I know Ralph a bit, and I would bet on the error bars narrowing over time. But for now, a pretty impressive, innovative and consistent early result. (Nattering nabobs of negativity from the know-nothing peanut gallery, notwithstanding.)

False. On both counts.

Yes, I was a bit puzzled by that too.

Right on rustneversleeps! Quite impressive piece of science by Keeling the Younger!

Probably will also burn angech that Australia is shown in the chart 😉

Around the Super Disinformation Highway there is this notion that Resplandy was under orders to find more ocean warming.

I’ve always thought the thermal expansion component of the SLR budget is underestimated, and that ground water storage has always been a negative component (in some budgets it is positive and in some negative.) So on to deep ARGO.

Probably will also burn angech that Australia is shown in the chart 😉

Nah. Only 23 million inhabitants for 7,692,000 sq km, only 1/20th the population density of USA and probably less than most countries. Clean air, clean sky and we export most all our coal overseas and pretend we do not do it.

El Nino ties with my home town Darwin.

Biggest cover up of temp anomalies down the road at Rutherglen? Nick Stokes nearby in Moyhu.

One of the 6? Antarctic owning countries.

Love Australia.

But have been O/S, rest of the world is pretty good as well.

wuwt recent changes, might be worth sending a sympathy message to Anthony, ATTP. His post on the fire effects is incredible.

Having Nick Stokes, Rudd and maybe Mosher giving some posts there.

JCH says:

“Around the Super Disinformation Highway there is this notion that Resplandy was under orders to find more ocean warming.”

They found a lot more warming JCH. Nobody seemed to be complaining that it was too high at the time as I recall. Why was that?

angech,

I hadn’t seen that. My sympathies to anyone who has been caught up in the fires in California, including Anthony.

“They found a lot more warming JCH. Nobody seemed to be complaining that it was too high at the time as I recall. Why was that?”

Errm, ISTR there were several climate scientists that were skeptical about it on Twitter. Most scientists however tend to investigate these things properly before commenting, which takes time, rather than just go to their favourite blog and spread some partisan rhetoric, as you have just done.

What ATTP said.

angech said

“wuwt recent changes, might be worth sending a sympathy message to Anthony, ATTP. His post on the fire effects is incredible.”

I think it’s better advised that Watts just shut down his blogging operation. The number of people unaccounted for in the wildfires keeps rising and all that WUWT is doing is spreading misinformation on what the warming in California has been over the last 100 years.

After correcting all the errors from earlier posts, this is the current chart that Watts and Eschenbach are showing for average California temperatures. Look at the y-scale:

Contrast that to what Resplandy et al have accomplished with their fine research.

Well, obviously. Presumably on the orders of the Alien Space Lizards, intermediated by the Illuminati.

Well, as has been pointed out angech, “nobody” would seem to be something of an exaggeration. More to the point,

Their initial estimate of 1.33 ± 0.20 x 10^22 J/yr overlapped previous estimates, so they didn’t find “a lot more warming”. Just a hunch, but that may be why people weren’t “complaining that it was too high at the time” (apart from the usual suspects of course). What a shame they didn’t make it clear in the abstract that it was not a new finding outside the range, but a new finding at the high end of the existing range. Oh, hang on a minute:

Their revised estimate of 1.21 ± 0.72 x 10^22 J/yr of course remains at the high end of the existing range, but with more uncertainty (which, like stock prices, goes up as well as down).

Always a good idea to go to the horse’s mouth, and not rely on the Super Disinformation Highway.

“Their revised estimate of 1.21 ± 0.72 x 10^22 J/yr of course remains at the high end of the existing range, but with more uncertainty”

1.21 x 10^22 J/yr compares with 1.09 x 10^22 J/yr from the other four data sets for 1993-2010 (the IPCC AR5 WG1 OHC data era). That works out to an ~11% increase using the current data sets, in other words, a true apples-to-apples comparison (there are also issues with their IPCC OHC selection from Chapter 3: 0-700 m ocean depths versus the entire ocean depth). So not anywhere near a “so called” 60% increase over previous estimates (yeah, it’s like me pulling up OHC data circa 1950 and claiming an infinite increase over that old estimate).

Their new sigma is 0.72 for the slope error, which makes their new slope just barely pass the 90% confidence interval. (1.21 – 1.09)/0.72 = one sixth of the one-sigma error bar of 0.72!
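The arithmetic in this comment can be checked with a few lines. This is only a sketch using the figures quoted in the thread; the variable names are made up for illustration, and the 1.645 threshold assumes normally distributed errors and a two-sided 90% interval.

```python
# Figures quoted in the thread, in units of 10^22 J/yr.
revised_estimate = 1.21   # revised Resplandy et al. central estimate
revised_sigma = 0.72      # revised one-sigma uncertainty
other_datasets = 1.09     # quoted value from the other four data sets, 1993-2010

# Percentage increase of the revised estimate over the other data sets.
increase_pct = (revised_estimate / other_datasets - 1.0) * 100.0

# Gap between the two estimates, expressed in units of the revised sigma.
gap_in_sigma = (revised_estimate - other_datasets) / revised_sigma

# How many sigma the revised trend sits above zero.
trend_in_sigma = revised_estimate / revised_sigma

# Two-sided 90% threshold for a normal distribution (assumption: normal errors).
Z_90 = 1.645

print(f"increase over other data sets: {increase_pct:.1f}%")
print(f"gap between estimates: {gap_in_sigma:.3f} sigma")
print(f"trend vs zero: {trend_in_sigma:.2f} sigma (threshold {Z_90})")
```

On these numbers the trend is only marginally distinguishable from zero at the 90% level, while the difference from the other data sets is a small fraction of one sigma.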

Why was that?

Because it was one study using a novel methodology. Take a look at who brings up Zwally as though he proved something for once and for all, and who waited to see if he was corroborated.

#2 story on Fox News website.

https://www.foxnews.com/science/error-in-major-climate-study-revealed-warming-not-higher-than-expected

Thanks ATTP.

Sure it will be appreciated.

JCH says: Why was that? Because it was one study using a novel methodology.

That is a good reason. I think most people who read it appreciated the concept. Each time a variation in how to put old data into a form that helps validate a seemingly unrelated concept is to be applauded.

Everett F Sargent “So not anywhere near a “so called” 60% increase over previous estimates”

Are you sure where that 60% comes from? It may not help your argument.

Joshua: The Fox news story is a minor blip on the screen compared to…

https://www.theguardian.com/world/2018/nov/15/brazil-foreign-minister-ernesto-araujo-climate-change-marxist-plot

Time to Get Cereal?

Nobody Got Cereal?

Ah, what’s just another five years anyways?

While my politics have evolved over the years, one of my most firmly held beliefs is that people are idiots, and that was fully on display in ‘Nobody Got Cereal?’

JH –

Gotta say, that’s the first time I’ve seen evidence that hatred of heterosexual sex is directly linked in with the hoax. Although I can’t say I’m surprised. I mean it’s been clear for a long time that all those commie, progress-hating, one world government loving, pro-child starving climate scientists engaged in battle with righteous, truth seeking, pure science-loving, pro-child feeding “skeptics” were limp-wristed, so it only stands to reason that they’d hate hetero sex.

Thanks for the Fox News link on the Resplandy/Lewis story, with Keeling setting things straight. Found that it also includes some peddling! 🙂

“Imagine if the ocean was only 30 feet deep,” said Resplandy, in a news release from the Princeton Environmental Institute that accompanied the study.

“Our data show that it would have warmed by 6.5 degrees Celsius (11.7 degrees Fahrenheit) every decade since 1991”

At least the accompanying SLR would have been small.

No. The thermal expansion coefficient for water, unusually, rises very significantly with temperature.

If all the heat went into the top 30ft of ocean, sea level rise would therefore be higher than if it were distributed over a deeper column of colder water.

angech is also ignoring the contribution due to ice sheets which, as far as I’m aware, are likely to dominate sea level rise.

AT, sure, though IIRC thermal expansion is actually the largest component to date.

Mainly, Angech is showing his lack of understanding of physics. One might expect such an obvious lacuna to mediate more time listening to experts in the subject rather than opining on their perceived inadequacies …

Thermal expansion is projected to be the largest component of sea level rise for a century or more to come:

vtg,

The largest individual component, but not larger than the combination of all the other sources. Even now, I think that thermal expansion only makes up about one-third of current sea level rise (unless I’m missing something).

That’s my understanding, but I might also be missing something…

Judith’s latest post on this is pretty funny. While running a blog full of comments about frauds and “alarmists,” blah, blah, she writes to explain she doesn’t know what hostility is referenced when people speak of a hostile environment.

Which is topped off with comments about how climate scientists (at least the ones she doesn’t agree with) should put on their big boy pants (paraphrasing) and stop “whining” about hostility, after her years of whining about being called a “denier.”

What a rare event to see people dealing with these issues with introspection, humility, good faith, charity…rather than pettiness, partisanship, antipathy, childishness, defensiveness…

…sad…

Earth’s oceans have absorbed maybe 11 percent more heat per year than previously thought

https://www.princeton.edu/news/2018/11/01/earths-oceans-have-absorbed-60-percent-more-heat-year-previously-thought

“Imagine if the ocean was only 0.3 feet deep,” said Resplandy, who was a postdoctoral researcher at Scripps. “Our data show that it would have warmed by 444 degrees Celsius [800 degrees Fahrenheit] every decade since 1991. In comparison, the estimate of the last IPCC assessment report would correspond to a warming of only 400 degrees Celsius [720 degrees Fahrenheit] every decade.”

Just a slight edit of the original PR statement to reflect their current corrections. 😦
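The numbers in that edited statement follow from simple scaling, sketched below. The 4 degrees Celsius per decade IPCC-equivalent figure for a 30-foot ocean is an assumption back-derived from the 400 degrees quoted at 0.3 feet (it is not stated in this thread), and the 1.11 ratio is the ~11% increase discussed above.

```python
# Scaling the "shallow ocean" illustration from 30 feet to 0.3 feet.
ORIGINAL_DEPTH_FT = 30.0
EDITED_DEPTH_FT = 0.3
IPCC_WARMING_C_AT_30FT = 4.0   # assumption: back-derived from 400 C at 0.3 ft
REVISED_TO_IPCC_RATIO = 1.11   # the ~11% increase discussed in the thread

# Same heat into a layer 100 times thinner gives 100 times the warming.
depth_scale = ORIGINAL_DEPTH_FT / EDITED_DEPTH_FT

ipcc_warming_c = IPCC_WARMING_C_AT_30FT * depth_scale
revised_warming_c = ipcc_warming_c * REVISED_TO_IPCC_RATIO


def celsius_change_to_fahrenheit(delta_c: float) -> float:
    """Convert a temperature change (not an absolute temperature) to Fahrenheit."""
    return delta_c * 1.8


print(f"IPCC-equivalent: {ipcc_warming_c:.0f} C "
      f"({celsius_change_to_fahrenheit(ipcc_warming_c):.0f} F) per decade")
print(f"Revised estimate: {revised_warming_c:.0f} C "
      f"({celsius_change_to_fahrenheit(revised_warming_c):.0f} F) per decade")
```

The revised figure converts to about 799 degrees Fahrenheit, which the comment rounds to 800.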

Decadal Ocean Heat Redistribution Since the Late 1990s and Its Association with Key Climate Modes