Something I’ve mentioned here quite regularly is the idea that warming depends roughly linearly on cumulative (total) emissions. This is slightly counterintuitive, in that warming depends logarithmically on atmospheric CO2 concentration. The reason is essentially that the relationship combines climate sensitivity (which depends on changing atmospheric concentrations) and carbon cycle feedbacks into a single quantity. It seems that the airborne fraction is expected to increase so as to compensate for the logarithmic dependence on atmospheric CO2 concentration. In other words, the expectation is that if we double how much we’ve emitted, we’ll more than double the human contribution to the atmospheric CO2 concentration.
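To illustrate why a constant airborne fraction would not give a linear relationship, here is a minimal sketch. It assumes a purely logarithmic response to concentration. The pre-industrial concentration (280 ppm), the conversion of about 2.12 GtC per ppm, the TCR of 1.8°C per doubling and the airborne fraction of 0.45 are all illustrative assumptions, not values taken from any of the papers.

```python
import math

PREINDUSTRIAL_PPM = 280.0  # assumed pre-industrial CO2 concentration
GTC_PER_PPM = 2.12         # assumed conversion: about 2.12 GtC per ppm of CO2


def warming_constant_af(cumulative_gtc, airborne_fraction=0.45, tcr=1.8):
    """Warming (°C) if a fixed fraction of all emissions stays airborne.

    tcr is the assumed warming per doubling of CO2. All defaults are
    illustrative choices, not fitted to observations.
    """
    extra_ppm = airborne_fraction * cumulative_gtc / GTC_PER_PPM
    return tcr * math.log2(1.0 + extra_ppm / PREINDUSTRIAL_PPM)


for emitted in (500, 1000, 2000):
    warming = warming_constant_af(emitted)
    per_1000 = 1000 * warming / emitted
    print(f"{emitted:5d} GtC -> {warming:.2f} °C ({per_1000:.2f} °C per 1000 GtC)")
```

In this toy setup the warming per 1000 GtC falls as emissions grow, so doubling the emissions less than doubles the warming. A roughly constant TCRE therefore needs something, such as a rising airborne fraction, to offset the logarithm.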

There are a number of papers that have considered this and the general result is that it appears to be a reasonable relationship for most realistic future emission pathways, although it might over-estimate the warming from the highest emission pathway. The quantity is called the transient response to cumulative carbon emissions (TCRE) and is thought to have a range of 0.8 to 2.5°C per 1000 GtC for real emission pathways, and 1 to 2°C per 1000 GtC for a 1% per year CO2-only emission pathway. The reason for the difference is simply that the real emission pathways include non-CO2 GHGs, while the TCRE is defined in terms of the CO2 emissions only.
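Since the TCRE is just a proportionality constant, the arithmetic is simple. The sketch below is only that arithmetic: the function names are mine, the 2°C target is an example, and the middle TCRE value of 1.5 is an illustrative midpoint rather than a quoted best estimate for this range.

```python
def warming_from_tcre(cumulative_gtc, tcre_per_1000_gtc):
    """Warming (°C) implied by a TCRE given in °C per 1000 GtC."""
    return tcre_per_1000_gtc * cumulative_gtc / 1000.0


def total_budget_gtc(target_warming_c, tcre_per_1000_gtc):
    """Total cumulative emissions (GtC, counted from pre-industrial)
    that would produce the target warming for a given TCRE."""
    return 1000.0 * target_warming_c / tcre_per_1000_gtc


# Ends of the 0.8 to 2.5 range, plus an illustrative middle value.
for tcre in (0.8, 1.5, 2.5):
    budget = total_budget_gtc(2.0, tcre)
    print(f"TCRE {tcre:.1f} °C/1000 GtC -> total budget for 2 °C: {budget:.0f} GtC")
```

Note that this gives the total budget since pre-industrial times, not what remains, and that it inherits the linearity assumption discussed above.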

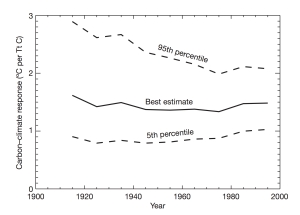

The reason I’m telling you this is that Nic Lewis has a guest post on Climate Etc. in which he is suggesting that the TCRE is quite a bit lower than other estimates suggest. The figure on the right shows his analysis (solid lines) and the IPCC values (dashed lines). Nic Lewis appears to be suggesting a best estimate for the TCRE of 1.15°C per 1000 GtC, or 0.9°C per 1000 GtC if the forcing is CO2 only. His analysis suggests much less warming (along the same emission pathways as used by the IPCC).

The reason, I think, for his result is pretty straightforward. His underlying model has a low TCR (about 1.35°C) and he is assuming that the carbon cycle feedbacks are on the low end of the range. The carbon cycle feedbacks essentially relate to how the carbon sinks respond to increased CO2 and to increased temperatures. If they’re on the low side, then the sinks are not significantly influenced by increased CO2 levels and warming, and the airborne fraction will remain roughly constant. Hence, the logarithmic nature of CO2 is not compensated for by an increasing airborne fraction. So, the overall warming is reduced because of the lower TCR and because of the weaker carbon cycle feedbacks. A double-whammy.

As I understand it, this is all within the realms of possibility, so it could well be what happens. However, discovering that one can develop a plausible model that suggests that warming will be on the low side is not really evidence that it will be. Also, bear in mind that there is probably something like a range of ±0.5°C on either side of the values presented by Nic Lewis, so even his lower estimates don’t rule out greater than 2°C, even along an RCP6 emission pathway.

Okay, I’ve managed to bumble through this post a bit. What I wanted to highlight was that, as usual, Nic Lewis’s work is being highlighted as being observationally based. However, the figure on the left (from Matthews et al. (2009)) shows TCRE determined from 20th century observations (based on warming and CO2 emissions relative to 1900-1909). The range varies from about 1°C to over 2°C per 1000 GtC (depending on the time period considered), with a best estimate of about 1.5°C per 1000 GtC. It is pretty similar to the IPCC range I mentioned earlier, and quite a bit more than Nic Lewis’s estimate of around 1.15°C per 1000 GtC. Also, the break in Nic Lewis’s graphs seems to suggest that he thinks we will go from a TCRE of probably around 1.5°C per 1000 GtC to one around 0.5°C per 1000 GtC, starting about now. A little odd, as many others seem to think that global warming is probably going to start accelerating.

I guess we will know in the next few decades if Nic Lewis’s suggestion is correct. On the other hand, we’ll also know, in the next few decades, if the 2°C budget really is around 300 GtC. Given how we appear to be unwilling to do anything to actually cut emissions, I’m hoping Nic Lewis is correct. I’m not hopeful, though. I also think it would be better to consider all the evidence, not just select what gives us what we’d like to see, but maybe that’s just me.

This is slightly counter intuitive, in that warming depends logarithmically on atmospheric CO2 concentration

The relationship is also not a logarithmic function of cumulative emissions because we did not start with a CO2 concentration of zero.

May I ask the BBD question: is the estimate of Nic Lewis consistent with the observational estimates from paleo studies?

Victor,

Yes, but I think that if the airborne fraction remained constant, then the warming wouldn’t (I think) depend linearly on cumulative emissions.

I’ll let BBD answer his question.

BBD likes to answer: “but paleo”.

Let’s add a Victor question. Is the Nic Lewis estimate consistent with our physical understanding of the climate system?

The IPCC range is consistent with our understanding of the radiative properties of CO2, of the increase in humidity that goes with the warming and with the albedo feedback from less snow and ice due to warming. If the IPCC range would not have fitted to this physical understanding, I would have said that we do not really have a solid case yet.

It is fine to produce a fun outlier result the way Nic Lewis did, but I only start to see it as part of our scientific understanding of the climate system when we understand the physical reasons why the climate sensitivity would be so low. Until then, I will see it as an outlier and quite likely an indicator that the method he is using is quite sensitive to the assumptions.

Everything should fit together. We only have one reality.

Exactly. It’s important to understand the likely future realities, not choose one that might seem nice, but doesn’t end up matching reality.

In others words, the expectation is that if we double how much we’ve emitted, we’ll more than double the atmospheric CO2 concentration.

Not quite, because, as Victor pointed out, we didn’t start from zero. Perhaps just add a few words, e.g.:

In others words, the expectation is that if we double how much we’ve emitted, we’ll more than double the human contribution to atmospheric CO2 concentration.

Andy,

Thanks, yes, of course. I see now what Victor was getting at. Always obvious in retrospect 🙂

Oh, and I’ve edited the post as you suggested.

Re Paleo; the evidence is scanty and open to uncertainty(sic) but the much discussed lag in the rise of CO2 before the warming from a glacial period is also present in the fall of CO2 as the interglacial cools into the peak glacial period. In the last cooling period ~120,000 years ago the CO2 levels lagged ~5000 years behind the temperature to drop 40 ppm.

Of course this time the CO2 rise is much more rapid and we will not be starting from any comparable paleo situation when (if) CO2 emissions reduce by 90%. Therefore NL has the liberty to invent whatever tenuously credible version of the carbon cycle under these exceptional conditions he desires.

Or if the analysis is likely to be accepted as unbiased, include the mainstream and opposite extreme (paleo?) conditions as alternatives or error ranges.

(the link was irresistible!)

We only have one reality.

I’ve run into an awful lot of folks who don’t seem to grasp this basic, fundamental fact. They seem to subscribe to a “selective reality” model of the universe.

ATTP:

Nic’s climate sensitivity was not just picked at random. Nic said (in his guest post):

“I select the simple ESM’s key climate, and land and ocean carbon-cycle, sub-model parameters so that its simulated global temperature, heat uptake and carbon-cycle changes since preindustrial best match recent observational estimates, sourced largely from AR5.”

So his model parameters are observationally constrained.

I think a key point to ponder is that observationally constrained work tends to a low sensitivity value, while quite a few of the global climate models project an accelerating warming with higher sensitivity numbers.

As you point out – we will know in a few decades who is correct.

I have laid in a 30 year stock of popcorn and am watching avidly to see who turns out to be correct in this debate.

One thing we know for sure is that it is pretty hard to make predictions – especially about the future (grin).

Yes, which is why I wrote what I wrote. I didn’t say it was picked.

Well, there are other observationally constrained estimates that are higher (see Cawley et al., for example).

There are plenty of plausible arguments as to why this is the case. I won’t list them again, as I’ve grown tired of doing so.

Indeed, and we can’t rule out the chance that in 30 years we will say “oh, shit!”

Well, that’s partly why we call them projections. That it is hard, and uncertain, isn’t an argument for ignoring what is being suggested.

Victor

No 😉

For a comprehensive evaluation of the evidence spanning the Cenozoic see Rohling et al. (2012) which estimates a range of 2.2K – 4.8K per doubling of CO2.

Victor writes:

And that’s the problem with (and for) lukewarmerism.

RickA: I think a key point to ponder is that observationally constrained work tends to a low sensitivity value, while quite a few of the global climate models project an accelerating warming with higher sensitivity numbers.

The comprehensive climate models match the observational constraints just as well.

It is thus not a comparison between an “observational method” and climate modelling, but between a highly simplified statistical model and a comprehensive physical model. As long as the statistical model gives results that are unphysical according to our best current understanding, I know what I see as the most likely resolution of this discrepancy.

Victor:

How can Nic’s results be unphysical if they fall within the IPCC range?

What I find unpersuasive is that 100% or 110% of the warming since 1950 has been caused by the human emissions of CO2. That assumption is what leads to such high sensitivities.

That strikes me as unphysical.

Perhaps we will (in 20 or 30 years) find out that 1/2 of the warming (since 1950) is natural and the other half caused by human emissions. Again – we will see.

I also note that the sinks are taking up more of the emitted CO2 than was forecast.

I read stuff every day which shows that nature is reacting in ways which are different than we thought it would – all of which need to be put back into model tweaks.

More snow and therefore more mass on Antarctica than expected.

Corals growing better than expected over the last couple decades.

Arctic ice rebounding more than expected.

Europe colder than expected.

And on and on.

Perhaps in 20 or 30 years the models will be better than they are today, and projections drawn from them will be more accurate.

They sure don’t feel accurate now.

Bottom line is that there are a lot of wild guesses being published and only time and more data will sort out who is correct and who is wrong.

I lean strongly toward a low climate sensitivity based on what I have read (say an ECS of 1.5 to 1.8C per doubling). But only time will tell.

I am not against taking action – I just want the action we propose to take to be subjected to a decent cost/benefit analysis.

I think we should really ramp up electrical production with nuclear power and try to get to 75% in the USA instead of 20% ish. As we reach end-of-life for coal power plants, why not replace them with nuclear?

I would also like to see some research done on non-carbon producing power technology development which is cheaper than coal, natural gas or oil. Power storage also. The more renewables we deploy the more important power storage becomes.

If we throw 20 billion per year at these issues for a decade I bet we make some progress.

Maybe fusion will become economical.

Maybe space based solar will become a reality and hopefully cheaper than hydrocarbons.

Imagine manufacturing mirrors in space from asteroids we mine and just harvesting all that extra solar radiation (the stuff which just misses the Earth now). There is a ton of money to be made working on that. Free power from space – available 24/7 365 days a year.

Congress just passed an asteroid mining law and I believe President Obama is planning on signing it.

I am very optimistic about the future.

No, it doesn’t. Are you sure you know what you’re talking about?

There are many things we will understand better in 30 years time, including whether or not we should have started doing something now, rather than waiting.

Whereas virtually every climate scientist believes it is not unphysical.

So, what to believe: what “strikes ” Rick, or what climate science supports. Hmm.

It’s a tough one, I grant you. I’ll get back to you later.

Looking at the graph I feel like I must be missing something. Why are cumulative CO2 emissions in Lewis’ curve greater than the IPCC curve at all the decadal average points?

RickA,

What I find unpersuasive is that 100% or 110% of the warming since 1950 has been caused by the human emissions of CO2. That assumption is what leads to such high sensitivities.

That strikes me as unphysical.

Can you explain why you believe this is unphysical?

paulskio,

I’d missed that. Yes, I don’t understand that either. I thought he was using the same emission pathways (rather than the same concentration pathways) and, hence, you’d expect the cumulative emissions to be the same at each decadal average point.

RickA: “How can Nics results be unphysical if they fall within the IPCC range?”

That formulation was a bit sloppy. I wanted to briefly repeat what I had written before: “The IPCC range is consistent with our understanding of the radiative properties of CO2, of the increase in humidity that goes with the warming and with the albedo feedback from less snow and ice due to warming. If the IPCC range would not have fitted to this physical understanding, I would have said that we do not really have a solid case yet.”

A result can be wrong while being in the right range. If you are confident that you will get a 1 every time you throw a 6-sided die, you are wrong, but it is in the right range.

paulskio

“Why are cumulative CO2 emissions in Lewis’ curve greater than the IPCC curve at all the decadal average points?”

ATTP

” Yes, I don’t understand that either. I thought he was using the same emission pathways (rather than the same concentration pathways) and, hence, you’d expect the cumulative emissions to be the same at each decadal average point.”

Actually, I’m surprised ATTP doesn’t understand, as I recently explained the reason for the difference in the last paragraph of an answer to him, here: http://judithcurry.com/2015/11/30/how-sensitive-is-global-temperature-to-cumulative-co2-emissions/#comment-747849

Nic,

You said,

So, I’m still confused. If the emission pathways are the same, then surely the cumulative emissions at every point in time should be the same, shouldn’t they?

Also, I will add that your response in that Climate etc. comment did the remarkable trick of saying I was wrong while repeating what I’d just said. Odd that.

ATTP,

I think what Nic’s saying is that each model used the same RCP emissions pathway as him in its simulation, but concentrations produced by those models tended to be higher* than the RCP concentrations pathway. In producing the graphic they decided to scale the emissions to be consistent with the RCP concentration at each datapoint.

* I may mean lower here

paulskio,

I’m not sure it is. As I understand it, Nic is using the same emission pathways as the IPCC’s RCPs and, because of his assumptions about carbon cycle feedback, then gets a different concentration pathway (which is a bit odd given that RCP8.5 – for example – is defined in terms of the forcing pathway, which – I think – Nic’s model no longer follows). However, I still don’t see – if each dot represents a decade – how the cumulative emissions at each decade can be different for his emission pathways than for those used by the IPCC, if they are both the same. Could it be that his cumulative emissions are decadal averages, while the IPCC values are not?

paulskio, ATTP,

You seem to have overlooked the final part of my answer:

” the CMIP5 ESM results in my figure and Figure SPM.10 use RCP concentration pathways and diagnose, in each CMIP5 model, what emission pathways would produce those concentration pathways.”

So, I use the underlying RCP emission pathways, but the CMIP5 models used the RCP concentration pathways (which had been diagnosed from the emission pathways using the MAGICC6 EMIC). Each CMIP5 ESM then worked out what emission pathway it would require to produce the RCP concentration pathway it was given. SPM.10 shows the mean of those diagnosed emission pathways (at decadal mean points, as for my model). The mean CMIP5 ESM diagnosed emission pathways differ from the original RCP emission pathways since the CMIP5 ESMs behave differently from MAGICC6.
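A toy illustration of that round trip, as a sketch only: each "model" here is reduced to a single constant airborne fraction, which is far simpler than MAGICC6 or any CMIP5 ESM. The two airborne fractions, the 280 ppm baseline and the 2.12 GtC per ppm conversion are hypothetical values chosen to show the mechanism, not to reproduce the offset in the figure.

```python
PREINDUSTRIAL_PPM = 280.0  # assumed pre-industrial CO2 concentration
GTC_PER_PPM = 2.12         # assumed conversion: about 2.12 GtC per ppm of CO2


def concentration_from_emissions(cumulative_gtc, airborne_fraction):
    """Forward step: emissions -> concentration for one toy carbon cycle."""
    return PREINDUSTRIAL_PPM + airborne_fraction * cumulative_gtc / GTC_PER_PPM


def diagnosed_emissions(concentration_ppm, airborne_fraction):
    """Inverse step: emissions a toy carbon cycle needs to hit a concentration."""
    return (concentration_ppm - PREINDUSTRIAL_PPM) * GTC_PER_PPM / airborne_fraction


original_gtc = 1000.0
# Hypothetical "pathway-building" model with an airborne fraction of 0.45.
pathway_ppm = concentration_from_emissions(original_gtc, airborne_fraction=0.45)

# Diagnosing with the same toy model recovers the original emissions;
# a hypothetical second model with a different carbon cycle does not.
same_model_gtc = diagnosed_emissions(pathway_ppm, airborne_fraction=0.45)
other_model_gtc = diagnosed_emissions(pathway_ppm, airborne_fraction=0.50)

print(f"original: {original_gtc:.0f} GtC")
print(f"diagnosed by same model:  {same_model_gtc:.0f} GtC")
print(f"diagnosed by other model: {other_model_gtc:.0f} GtC")
```

The direction and size of the mismatch depend entirely on the assumed airborne fractions, so this says nothing about which way the real models differ.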

paulskio asked me “Can you explain why you believe this is unphysical?”

Sure.

The Earth has been warming since the LIA, and all of that warming is mostly natural (at least up until about 1950).

Why did this natural warming stop?

We know that warming (whether natural or human caused) releases additional CO2 (and methane) – so some of the CO2 being added to the atmosphere is a result of the natural warming from 1750ish on. A feedback effect.

It seems likely to me, that since it has continued to warm since 1750ish or so, that whatever is causing that continues today. If some natural warming is still occurring then that natural warming will cause additional CO2 to be released from the environment (from the ocean or ground under retreating glaciers and so on).

I see no evidence to support the notion that this century scale natural warming just turned off. So that assumption strikes me as unphysical.

On a longer time frame – it has been warming since the last ice age (about 20,000 years ago).

The sea has risen 120 meters since 20,000 years ago.

I see no evidence to support the notion that this millennial scale natural warming just turned off. So that assumption strikes me as unphysical.

I agree that a portion of the warming since 1880 (and since 1950) is caused by humans.

I just don’t see any evidence to support the notion that all of the warming since 1950 is human caused.

The only reason I have read for this conclusion is that it is the only explanation which makes the models work – which is not a very good reason in my opinion. Especially since actual observations are quite a bit cooler than the model output (of most of the models and the model mean).

There are still naturally occurring forcings (orbital forcings for one, el ninos are still happening as a second example) – and I see no evidence they have turned off – so therefore they have to still be affecting the Earth – on all their various timescales.

The warming effects from el nino are not human caused – correct?

So how can we discount the warming from el nino and conclude it has no effect – but that all warming is caused by humans?

So 100% or more strikes me as unphysical.

50/50 or 25/75 or 75/25 I could buy – but not all of the warming.

It just makes no sense to me.

Hope that explanation helps you understand my view on this matter.

Nic,

Yes, that makes sense, which is what I initially said in the comment to which you responded with “no you’ve got it the wrong way around”.

Again, yes, this is what I was suggesting when you responded with “no you’ve got it the wrong way around”.

Ahh, I see, so the emission pathways in your figure are not the same. Well, that was easy enough. Pity you didn’t say that earlier.

RickA,

Well, that’s just silly. Some things do cause cooling.

Maybe, but this isn’t to your credit.

Forget the go-forward emissions, which is confusing enough.

How can the “historical” cumulative anthropogenic CO2 emissions be different for the two plots? How can the “historical” temperature anomalies be different??

rust,

Yes, I’d missed that too. That is rather strange. Maybe Nic can explain that one?

Why did this natural warming stop?

Right around the time Nathaniel Hawthorne’s “The Scarlet Letter” was published. After the affair, things cooled off for 60 years.

ATTP:

You said “Well, that’s just silly. Some things do cause cooling.”

True.

However, the net of all the warming things and all the cooling things is a warming of the Earth from 1950 to the present. I doubt we disagree on that point.

100% of that net warming from 1950 on is caused by humans (according to some).

Because of aerosols emitted by humans, which cause cooling, but for our aerosols it would be even warmer – which is how we get to 110% of the warming is caused by humans (since 1950).

Perhaps I am being too literal.

But to me this means that the el nino isn’t warming California right now (or anywhere else).

How can there be natural warming if 100% of the warming is caused by humans?

el ninos are not caused by humans – they are natural – therefore since 1950 they no longer warm.

What is your understanding of the meaning of 100% of the warming from 1950 on is attributed to humans?

I am fine with some warming being natural (say the current el nino) and some caused by humans – but I really don’t get this 100% unnatural warming theory.

ATTP,

When you wrote “As I understand it RCPs are concentration pathways from which emission pathways are then determined. Presumably here you’re using the emission pathways, not the concentration pathways.”

and I responded

“No, you’ve got it the wrong way round.”

I meant that you had got it the wrong way round in your first sentence, not in your second sentence. I think that was clear from how I continued:

“The RCP concentration pathways are determined from the RCP emission pathways, using a single EMIC. See Meinshausen et al 2011. My model uses the RCP emission pathways, but the CMIP5 ESM results in my figure and Figure SPM.10 use RCP concentration pathways and diagnose, in each CMIP5 model, what emission pathways would produce those concentration pathways.”

rustneversleeps and ATTP

How can the “historical” temperature anomalies be different??

Simple. As stated just above the graph in my post at Climate Etc:

“the black lines show simulation results up to 2000–09.”

So, these are, model simulated, not observed, temperatures. The CMIP5 models simulated a higher GMST rise than that observed per HadCRUT4 (horizontal pink line), whereas my model, being observationally-constrained by that temperature data, matched its rise to 2000-09.

So the historical cumulative anthropogenic CO2 emissions are simulated as well??

Rick A., It isn’t that you are being too literal. It is that you are ignoring physics. What the physics says is that when you have a forcing that increases, the planet warms. As it warms, it emits more IR radiation–the amount determined to first order by the temperature. It continues to warm until the increased outgoing IR matches the increase in the original forcing that triggered the effect. Yes, there are feedbacks, positive and negative, but that is the gist of the process.

It is likely that the LIA was caused by increased volcanic aerosols and a decrease in solar output. Once the volcanic aerosols rain out (a matter of years) and the solar output goes back to “normal”, the temperature then rises, but only to the point where outgoing IR restores equilibrium.

Warming doesn’t happen without adding energy. We know CO2 as a greenhouse gas adds energy. That CO2 would warm the planet was predicted clear back in 1896 by Arrhenius. That we are seeing warming now ought not to be surprising unless you weren’t paying attention over the last 120 years.
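The equilibration described in this comment can be sketched with a zero-dimensional energy balance model. This is a minimal illustration, not anyone's actual model: the feedback parameter (1.2 W/m² per K), the effective heat capacity (8 W yr/m² per K) and the 3.7 W/m² forcing (a commonly quoted approximate value for doubled CO2) are all assumed.

```python
def equilibrate(forcing, feedback=1.2, heat_capacity=8.0,
                years=200, steps_per_year=10):
    """Integrate C dT/dt = F - lambda * T with a simple Euler scheme.

    forcing       : constant radiative forcing F (W/m^2)
    feedback      : lambda, extra outgoing radiation per degree (W/m^2/K), assumed
    heat_capacity : effective heat capacity C (W yr/m^2/K), assumed
    Returns the temperature anomaly (K) after the given number of years.
    """
    dt = 1.0 / steps_per_year
    temperature = 0.0
    for _ in range(years * steps_per_year):
        imbalance = forcing - feedback * temperature  # net energy gain
        temperature += dt * imbalance / heat_capacity
    return temperature


forcing = 3.7  # assumed forcing, roughly that of doubled CO2
print(f"after 200 years: {equilibrate(forcing):.3f} K")
print(f"F / lambda:      {forcing / 1.2:.3f} K")
```

The temperature rises until the extra outgoing radiation balances the forcing, and then stops at F / lambda. With no forcing, nothing happens.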

@-“The Earth has been warming since the LIA, and all of that warming is mostly natural (at least up until about 1950).”

The warming from, and cooling into, the LIA are not ‘Natural’. The MWP-LIA change is rather smaller on a global scale than that measured since 1950. They have real physical causes, the 2LoT requires that. A good deal is known about those causes, that you are not aware of the causes of the LIA and its end can be corrected with a little work.

@-“On a longer time frame – it has been warming since the last ice age (about 20,000 years ago). …I see no evidence to support the notion that this millennial scale natural warming just turned off. So that assumption strikes me as unphysical.”

That conflates two climates, a cold glacial period and the present interstadial. The Milankovitch trigger for the warming in the middle of your timescale from the glacial is well established.

In the ~8000 years since the peak warmth the global temperature has slowly dropped by about a degree (with lots of noise) as is similarly seen in every past paleoclimate record of the glacial cycles.

Until now. There is no evidence of a change in the inherent (‘natural’?) forcings that are expected in a glacial cycle, to explain the recent rapid rise back to Holocene peak temperatures. There is however this whopping and coincidental rise in anthropogenic CO2.

And the numbers fit, using the best guess we have. In fact the CO2 rise could have caused a slightly larger rise in temperature than that measured. So then other factors must have cooled/negated part of the CO2 impact.

@RickA

Of course there’s natural warming—and cooling—and it’s always there, caused by such things as small changes in Earth’s tilt and orbit (slow acting); in the sun’s radiance and volcanic activity (fast acting), but it’s observed and is accounted for.

On the other hand, atmospheric CO2 concentrations have risen over the last 100 years to levels not seen for at least 800,000 years and, lo and behold, the warming which physics tells us the greater concentration would be expected to create matches the observed increase. So if you want to attribute the warming to natural causes, please can you explain how the amount of increased atmospheric CO2 recorded did not produce the warming we would anticipate?

RickA wrote: “We know that warming (whether natural or human caused) releases additional CO2 (and methane) – so some of the CO2 being added to the atmosphere is a result of the natural warming from 1750ish on.”

Rick is conveniently forgetting the “CO2 lags temperature” meme here, meaning he is about 700 years too early to use the CO2 feedback as his argument.

RickA: “I see no evidence to support the notion that this century scale natural warming just turned off.”

Really? Ever notice how the solar sunspot proxy peaked circa 1960:

Rick: “it has been warming since the last ice age (about 20,000 years ago).”

Ah, no, it has not. Orbitally forced peak post-glacial warming was 8000-6000 years ago during the Holocene Climate Optimum. Global mean temperature has been slowly declining ever since, albeit with extended episodes above and below that trend. The fact is over just the last half century we have reversed all of that long term decline:

Rick specifically cites orbital forcing as a continuing natural forcing. Pity it is of the wrong sign to support his argument.

Rick also cites el nino. Pity that ENSO is not a forcing.

In short, Rick’s view on this matter is clearly based on misunderstanding.

@-“The RCP concentration pathways are determined from the RCP emission pathways, using a single EMIC. See Meinshausen et al 2011. My model uses the RCP emission pathways, but the CMIP5 ESM results in my figure and Figure SPM.10 use RCP concentration pathways and diagnose, in each CMIP5 model, what emission pathways would produce those concentration pathways.”

Yes. It is clear a large part of the offset is created by converting from emissions to concentrations by one method, and then back from concentrations to emissions by another method.

@-“So, these are, model simulated, not observed, temperatures. The CMIP5 models simulated a higher GMST rise than that observed per HadCRUT4 (horizontal pink line), whereas my model, being observationally-constrained by that temperature data, matched its rise to 2000-09.”

Total emissions to date are less than 500Gt. The current anomaly above the pre-emission era is close to (possibly over) 1°C, a level the solid lines do not reach until almost 1000Gt has accumulated. The observational constraints may have shifted.

@Nic,

I really do hope your model takes ENSO into account, so that the drastic GMST spike that we’re gonna witness won’t cause any sudden mismatch …

K.a.r.S.t.e.N: … and solar variance, and volcanoes…

> Nic’s climate sensitivity was not just picked at random.

Nobody said otherwise, and it’s the opposite of “picked at random” that is lukewarmingly troublesome.

izen:

You said “The warming from, and cooling into, the LIA are not ‘Natural’. The MWP-LIA change is rather smaller on a global scale than that measured since 1950.”

What is your definition of “natural”?

Mine is everything which affects the climate except human causes, like human CO2 emissions, human land use changes, and other human effects.

So “natural” is changes in the sun, heliosphere, magnetic coupling, natural fires, volcanoes, orbital variations, clouds, ocean currents, etc.

So I see the LIA and the MWP as being natural climate changes – i.e. not caused by humans.

Please let me know if you disagree.

Secondly, I see a change of almost 1C between the peak MWP and the bottom of the LIA – which is more than the warming since 1950 (but not much).

I do question how much of the warming since 1950 is adjustments to the land temperature record. I do not know the answer to this but have seen that the past was cooled and the present warmed – but am not sure of the magnitude of those changes since 1950.

It would be interesting to see what the raw temperature readings (unadjusted) show from 1950 to the present.

Jim said “Rick is conveniently forgetting the “CO2 lags temperature” meme here, meaning he is about 700 years too early to use the CO2 feedback as his argument.”

Ok – how much of the warming last century and this century is from CO2 released due to the MWP? That ended about 700 years ago.

izen says: “The observational constraints may have shifted.”

I predict that that sentence will become a common refrain in the not-too-distant future.

Meanwhile, the growth of political pragmatism:

http://www.commerce.senate.gov/public/index.cfm/2015/12/data-or-dogma-promoting-open-inquiry-in-the-debate-over-the-magnitude-of-human-impact-on-earth-s-climate

I appreciate all the corrections to my understanding which have been shared.

I noticed nobody has taken a crack at answering my question “What is your understanding of the meaning of 100% of the warming from 1950 on is attributed to humans?”

I am very skeptical of this assertion.

Nic,

If it interests you, could you post your Anthropogenic CO2 emissions (inputs) and the corresponding ppm outputs from your model? I’d like to check them against my model. Also, if you can post a picture of your CO2 decay profile (if your model generates one), I could mimic it in my own model. Mainly, I would like to see for myself how well it backtests against fairly well known emissions and concentrations. My own decay profile, I mimicked from the one in a Joos paper which seems pretty well accepted, and then I adjusted it to get a perfect backtest, but my emissions data only include FF emissions, i.e., no biosphere emissions. But as you can see, the results square well with what other models say.

Here’s my decay profile and a couple experiments I ran:

https://googledrive.com/host/0B6KqW0UlivnVVks4cnN1THhYR3M

RickA: “I do question how much of the warming since 1950 is adjustments to the land temperature record. I do not know the answer to this but have seen that the past was cooled and the present warmed – but am not sure of the magnitude of those changes since 1950.”

The adjustments over land make global warming about 0.2°C larger. The adjustments of the sea surface temperature make global warming smaller. In total the adjustments make global warming about 0.2°C smaller.

Which direction the adjustments go does not tell you anything about global warming. That is only relevant if you expect a conspiracy.

RickA: “It would be interesting to see what the raw temperature readings (unadjusted) show from 1950 to the present.”

A well chosen time. After 1950 the adjustments make nearly no changes to the global mean temperature (regionally they do still matter). The 0.2°C adjustments I mentioned above are before 1950.

RickA wrote: “I see a change of almost 1C between the peak MWP and the bottom of the LIA – which is more than the warming since 1950 (but not much).”

That means the MWP was only about 0.5C above the long term downward trend since the HCO, and the LIA only about 0.5C below that trend, which means each were only around half the change since 1950.

Your arguments are dissolving beneath your feet, Rick.

RickA: “It would be interesting to see what the raw temperature readings (unadjusted) show from 1950 to the present.”

And you expect anyone to take you seriously after that whopper?

http://onlinelibrary.wiley.com/doi/10.1002/2015GL065911/full


Nic,

Yes, and once again you chose to focus on an irrelevance (do you really not do this on purpose?). The point was that GCMs use concentration pathways, not emission pathways. Given that the RCPs are defined in terms of their concentration pathways, whether or not you actually know the emission pathway in advance, or work it out later, they are still defined in terms of the concentration pathway, not the emission pathway, which is what I was trying to get across. If you really aren’t trying to do this, maybe you really should try harder to think a little before going “Aha, I’ve found something to criticise”. On the other hand, if your goal is simply to promote your low climate sensitivity, and now low carbon cycle feedbacks at all costs, rather than to actually engage in a serious discussion, carry on, you’re doing a fine job.

Rick,

There’s a very good Realclimate post that discusses this, but their site seems to be down (search for “Realclimate attribution”). Also, if you look at the AR5 radiative forcing diagram, the best estimate for the change in anthropogenic forcing since 1950 is about 1.7W/m^2. So, this alone could explain more than half the observed warming. Feedbacks don’t have to be large to explain the rest. If anything, it’s harder to explain anthropogenic forcings providing less than all, than it is to explain them providing more than all.
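One way to read the "more than half" arithmetic is as a no-feedback estimate. The sketch below is only a rough check under stated assumptions: the Planck response of about 3.2 W/m^2 per K and the observed warming of about 0.65°C since 1950 are assumed round numbers, not values from the comment, and ocean heat uptake is ignored.

```python
# Rough no-feedback estimate of the warming implied by the post-1950 forcing change.
delta_forcing = 1.7      # W/m^2, the figure quoted in the comment
planck_response = 3.2    # W/m^2 per K, ASSUMED no-feedback (Planck) response
observed_warming = 0.65  # degC since 1950, ASSUMED round number

# Warming if nothing but the basic blackbody response acted on the forcing.
no_feedback_warming = delta_forcing / planck_response
fraction_explained = no_feedback_warming / observed_warming

print(f"no-feedback warming: {no_feedback_warming:.2f} degC")
print(f"fraction of observed warming: {fraction_explained:.0%}")
```

Under these assumptions the forcing alone accounts for well over half of the observed change, so only modest positive feedbacks are needed to cover the remainder.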

RickA and his beloved talking points. Again.

* * *

As regards the carbon cycle, the PETM is instructive: a (geologically) abrupt and massive release of carbon followed by ~150ka of slow relaxation.

Not exactly what one would expect if the recovery from carbon cycle perturbations is rapid and concomitant temperature forcing therefore relatively weak and of short duration.

Sorry: Source for figure.

Not enough coffee.

RickA: wrote “How can Nics results be unphysical if they fall within the IPCC range?”

Consider, for example, the cyclic model by Loehle and Scafetta, which can be made to give estimates of climate sensitivity that closely match the IPCC range, providing the gross errors of the model are corrected (especially if new errors are not introduced – mea maxima culpa – and nobody else’s). However the Loehle and Scafetta model is still unphysical because there is no plausible evidence for the cyclic component. This is a problem with statistical model fits, you can often fit the data well with an incorrect model, and draw faulty conclusions as a result. That is why I (as a statistician) tend to have more faith in a model that is based on physics and explains the observations without having been explicitly fitted to them.

There is also the point about all models should be as simple as possible, but no simpler. You can sometimes get a simple model that fits the observational data well, but it doesn’t extrapolate properly, because some important feature of the underlying system did not greatly affect the calibration period, but would be more active outside it. For instance, if we only observe the carbon cycle in a period where it is being vigorously driven by anthropogenic emissions, we can explain that without needing to model the interactions of the deep ocean and thermocline. However, such a model is not going to give a good account of what would happen if we were to stop those emissions.

Also, that your result falls within the likely range, does not suddenly make it likely. Falling within the range would imply that it is plausible, but doesn’t necessarily mean that it’s more likely than other results. The main problem is – IMO – that we want to make decisions based on what could happen in future, which involves considering everything. That one can show that warming might be low (which even the IPCC allows) does not suddenly mean that we should ignore that it might not be.

ATTP indeed, from a Bayesian perspective we should consider all possible models, weighted by their plausibility (determined by their ability to explain the observations and the prior) and use the resulting distribution to choose the course of action (e.g. by minimising the expected loss). The difficulty there is usually in deciding the prior, but at least in the Bayesian framework this needs to be stated explicitly as part of the analysis.
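The Bayesian recipe described above (weight each model by prior times likelihood, then pick the action with the lowest expected loss) can be sketched in a few lines. Everything numeric here is hypothetical: the three candidate models, the observation, the Gaussian likelihood, the quadratic damage function and the mitigation cost are placeholders chosen only to show the mechanics.

```python
import math

# Hypothetical candidate models: prior weight, hindcast of an observed
# quantity, and projected future warming (degC). All values are made up.
models = {
    "low":  {"prior": 1 / 3, "hindcast": 0.60, "future_warming": 1.5},
    "mid":  {"prior": 1 / 3, "hindcast": 0.80, "future_warming": 3.0},
    "high": {"prior": 1 / 3, "hindcast": 1.00, "future_warming": 4.5},
}
observed, sigma = 0.85, 0.15  # hypothetical observation and its uncertainty

def likelihood(hindcast):
    """Unnormalised Gaussian likelihood of the observation given a hindcast."""
    return math.exp(-0.5 * ((hindcast - observed) / sigma) ** 2)

# Posterior weight is proportional to prior times likelihood.
unnormalised = {name: m["prior"] * likelihood(m["hindcast"]) for name, m in models.items()}
total = sum(unnormalised.values())
weights = {name: w / total for name, w in unnormalised.items()}

# Hypothetical actions: an up-front cost and the fraction of warming avoided.
actions = {
    "do_nothing": {"cost": 0.0, "avoided": 0.0},
    "mitigate":   {"cost": 2.0, "avoided": 0.5},
}

def expected_loss(action):
    """Action cost plus posterior-weighted quadratic damage from remaining warming."""
    damage = sum(
        weights[name] * (m["future_warming"] * (1 - action["avoided"])) ** 2
        for name, m in models.items()
    )
    return action["cost"] + damage

losses = {name: expected_loss(a) for name, a in actions.items()}
best_action = min(losses, key=losses.get)
print(weights, losses, best_action)
```

The point of the design is that no single model is selected: the low-sensitivity model keeps its weight, but so does the high one, and the decision reflects the whole distribution. The prior appears explicitly as a field that anyone can challenge.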

Rick,

It’s more simple than you think.

The world has warmed.

If absent any human contribution, the world would have warmed anyway, then attribution is 100% human.

The assessment is that natural effects are a very slight cooling over the period, hence attribution is 100-110% human.

Dunno what happened there. Last post should have read as follows- an Eddie from moss would be appreciated :

Rick,

It’s more simple than you think.

The world has warmed.

If absent any human contribution, the world would have warmed anyway, then attribution is 100% human.

The assessment is that natural effects are a very slight cooling over the period, hence attribution is 100-110% human.

vtg,

I’m not seeing the difference between the two posts, and I don’t follow the “If absent any human contribution” argument. “Eddie from moss” is very funny, though.

For some weird reason, there’s a whole paragraph it’s refused to post in the middle of the post. It’s done it twice. It’s also removing “greater than” and “less than” signs.

*penny drops * the greater than and less than signs have been interpreted as html, thereby removing the paragraph between them
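The failure described here is easy to reproduce. The sketch below uses a toy tag stripper (a regular expression, standing in for whatever the blog software actually does, which is an assumption) and a made-up sentence, and shows that escaping the signs as HTML entities before posting lets the text survive.

```python
import html
import re

def strip_tags(text):
    """Toy stand-in for a comment filter: delete anything that looks like an HTML tag."""
    return re.sub(r"<[^>]*>", "", text)

text = "a < b and c > d"  # made-up sentence, not the lost comment

# Everything between the "<" and the ">" is treated as a tag and removed.
stripped = strip_tags(text)

# Escaping first turns the signs into entities, which the filter leaves alone.
escaped = html.escape(text)
survived = html.unescape(strip_tags(escaped))

print(repr(stripped))
print(escaped)
print(survived)
```

In a comment box, typing `&lt;` and `&gt;` by hand has the same effect as the `html.escape` call.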

I’m going to give up.

vtg,

That makes sense now. Do you want me to delete anything, or are you happy to leave your “Eddie from moss” comment? 🙂

Don’t mind- you’re the boss!

If it’s possible to extract the original comment including the greater and less thans that would be nice.

It *did* make sense. Honest.

It appears to have removed that portion even when I look at the comments in the editor, so it seems that I can’t extract the original.

Things to do, time to abandon!

JCH: https://bskiesresearch.files.wordpress.com/2014/06/annan_current-climate-change-reports_2015.pdf

Apparently James Annan takes Nic seriously.

Bill,

Nic’s published work is pretty interesting and deserves to be taken seriously, and many do. However, even James has some criticisms of Nic’s choice of priors.

vtg, you could do a screen capture of the text in notepad, for example, and post the image if you have a place where the image can be linked from. I’ve been that desperate at times. tinypic used to be great but its utility has pretty much disappeared now. I’ve resorted to google drive for images, which is very easy to use, but the images don’t embed on wordpress because wordpress doesn’t have an image tag. Completely nonsensical.

You can also try some of the markdown tags from the wordpress markdown reference guide for example the code tags and the code block tags. WordPress is really fickle for such a large blog site.

ATTP, thanks.

Nic’s published work is pretty interesting and deserves to be taken seriously, and many do.

The problem is not his work, the problem is the spin outside of the scientific literature. The pretence that this outlier result equals the best scientific understanding of the climate system, ignoring all the other estimates based on many different methods and observations.

bill shockley

NL’s estimates just do not fit with what is known about palaeoclimate behaviour. I agree strongly with Victor about NL’s tendency to over-exaggerate the importance of his results (and others to echo this spin). It is misleading and unhelpful.

RickA,

Because of aerosols emitted by humans, which cause cooling, but for our aerosols it would be even warmer – which is how we get to 110% of the warming is caused by humans (since 1950).

The 100/110% includes aerosol forcing contribution. Without including aerosols it would be about 150%.
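The percentages in this exchange can be laid out as simple bookkeeping. This is a sketch that assumes the contributions add linearly and normalises the observed warming since 1950 to 1; the 110% and 150% figures are the ones quoted in the thread, while the aerosol and natural terms are merely what those two figures imply, not independently sourced values.

```python
# Observed warming since 1950, normalised to 1 (so every term is a fraction of observed).
observed = 1.00
anthropogenic_total = 1.10  # "110%", from the thread (upper end of 100-110%)
ghg_only = 1.50             # "about 150%" without aerosols, from the thread

# Implied by the two figures above, ASSUMING the terms add linearly.
aerosols_and_other = anthropogenic_total - ghg_only  # cooling from aerosols etc.
natural = observed - anthropogenic_total             # slight natural cooling

for name, value in [
    ("greenhouse gases", ghg_only),
    ("aerosols and other anthropogenic", aerosols_and_other),
    ("natural", natural),
]:
    print(f"{name:>34}: {value:+.0%}")
print(f"{'sum':>34}: {ghg_only + aerosols_and_other + natural:+.0%}")
```

A share above 100% simply means that the other terms are negative, so the total still sums to the observed warming.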

The Earth has been warming since the LIA, and all of that warming is mostly natural (at least up until about 1950).

60% of the time this would be right every time 😉

From the 1600s both simulations and proxy reconstructions indicate a general small warming up to about 1900, though perhaps not significant in the proxy data as far as it goes, on the order of 0.1degC/Century. However, it’s not at all continuous. This can be comfortably explained by known volcanic and solar forcing. Up to about 1900, yes I think warming from a generalised “LIA” is almost all natural. From 1900 to 1950 there is probably a significant anthropogenic influence, could be about half and half.

Why did this natural warming stop?

Because natural warming isn’t magical, it has a cause. Based on comparisons between forced simulations of the past millennia against historical simulations initialised by a generic pre-industrial control, I think natural warming probably accounts for about 15% of total observed historical warming since 1850. At a stretch possibly as much as 25%. However, nearly all of this occurs before 1960. The first half of the 19th Century featured extremely strong volcanic activity and the period 1920-1960 was quiet volcanically. We would therefore expect a warming trend between these periods. However, even the numbers I’m quoting here are quite dependent on a moderate sensitivity (>2C ECS). Trying to squeeze out further non-negligible warming extending to the present invokes a high-sensitivity Earth with a reasonably large ECS/TCR ratio, to supply the necessary “pipeline” warming. You’ll perhaps appreciate that’s incompatible with Nic Lewis’ model.

We know that warming (whether natural or human caused) releases additional CO2 (and methane) – so some of the CO2 being added to the atmosphere is a result of the natural warming from 1750ish on. A feedback effect.

Isn’t this essentially what Nic Lewis is arguing against?

Lukewarmers seem to treat CO2 forcing as some kind of special case, rather than just another radiative perturbation of the climate system.

While there are of course different efficacies of forcing, as far as I know they are not different enough to square the circle of lukewarmerist low sensitivity to radiative perturbation and observed (and palaeoclimate) behaviour.

The two just aren’t compatible.

…enough to square the circle of lukewarmerist low sensitivity to radiative perturbation and observed (and palaeoclimate) behaviour.

I’m not sure a low-end 1.5C ECS is necessarily incompatible with temperature evolution over the past millennium up to the present. But if you want that ECS and to also infer a large natural LIA recovery warming extending to the present I think you’re breaching the implausibility barrier.

paulskio,

I think that is an issue that many don’t get. All of the variability gives us some indication of how sensitive our climate is to radiative perturbations. It’s hard to argue for a high sensitivity to natural perturbations and a low one to anthropogenic ones.

BBD,

I agree with ATTP, that it’s interesting, from the standpoint of getting a handle on certainty. It’s different, it’s interesting, it’s a discipline (Bayesian theory). But substantively, I don’t take him seriously. I’m all on board the paleo-is-better wagon.

Hansen says 800,000 years of paleo history PROVES ECS is 3.0 +/- 0.5C. If you want to add the caveat that sensitivity may be relative to the strength of forcing, then it’s going to be stronger now, not weaker than during the last million years.

> As I understand it, this all within the realms of possibility, so it could well be what happens. However, discovering that one can develop a plausible model that suggests that warming will be on the low side, is not really evidence that it will be.

There’s a jump from possible to plausible in these two sentences. First, we need to argue that what is possible is plausible. Then we argue that what is plausible is likely.

Rather than showing that climate sensitivity might be low, what we really want is a study that shows that climate sensitivity cannot be high, that would be far more reassuring.

Willard,

I’m going to have to think about that.

Dikran,

Exactly, and a problem is that Nic Lewis’s method cannot do so, by definition. If, for example, one assumes that feedbacks are linear, then you cannot say anything about whether they are, or not. By assuming that they’re linear, he’s fundamentally assuming that our warming in future will essentially continue as it has in the past. There is, however, plenty of evidence that this is probably too simplistic and that even if the feedbacks themselves are linear, there is a time dependence in the spatial response. As I understand it, polar amplification is largely accepted and is pretty strong evidence that Nic Lewis’s assumption of linear feedbacks is clearly wrong at some level.

paulski

I think I’d agree with all of that (definitely the second sentence) but I can’t see a reason why climate sensitivity for the last ~1ka would be different than for the Pleistocene as a whole (eg. Hansen & Sato, 2012). What’s more, I can see evidence that 1.5C ECS *is* incompatible with palaeoclimate behaviour across the entire Cenozoic (Rohling et al., 2012). So by that reasoning I think we can probably discount arguments based on millennial climate behaviour ‘supporting’ an ECS of ~1.5C. Would you agree?

ATTP

It’s possible that I might win the lottery, but not plausible to the extent that the bank would approve a loan on the possibility.

Just me, but when you have this gigantic pool of cold water called the deep oceans, and this phenomena called “anomalously strong winds for two decades”, I think anybody who thinks observations of SSTs and 2 meters above the surface that include that completely abnormal mess can determine climate sensitivity – as in, I know it’s low – is off their rocker.

BBD,

Okay, yes, I get it now 🙂

JCH: Just me, but when you have this gigantic pool of cold water called the deep oceans, and this phenomena called “anomalously strong winds for two decades”, I think anybody who thinks observations of SSTs and 2 meters above the surface that include that completely abnormal mess can determine climate sensitivity – as in, I know it’s low – is off their rocker.

It’s not just you. I think Hansen has said essentially the same thing.

what we really want is a study that shows that climate sensitivity cannot be high, that would be far more reassuring.

Good point, if only because science is best at showing things wrong. Falsification is still hard, but a lot easier than being sure you are probably right about something.

Also, coming back to the graph in the blog post showing the future temperature increases: extrapolation based on statistical models is very dangerous. As dikranmarsupial wrote:

“This is a problem with statistical model fits, you can often fit the data well with an incorrect model, and draw faulty conclusions as a result. That is why I (as a statistician) tend to have more faith in a model that is based on physics and explains the observations without having been explicitly fitted to them.

There is also the point about all models should be as simple as possible, but no simpler. You can sometimes get a simple model that fits the observational data well, but it doesn’t extrapolate properly, because some important feature of the underlying system did not greatly affect the calibration period, but would be more active outside it. For instance, if we only observe the carbon cycle in a period where it is being vigorously driven by anthropogenic emissions, we can explain that without needing to model the interactions of the deep ocean and thermocline. However, such a model is not going to give a good account of what would happen if we were to stop those emissions.”

For now, as long as we do not understand the physics of the strong damping feedbacks that would be needed for the low climate sensitivity of Nic Lewis, his result is not just an outlier, but also just statistics.

This is Hansen’s amazing image “proving” ECS is 3.0C +/- 0.5C. I think it’s from around 2009.

I was thinking a little about the point that rustneversleeps made about even the historical temperatures not lining up. My understanding (which may be wrong) is that the TCRE is really the CO2 (or GHG/anthro) attributable warming divided by the cumulative CO2 emissions. If we think that natural/internal variability has provided a cooling influence over the last decade or so (and certainly the best estimate for the anthropogenic warming since 1950 is about 110% of what’s been observed) then if Nic is fitting to the observed temperature only, then he may be underestimating the CO2 attributable portion by about 10%.
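The size of that possible underestimate follows directly from the definition. In the sketch below the observed warming and cumulative emissions are placeholder values (assumptions, not data from the post); only the 110% attribution figure comes from the comment, and it is applied here as if it held over the whole period, which is itself an assumption since the figure refers to 1950 onwards. The relative result does not depend on the placeholders.

```python
def tcre(attributable_warming_c, cumulative_emissions_gtc):
    """TCRE in degC per 1000 GtC: attributable warming divided by cumulative emissions."""
    return attributable_warming_c / cumulative_emissions_gtc * 1000.0

observed_warming = 0.85        # degC, PLACEHOLDER
cumulative_emissions = 550.0   # GtC, PLACEHOLDER
anthropogenic_fraction = 1.10  # anthropogenic warming as a share of observed, from the comment

tcre_from_observed = tcre(observed_warming, cumulative_emissions)
tcre_from_attributable = tcre(observed_warming * anthropogenic_fraction, cumulative_emissions)

# Fractional shortfall when fitting to observed rather than attributable warming.
underestimate = 1.0 - tcre_from_observed / tcre_from_attributable
print(f"underestimate: {underestimate:.1%}")
```

Strictly, a 110% attribution corresponds to a shortfall of 1 - 1/1.1, a little over 9%, which is what "about 10%" refers to.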

Before you think about plausible reasoning, AT, please consider where the “it’s consistent with” can lead:

(This thread might give you an idea why there’s no real open threads at Judy’s.)

Honest brokers use “consistent with” to weasel their way out of rocky places.

***

While an outlier O can be “consistent” with the overall picture, O is still an outlier. How do we know it’s an outlier? By looking at comparables. One model doesn’t tell us much – we need to compare it to others. The very idea that one model could be plausible stretches credulity. As Steve Easterbrook has pointed out in a presentation I’ve recently seen – an isolated model ain’t that useful.

Hence my question at Judy’s, yet to be answered: would it be possible for Nic to come up with a model with an even lower sensitivity and even more modest carbon cycle that would be “consistent with” the official guesstimates?

BBD,

So by that reasoning I think we can probably discount arguments based on millennial climate behaviour ‘supporting’ an ECS of ~1.5C. Would you agree?

It seems unlikely that a truly representative ECS would be as low as 1.5C based on the full range of evidence.

ATTP,

(and certainly the best estimate for the anthropogenic warming since 1950 is about 110% of what’s been observed)

I think anthropogenic warming attribution being about 110% might be due to HadCRUT4 coverage bias. The D&A method scales modelled warming to HadCRUT4 warming, but only for grid cells with coverage, and determines a scaling factor which fits best. When producing an attributable warming estimate I would guess that scaling factor is applied to the full model global average, which would have a larger average temperature change than the HadCRUT4 covered area.
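The guess above can be illustrated with a toy grid. This is only a sketch of the suggested mechanism: the ten cells, their trends and the coverage mask are invented, area weighting is ignored, and the scaling factor is a plain least-squares fit through the origin, whereas real detection and attribution studies use more elaborate regression.

```python
# Toy grid: nine covered cells plus one uncovered, faster-warming (Arctic-like) cell.
model_trend = [0.6] * 9 + [1.2]  # modelled warming per cell, INVENTED
obs_trend = [0.6] * 9 + [None]   # observations exist only where there is coverage
covered = [True] * 9 + [False]

# Scaling factor fitted on covered cells only (least squares through the origin).
pairs = [(m, o) for m, o, c in zip(model_trend, obs_trend, covered) if c]
scaling = sum(m * o for m, o in pairs) / sum(m * m for m, _ in pairs)

# Attributable warming: scaling factor applied to the FULL model global mean.
attributable = scaling * sum(model_trend) / len(model_trend)

# Observed warming: mean over covered cells only.
observed_covered = sum(o for _, o in pairs) / len(pairs)

ratio = attributable / observed_covered
print(f"scaling = {scaling:.2f}, attributable / observed = {ratio:.0%}")
```

With these invented numbers the model matches the observations perfectly wherever there is coverage, yet the attributable warming comes out larger than the observed mean, purely because the uncovered cell warms faster.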

The thing to remember about “it’s consistent with” is that “is not consistent with” is usually a severe problem for a theory precisely because “is consistent with” is such a low hurdle (and hence rather faint praise).

A stopped clock is consistent with a well-functioning clock at least twice a day.

;o) The example I would use is being able to differentiate cos(x) is consistent with being a mathematician. This is correct, but it doesn’t mean that person is a good (or even competent) mathematician.

The phrase “is consistent with” is a useful phrase in a scientific context, provided the audience are aware of what (and usually, how little) it means. I have noticed that many skeptics interpret the fact that observed temperature trends are “consistent with” the GCM ensemble as being some sort of ringing endorsement of the models, but I don’t think any climatologist actually means that. I suspect I have tried to explain that on blogs a number of times.

paulskio,

Does that still not potentially explain the discrepancy? If Nic’s model is constrained to match HadCRUT4, then he will still potentially be under-estimating the attributable anthropogenic warming.

Let’s emphasize Nic’s consistency clauses in the main para of his piece:

And then, by “being consistent” all the way, Nic stumbled upon a TCR that is consistent with every low-balling he did so far in his research.

Sometimes, you’re just lucky.

Actually, this is interesting

I had somewhat missed that Nic also seems to have tried to constrain his carbon cycle feedback to match recent observations. I did ask Nic what would happen if he ran his model with a higher TCR (say 2C) to see how much his carbon cycle assumptions influence his results.

Considering the amount of weasel wording in that para, it might be interesting to pay due diligence to the two endnotes:

The wording of the last footnote is intriguing. Let’s put it back in the claim it belongs:

No justification has been provided.

Not that it matters much to Matt King Coal, whom we predict will promote this study in a short while.

***

Coincidentally, there will be this hearing in a few days:

It will be interesting to see if Judy’s testimony will be consistent with Nic’s results.

In any case, she’s looking for some tips:

Expertise experts such as Judy only need a few pointers and a few days to make their testimonies consistent with the state-of-the-art science in just about any climate science field.

” I select the simple ESM’s key climate, and land and ocean carbon-cycle, sub-model parameters so that its simulated global temperature, heat uptake and carbon-cycle changes since preindustrial best match recent observational estimates, sourced largely from AR5.”

Optimisation is the root of all evil in statistics. If you only look at the parameter set that gives the best fit to the observations then over-fitting is potentially a pitfall (i.e. the realization of the noise in the observations may have a significant influence on the “best” set of parameters). But more importantly, while the set of “best” parameters in this sense may (or may not) be unique, there may be a wide range of other parameter settings that are almost as good.

One approach would be to determine the set of parameters that can plausibly simulate the observed quantities (given the uncertainties). If you can’t get a climate sensitivity > X using a combination of parameter values from this set, then you have evidence that climate sensitivity cannot be that high (providing you accept the model itself as reasonable).
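As a minimal sketch of that approach, the code below scans a parameter grid for a toy energy-budget model and keeps every combination that reproduces the observed warming within its uncertainty, rather than keeping only the single best fit. The model form (warming = ECS × (forcing − heat uptake) / F2x) is the standard energy-budget relation, but every number, the grid bounds and the two-sigma acceptance rule are assumptions made for illustration.

```python
F2X = 3.7          # W/m^2 per CO2 doubling
GHG_FORCING = 3.0  # W/m^2, PLACEHOLDER
HEAT_UPTAKE = 0.6  # W/m^2, PLACEHOLDER
OBS_WARMING = 0.85 # degC, PLACEHOLDER
OBS_SIGMA = 0.10   # degC, PLACEHOLDER

def simulated_warming(ecs, aerosol_forcing):
    """Toy energy-budget model: warming implied by a sensitivity and an aerosol forcing."""
    return ecs * (GHG_FORCING + aerosol_forcing - HEAT_UPTAKE) / F2X

# Integer-based grids avoid accumulating floating-point steps.
ecs_grid = [i / 10 for i in range(10, 61)]      # 1.0 to 6.0 degC
aerosol_grid = [-j / 10 for j in range(0, 16)]  # 0.0 to -1.5 W/m^2

# Keep every parameter pair that matches the observation within two sigma.
plausible = [
    (ecs, aer)
    for ecs in ecs_grid
    for aer in aerosol_grid
    if abs(simulated_warming(ecs, aer) - OBS_WARMING) <= 2 * OBS_SIGMA
]

max_ecs = max(ecs for ecs, _ in plausible)
min_ecs = min(ecs for ecs, _ in plausible)
print(f"{len(plausible)} plausible pairs, ECS between {min_ecs} and {max_ecs}")
```

The interesting output is the upper bound of the plausible set, not the best-fitting pair. In this toy setup the bound depends entirely on how negative the aerosol forcing is allowed to be, which shows how much such a result rests on accepting the model and its parameter ranges.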

I should point out “is consistent with” is not “weasel wording”, it is normal scientific parlance.

Dikran –

Glad to see your 3:38. I was just about to write something similar, as one thing that I notice when reading WUWT is how frequently “skeptics” label appropriate quantification of uncertainty – of the sort they rarely provide (may, could, might,..) – as “weasel words.”

And at least it’s better than “is not inconsistent with.”

“and its land carbon sink sub-model’s characteristics are consistent with feedback parameter estimates in a recent paper.[9]”

If it was me though, I would have pointed out that [9] is at the low end of modeled carbon cycle feedback estimates (something that Prof. Friedlingstein explicitly states in the abstract), in other words it is a bit of a cherry pick. Prof. Friedlingstein is a top carbon cycle researcher, and the paper is one that needs to be taken very seriously (especially as it is good news ;o), but it is a good idea to point out the questionable assumptions in your argument before your competitors do it for you!

Joshua – yes, I have the same experience. Scientists tend to be rather moderate in their claims and give lots of caveats and statements of uncertainty, that generally are misinterpreted in a rhetorical discussion. There are good reasons for scientists to do this and this is one of the “impedance mismatches” involved when scientists interface with politicians and other groups where rhetoric is a valid means of making decisions.

”

A stopped clock is consistent with a well-functioning clock at least twice a day.

”

But a man with two watches is never sure of the time.

==> “…where rhetoric is a valid means of making decisions.”

And where acknowledging and quantifying uncertainty is spun into a sign of weakness. Consider Trump’s success in presenting opinions with complete certainty in the face of overwhelming contradicting evidence.

One sure sign of “skepticism” (as opposed to skepticism) is when those who speak of the importance of acknowledging uncertainty then turn around and talk about appropriate quantification of uncertainty as “weasel words.”

It must be tough to resist the tendency to respond to exploitation of appropriate uncertainty from “skeptics” by avoiding any acknowledgement of uncertainty.

> “is consistent with” is not “weasel wording”, it is normal scientific parlance.

A wording can be [1] called weasel wording because of its usage, not because it uses a specific wording. In one context, a wording W can be perfectly fine, while in others it can be weasel wording [2]. That’s one of the beauties of using weasel wording [3].

Consistency is first and foremost a concept that belongs to formal semantics. It refers to theories or models that are not contradictory: two theories are consistent if one can’t derive contradictions between them. Scientific theories are not (interpreted) deductive systems: to establish that one set of propositions is consistent with another one will oftentimes be a judgement call. In Nic’s case, it’s quite clear that he’s referring to sets of numerical entities, not truth values.

Nic’s usage of “consistent with” clauses helps him hide the choices he made while appearing to justify them.

***

Normal scientific parlance is more than consistent with weasel wording. It is replete with it [4]:

http://www.jstor.org/stable/378102

As long as there’s a political component in science, there’s no reason to think that it will stop containing [5] weasel wording. This tradition may very well be [6] exacerbated by the downsizing of the editing process and the open science model.

[1]: Nothing’s easier than to argue from possibility.

[2]: Truisms can hide profound truths.

[3]: One out of how many, and what do I mean by beauty?

[4]: Perhaps, but how many is too many?

[5]: Double negatives to the rescue!

[6]: As you can see, I have no problem using weasel wording myself.

”

Nothing’s easier than to argue from possibility.

”

Nothing? That’s not impossible, but it’s implausible. At least it’s not inconsistent.

But since anything follows from a contradiction, it might be easier to argue from impossibility.

Willard, as Nic was presenting some science, his usage of “consistent with” was certainly “consistent with” the normal scientific usage of the phrase. As I said the thing that really deserved noting is that the estimate it was consistent with was at the low end of such estimates (as I suspect you were pointing out).

“Consistency is first and foremost a concept that belongs to formal semantics” it is probably better to try and work out what Nic actually meant by it, rather than to interpret it in a framework that you consider to be a more correct one, at least if your aim is to understand Nic’s intended meaning. Variants of Hanlon’s razor are also a useful guide (i.e. do not assume something is “weasel words” if there is a more charitable interpretation that remains plausible). As it happens “is not contradictory” is just what “is consistent” usually means in a scientific or statistical context.

Nic could have written “the values I chose do not contradict the findings of [9]”, but the “is consistent with” wording would be immediately recognisable by scientists and is the phrasing that most would use.

joshua and dikranmarsupial:

In this case though it is the skeptics who are saying the future is uncertain and the consensus scientists who are pushing back.

The uncertainty monster and all that.

Nic’s observational constrained ECS is within the IPCC range of 1.5 to 4.5 for ECS and I feel quite a bit of push back against that, just because it is at the low end of the range.

Laypersons like myself read all the back and forth and think the future climate seems pretty uncertain – period – and certainly uncertain in the range of 1.5 to 4.5.

I am sure you are right about uncertainty language in papers – but you sure don’t see it much in the lead up to Paris.

http://www.nytimes.com/interactive/projects/cp/climate/2015-paris-climate-talks/at-paris-climate-talks-an-abundance-of-options

The headline is “World leaders have 12 days to agree on plans to slow global warming.”

Etc.

On the other hand – that is must one persons perception, and like all people I am biased.

“just one persons perception” – fumble fingers

The D&A method scales modelled warming to HadCRUT4 warming, but only for grid cells with coverage, and determines a scaling factor which fits best.

For long-term trends matching the grids is sufficient to avoid biases due to, for example, polar amplification. The coverage was important for the comparison of models with observations for the recent decade because more warming took place in the Arctic than expected by just polar amplification. The long-term trends (more accurately: the 3-dimensional pattern of the trends) are used in Detection & Attribution (D&A).

I don’t think this is true. I think, at best, “skeptics” are saying that the future is uncertain, let’s not do anything until we know more, grrrrrowth. Others, on the other hand, are saying that it’s uncertain, but we have some understanding of what could happen, maybe we should consider doing something to avoid the possibility that the impacts could be severely damaging.

@-RickA

“So “natural” is changes in the sun, heliosphere, magnetic coupling, natural fires, volcanoes, orbital variations, clouds, ocean currents, etc.”

Okay, so ‘natural’ is any change in the energy balance that is caused by something independent of human activity.

Those are all measurable, and measured, parameters. It is straight-forward to determine if any have altered in a manner that could explain the observed warming.

@-“Secondly, I see a change of almost 1C between the peak MWP and the bottom of the LIA – which is more than the warming since 1950 (but not much).”

1C for local peaks and bottoms, but not global. The LIA was a minor feature of some S hemisphere regions and the MWP peak warmth was not globally synchronous.

@-“Ok – how much of the warming last century and this century is from CO2 released due to the MWP? That ended about 700 years ago.”

You can derive how much CO2 rises when Milankovitch triggered, and CO2 amplified, warming occurs at the end of a glacial period. You get about 80ppm increase for a 6-8 deg C temperature rise. Throw in a bit of Henry’s law and I think you get about 10ppm per deg C.
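The division behind that estimate, using only the numbers quoted in the comment, looks like this. It is a back-of-envelope sketch: it treats the glacial-interglacial CO2 change as a simple linear response to temperature and does not model the Henry's law part at all.

```python
co2_rise_ppm = 80.0           # glacial-to-interglacial CO2 increase, from the comment
warming_range_c = (6.0, 8.0)  # accompanying temperature rise, from the comment

# The larger temperature rise gives the smaller sensitivity, and vice versa.
low = co2_rise_ppm / warming_range_c[1]
high = co2_rise_ppm / warming_range_c[0]

print(f"about {low:.0f} to {high:.0f} ppm per degC")
```

The quoted "about 10ppm per deg C" sits at the low end of this simple range.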

But your question is… ill posed.

Any potential CO2 rise initiated by the MWP warming will have been arrested and reversed by the LIA. Warming will only increase the airborne CO2 fraction where it is maintained until equilibrium is reached.

This is an issue with the NL sensitivity paper discussed in this thread. How the carbon cycle will respond under climate conditions that are rare and extreme in the paleo record is uncertain. While this allows some optimistic projections which are – ahem – consistent with the modelling, it ignores the possibility that there is NO reduction in CO2 when/if CO2 emissions cease. The tectonic input and slow geological sequestration balance out at a new higher concentration and temperature.

RickA says: “In this case though it is the skeptics who are saying the future is uncertain and the consensus scientists who are pushing back.”

I don’t think this is actually true. Skeptics argue that climate sensitivity is low and focus only on estimates at the low end, which implies certainty that climate sensitivity is low. The mainstream scientists’ position is that there is a much broader range of values for climate sensitivity that are consistent with (parts of) what we know about climate. So the mainstream scientists are arguing that the uncertainty is greater than admitted by the skeptics.

Fig 1. The uncertainty monster.

Was hoping I could include an image, never mind, here is the uncertainty monster. ;o)

Scientists always talk about uncertainties and the uncertainty monster is the favourite pet of the mitigation sceptics. The difference is that scientists use uncertainty to express the range of values that fits the evidence, while the mitigation sceptics use it to pretend nothing will happen, which is very weird because the outcome of every single political or personal decision is uncertain.

When you start seeing evidence and arguments as an attempt to understand the world, and not as push-back and excuses, you have made the first step towards becoming a more rational than ideological person.

Dikranm

Usually if you just post the url on its own, the image displays in the comment:

BBD cheers. Fearsome chap, isn’t he?

> As it happens “is not contradictory” is just what “is consistent” usually means in a scientific or statistical context.

Agreed. The main difference between the two expressions is the lack of double negatives in “is consistent.” The main difference between “is not contradictory” in logic and in empirical science is that in logic it refers to a deduction, whereas in empirical science it denotes something else. In Nic’s case, it is a selection: the subset of values he selected is “consistent with” the set in which he picked his values.

That’s quite obvious, and carries very little information. To see it, remove all the consistency claims from the para:

As you can see, this para describes what Nic did. There are gaps in the specification, but I’m sure that Nic would complete them when needed. The main difference is that the argumentative tenor of his specification is gone, and that all his choices are made explicit. There is simply no reason to insist so much in the consistency of the selection when you can cite your sources.

If that’s also something we can read in the scientific lichurchur, then so much the worse for the scientific lichurchur.

Victor

For long-term trends matching the grids is sufficient to avoid biases

Yes, the initial comparison shouldn’t be coverage biased (though it may be, due to the use of SST versus SAT). But the output of the D&A process is a scaling factor, which is then applied to the model global average for the anthropogenic historical run to find the amount of warming attributed. In this step, if they use the full global average, this should be larger than the HadCRUT4-coverage global average.

Image URLs with an image extension like .jpg or .gif will embed automatically. I’m not sure about .png or .bmp. But definitely not if the URL doesn’t have an image extension.

Here’s Judy’s monster:

Willard ” In Nic’s case, it is a selection: the subset of values he selected is “consistent with” the set in which he picked his values.”

You are missing an important point here. The set of values from which Nic picked his set was a superset of those considered plausible by [9]; he could have picked a set that was outside the range considered plausible by [9], so the statement does actually convey information. The value of saying that his values are consistent with those of [9] is to convey that the values are considered plausible by an expert on the carbon cycle.

“As you can see, this para describes what Nic did.” no, it describes your interpretation of what Nic did.

” There is simply no reason to insist so much in the consistency of the selection when you can cite your sources.”

You don’t appear to understand the scientific usage of the phrase, Nic wasn’t insisting anything, he was just showing that there does exist a carbon cycle specialist that would agree the value used was plausible. That is in no way overstating his true position in that respect. He could only reference his source in this case if he used a point estimate explicitly provided by [9].

“If that’s also something we can read in the scientific lichurchur, then so much the worse for the scientific lichurchur.”

Perhaps you ought to become more familiar with scientific/statistical terminology/usage if you are going to make a point of analyzing scientific exchanges? BTW “consistent” has an everyday usage which is “consistent with” its scientific usage.

To clarify a point in my last post:

”There is simply no reason to insist so much in the consistency of the selection when you can cite your sources.”

Had Nic written “I used a value lambda = 2.178 [9]”, that would imply that [9] specifically advocated that particular value, and hence would be misleading unless it actually did so explicitly. Saying that the value used was “consistent with” [9] is actually a much weaker statement, implying a weaker endorsement. Nic could have written “the value used was within the range considered plausible by [9]”, but that is equivalent to “is consistent with [9]” in common scientific parlance and rather more verbose.

@-dikranmarsupial

“… it is probably better to try and work out what Nic actually meant by it, rather than to interpret it in a framework that you consider to be a more correct one, at least if your aim is to understand Nic’s intended meaning.”

The desire to understand the intentions and meaning behind the actions of another person is a very strong human motivation. It is probably impossible and inappropriate when applied to a scientific paper on this contentious subject.

Whatever the intention of the word use, as you correctly identify, it is limited to “the values I chose do not contradict the findings of [9]”. Or at least the two statements are consistent with each other.

It is the lower limit, the minimum requirement that justifies bothering to do the research at all. In the absence of any further argument in favour of that choice, whatever the intention of the writer, it omits information about the reason for the selection made.

Willard’s question is apposite as usual: are there any other choices that could be made that would also be ‘consistent’ with other findings that could give a LOWER climate sensitivity, rate of warming and concentration reductions?

If not, then the lack of better justifications for the models and methods used may raise questions of intentionality.

dikranmarsupial: Pictures don’t always work… remember some people will believe anything;

Click to access jdm15923a.pdf

I wondered where they all went, does that mean the Vogons are on their way?

izen, as I said, the problem isn’t the “is consistent with”, it is not mentioning that the thing it is consistent with is at the lower end of the spectrum (as explicitly acknowledged in the abstract). Hanlon’s razor is a good idea, try to find the most charitable explanation of what is actually written that remains plausible and assume that is what was actually meant. If nothing else it guards against making incorrect claims of nefarious intent based on circumstantial evidence, so it is strategically a good idea as well as being the right thing to do anyway.

anoilman – LOL, I’ll have to read that paper! (I enjoyed Frankfurt’s little book)

“Any evidence-based argument that is more inclined to admit one type of evidence or argument rather than another tends to be biased. Parallel evidence-based analysis (sic) of competing hypotheses provides a framework whereby scientists with a plurality of viewpoints participate in an assessment. In a Bayesian analysis with multiple lines of evidence, it is conceivable that there are multiple lines of evidence that produce a high confidence level for each of two opposing arguments, which is referred to as the ambiguity of competing certainties. If uncertainty and ignorance are acknowledged adequately, then the competing certainties disappear. Disagreement then becomes the basis for focusing research in a certain area, and so moves the science forward.”

(Curry and Webster, BAMS, December 2011)

Move the science forward. Admit all types of evidence and arguments to avoid bias. Make competing certainties disappear. Adequately acknowledge the monster.

“The monster is too big to hide, exorcise, or simplify.”

(Curry and Webster, BAMS, December 2011)

Don’t panic.

Just don your peril sensitive sunglasses.

Required reading for this sort of thing, DM.

RickA,

It’s more simple than you think.

The world has warmed.

If absent any human contribution, the world would have warmed anyway, then attribution is less than 100% human.

If absent any human contribution, the world would have cooled, then attribution is greater than 100% human.

The actual assessment is that natural effects are a very slight cooling over the period, hence attribution is 100-110% human.

See http://www.realclimate.org/index.php/archives/2013/10/the-ipcc-ar5-attribution-statement/ for more
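To put illustrative numbers on the three cases above, here is a minimal sketch. It assumes the contributions simply add (observed = human + natural), and the warming figures are made up for the example rather than taken from AR5; the function name is likewise hypothetical.

```python
def human_attribution(observed_warming, natural_contribution):
    """Fraction of the observed warming attributed to human activity.

    Assumes contributions add linearly: observed = human + natural.
    """
    if observed_warming == 0:
        raise ValueError("observed warming must be non-zero")
    return (observed_warming - natural_contribution) / observed_warming


# Hypothetical values in deg C, chosen only to illustrate the three cases.
observed = 0.6
cases = {
    "natural warming": 0.1,    # world would have warmed anyway
    "no natural change": 0.0,  # natural factors are neutral
    "natural cooling": -0.03,  # world would have cooled slightly
}
for label, natural in cases.items():
    print(f"{label}: {human_attribution(observed, natural):.0%}")
```

With a natural warming contribution the human share comes out below 100%, with none it is exactly 100%, and with a slight natural cooling it comes out above 100%, which is the sense in which attribution can exceed 100%.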

HTML, on the other hand, turns out to be more difficult than I thought. Memo to self to use prose rather than symbols in future.

> You don’t appear to understand the scientific usage of the phrase […]

In return, you don’t seem to understand how specifications are written in technical documentation, how citations work in scholarship, how argumentative function can’t be reduced to usage, that appealing to common usage is in this case fallacious, and that your ad hominems will be held against you. However, please continue, since that would be quite amusing.

Had Nic taken something outside [9], his appeal to the authority of “a recent paper” would have been quite moot. Besides, all his consistency remarks are meta, and belong in a discussion.

Had he created a para where he discussed his results, the first question he’d have to ask is: are his overall results “consistent with” other estimates of TCR and ECS? There’s an issue of compositionality here: that every bit of his analysis is consistent with the lichurchur may not imply his results are. A second question needs to be: in what way are these results more “realistic”? As far as I can see, Nic offered no explicit argument for his main claim. A third could very well be: are there other properties than consistency that such an analysis would need to meet? In other words, consistency is cheap and only suffices for lukewarm marketing efforts.

That Nic’s sleazy rhetoric could be defended is beyond me.

One only has to read lichurchur from decades ago to see that “but that’s how scientists write” is moot at best. While it may be easy for empirical scientists to snob social scientists’ writing, please be assured that the feeling can easily be reciprocated. Such a slugfest would not be “unprecedented,” if you know what I mean.

What ad-hominems? Saying that you appear not to understand a particular piece of scientific terminology is in no way an ad-hominem. There are plenty of items of terminology that I don’t understand [many of which you use on a regular basis] and I wouldn’t regard someone telling me that as an ad-hominem, just as someone pointing out that there is a specific terminological issue that I don’t understand. Perhaps I don’t understand the meaning of ad-hominem, that is possible.

” While it may be easy for empirical scientists to snob social scientists’ writing,”

I have no idea where that comes from, empirical scientists and statisticians having adopted a particular phrase (generally indicating a very low level of endorsement/agreement) in no way suggests that they are better in some way than social scientists.

I think that referencing “weasels” suggests judgement of intent (a deliberate effort to avoid accountability) which in many cases is unknowable.

That is, of course, different than arguing that there was an insufficient quantification of uncertainty (which saying “is consistent with” is often…well…consistent with).

In this particular case, while I can recognize the frame for asserting that there was a sub-optimal treatment of uncertainty, I don’t have the skillz to judge for myself. I certainly recognize a pattern in the past where Nic’s treatment of uncertainty was sub-optimal, but generalizing to specifics from general patterns is problematic if I can’t evaluate relevant details.

But another pattern I see is a tendency to (selectively) demonize qualification on uncertainty (may, could, might, if this trend continues, etc.), as something a “weasel” does, and I think that is an unfortunate by-product of motivated reasoning of the sort so often manifest at WUWT. Not to say that was the case here, but I’m not sure that there is any value added by invoking weasels.